Release 2024.10

Release Information

- Release date of Data Quality & Observability Classic 2024.10: October 29, 2024

- Release notes publication date: September 23, 2024

Warning

Some customers have encountered issues while upgrading to Data Quality & Observability Classic 2024.10 due to a change in our Flyway library that is not backwards compatible. The fix for this issue is included in the Data Quality & Observability Classic 2024.11.1 patch. As always, we recommend backing up and restoring your Metastore before upgrading Data Quality & Observability Classic versions.

Note The above issue only impacts upgrades to Data Quality & Observability Classic 2024.10. New installations will not encounter this issue.

Enhancements

Pushdown

- SAP HANA Pushdown is now generally available.

- When creating and running DQ Jobs on SQL Server Pushdown connections, you can now perform schema, profile, and rules checks.

- You can now scan for fuzzy match duplicates in DQ Jobs created on BigQuery Pushdown connections.

- You can now scan for numerical outliers in DQ Jobs created on Trino Pushdown connections.

- DQ Jobs created on Snowflake Pushdown connections now support union lookback for advanced outlier configurations.

- DQ Jobs created on Snowflake Pushdown connections now support source to target validation to ensure data moves consistently through your data pipeline and identify changes when they occur.

Integration

- You can now define custom integration data quality rules, which are also known as aggregation paths, in the Collibra Platform operating model setting to allow you to view data quality scores for assets other than databases, schemas, tables, and columns.

- To allow you to manage the scope of the DGC resources to which OAuth can grant access during the integration, a new OAuth parameter in the Web ConfigMap is now set to DQ_DGC_OAUTH_DEFAULT_REQUEST_SCOPE: "dgc.global-manage-all-resources" by default. This configuration grants Data Quality & Observability Classic access via OAuth to all DGC resources during the integration. For more granular control over the DGC resources to which Data Quality & Observability Classic is granted access via OAuth, we plan to introduce additional allowable values in a future release.

- Users of the Quality tab in Collibra Platform who do not have a Data Quality & Observability Classic account can now view the history table to track the evolution of the quality score of a given asset.

- When using Replay to run DQ Jobs over a defined historical period, for example 5 days in the past, the metrics from each backrun DQ Job are included in the DQ History table and the quality calculation.

- After integrating a dataset from Data Quality & Observability Classic and Collibra Platform, you can now see the number of passing and failing rows for a given rule on the Data Quality Rule asset page.

- The JSON of a dataset integration between Data Quality & Observability Classic and Collibra Platform now shows the number of passing and breaking records.

Important You need a minimum of Collibra Platform 2024.10 and Data Quality & Observability Classic 2024.07.

Jobs

- You can now edit the schedule details of DQ Jobs from the Jobs Schedule page.

- A banner now appears when a data type is not supported.

Rules

- Data Class Rules now have a maximum character length of 64 characters for the Name option and 256 for the Description.

- Email Data Classes where the email contains a single character domain name now pass validation. For example, [email protected]

- The Rule Definitions page now has a Break Record Preview Available column to make it easier to see when a rule is eligible for previewing break records.

- You can now use the search field to search for Rule Descriptions on the Rule Definitions page.

Alerts

- You can now toggle individual alerts from the Active column on the Alert Builder page to improve control over when alerts are sent. This can prevent unnecessary alerts from being sent on certain occasions, such as during setup and debugging.

Dataset Manager

- The Dataset Manager table now contains a searchable and sortable column called Connection Name to help identify your datasets more easily.

- We aligned the roles and permissions requirements for the Dataset Manager API.

- PUT /v2/updatecatalogobj requires its users to have ROLE_ADMIN, ROLE_DATA_GOVERNANCE_MANAGER, or ROLE_DATASET_ACTIONS.

- PUT /v2/updatecatalog requires its users to be the dataset owner or have ROLE_ADMIN or ROLE_DATASET_ACTIONS.

- DELETE /v2/deletedataset requires its users to be the dataset owner or have ROLE_ADMIN or ROLE_DATASET_MANAGER.

- PATCH /v2/renameDataset requires its users to be the owner of the source dataset or have ROLE_ADMIN, ROLE_DATASET_MANAGER, or ROLE_DATASET_ACTIONS.

- POST /v2/update-run-mode requires its users to have ROLE_DATASET_TRAIN, ROLE_DATASET_ACTIONS, or dataset access.

- POST /v2/update-catalog-data-category requires its users to have ROLE_DATASET_TRAIN, ROLE_DATASET_ACTIONS, or dataset access.

- PUT /v2/business-unit-to-dataset requires its users to have ROLE_DATASET_ACTIONS or dataset access.

- POST /v2/business-unit-to-dataset requires its users to have ROLE_DATASET_ACTIONS or dataset access.

- POST /dgc/integrations/trigger/integration requires its users to have ROLE_ADMIN, ROLE_DATASET_MANAGER, or ROLE_DATASET_ACTIONS.

- POST /v2/postjobschedule requires its users to have ROLE_OWL_CHECK and either ROLE_DATASET_ACTIONS or dataset access.
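The role requirements listed above can be read as a lookup table. The following is a minimal sketch that encodes them in Python, for example to sanity-check a service account before calling the API. The Requirement class and the is_allowed helper are hypothetical names introduced for illustration and are not part of the product; the sketch only restates the list above and does not call any endpoint.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    any_role: frozenset = frozenset()   # holding any one of these roles is sufficient
    all_roles: frozenset = frozenset()  # roles that are required in every case
    owner_ok: bool = False              # being the dataset owner is sufficient
    access_ok: bool = False             # having dataset access is sufficient


fs = frozenset

# Hypothetical encoding of the requirements listed in these release notes.
REQUIREMENTS = {
    ("PUT", "/v2/updatecatalogobj"): Requirement(
        any_role=fs({"ROLE_ADMIN", "ROLE_DATA_GOVERNANCE_MANAGER", "ROLE_DATASET_ACTIONS"})),
    ("PUT", "/v2/updatecatalog"): Requirement(
        any_role=fs({"ROLE_ADMIN", "ROLE_DATASET_ACTIONS"}), owner_ok=True),
    ("DELETE", "/v2/deletedataset"): Requirement(
        any_role=fs({"ROLE_ADMIN", "ROLE_DATASET_MANAGER"}), owner_ok=True),
    ("PATCH", "/v2/renameDataset"): Requirement(
        any_role=fs({"ROLE_ADMIN", "ROLE_DATASET_MANAGER", "ROLE_DATASET_ACTIONS"}),
        owner_ok=True),
    ("POST", "/v2/update-run-mode"): Requirement(
        any_role=fs({"ROLE_DATASET_TRAIN", "ROLE_DATASET_ACTIONS"}), access_ok=True),
    ("POST", "/v2/update-catalog-data-category"): Requirement(
        any_role=fs({"ROLE_DATASET_TRAIN", "ROLE_DATASET_ACTIONS"}), access_ok=True),
    ("PUT", "/v2/business-unit-to-dataset"): Requirement(
        any_role=fs({"ROLE_DATASET_ACTIONS"}), access_ok=True),
    ("POST", "/v2/business-unit-to-dataset"): Requirement(
        any_role=fs({"ROLE_DATASET_ACTIONS"}), access_ok=True),
    ("POST", "/dgc/integrations/trigger/integration"): Requirement(
        any_role=fs({"ROLE_ADMIN", "ROLE_DATASET_MANAGER", "ROLE_DATASET_ACTIONS"})),
    ("POST", "/v2/postjobschedule"): Requirement(
        all_roles=fs({"ROLE_OWL_CHECK"}),
        any_role=fs({"ROLE_DATASET_ACTIONS"}), access_ok=True),
}


def is_allowed(method, path, roles, is_owner=False, has_access=False):
    """Return True if the caller meets the listed requirement for the endpoint."""
    req = REQUIREMENTS[(method, path)]
    roles = set(roles)
    if not req.all_roles <= roles:
        return False
    return (bool(req.any_role & roles)
            or (req.owner_ok and is_owner)
            or (req.access_ok and has_access))
```

For example, is_allowed("POST", "/v2/postjobschedule", {"ROLE_OWL_CHECK"}, has_access=True) returns True, while the same call without dataset access or ROLE_DATASET_ACTIONS returns False.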

Connections

- The authentication dropdown menu for any given connection now displays only its supported authentication types.

- We've upgraded the following drivers to the versions listed:

- Db2 4.27.25

- Snowflake 3.19.0

- SQL Server 12.6.4 (Java 11 only)

Note

If you use additional encryption algorithms for JWT authentication, you must set one of the following parameters during your deployment of Data Quality & Observability Classic, depending on your deployment type:

Helm-based deployments

Set the following parameter in the Helm Chart: --set global.web.extraJvmOptions="-Dnet.snowflake.jdbc.enableBouncyCastle=true"

Standalone deployments

Set the following environment variable in the owl-env.sh: export EXTRA_JVM_OPTIONS="-Dnet.snowflake.jdbc.enableBouncyCastle=true"

Note

While the Java 8 version is not officially supported, you can replace the SQL Server driver in the /opt/owl/drivers/mssql folder with the Java 8 version of the supported driver. You can find Java 8 versions of the supported SQL Server driver on the Maven Repository.

Admin Console

- A new access control layer, Require DATASET_ACTIONS role for dataset management actions, is available from the Security Settings page. When enabled, a new out-of-the-box role, ROLE_DATASET_ACTIONS, is required to allow its users to edit, rename, publish, assign data categories and business units, and enable integrations from the Dataset Manager.

- A new out-of-the-box role, ROLE_ADMIN_VIEWER, allows users who are assigned to it to access the following Admin Console pages, but restricts access to all others:

- Actions

- All Audit Trail subpages

- Dashboard

- Inventory

- Schedule Restrictions

- Usage

Note Users with ROLE_ADMIN_VIEWER cannot access the pages to which the quick access buttons on the Dashboards page are linked.

Note Users with ROLE_ADMIN_VIEWER cannot add or delete schedule restrictions.

- You can now set both size- and time-based data purges from the Data Retention Policy page of the Admin Console. Previously, you could only set size-based data retention policies.

- The Data Retention Policy now supports auto-clean in both single- and multi-tenant environments.

APIs

- We’ve made several changes to the API documentation. First, we aligned the role checks between the Product APIs (V3 endpoints) and the Data Quality & Observability Classic UI. We’ve also enhanced the documentation in Swagger to include more detailed descriptions of endpoints. Lastly, we reproduced the Swagger documentation of the Product API in the Collibra Developer Portal to ensure a more unified user experience with the broader Collibra platform and allow for easier scalability of API documentation in the future.

Fixes

Integration

- The Overview - History section of Data Quality Job assets now displays the correct month of historical data when an integration job run occurs near the end of a given month.

Jobs

- DQ Jobs on SAP HANA connections with SQL referencing table names containing semicolons ; now run successfully when you escape the table name with quotation marks " ". For example, the SQL query select * from TEST."SPECIAL_CHAR_TABLE::$/-;@#%^&*?!{}~\+=" now runs successfully.

- You can now run DQ Jobs that use Amazon S3 as the secondary dataset with Instance Profile authentication.
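To illustrate the SAP HANA fix above, here is a minimal sketch of wrapping a table name in quotation marks before building a query string. The quote_identifier helper is a hypothetical name, and doubling any embedded quotation mark follows the standard SQL convention for delimited identifiers; that step is an assumption, since these notes only mention wrapping the name in quotation marks.

```python
def quote_identifier(name: str) -> str:
    """Wrap an identifier in double quotes, doubling any embedded double quote."""
    return '"' + name.replace('"', '""') + '"'


# Table name taken from the example in these release notes.
table = r"SPECIAL_CHAR_TABLE::$/-;@#%^&*?!{}~\+="
query = f"select * from TEST.{quote_identifier(table)}"
print(query)
```

The printed query matches the example in the fix, with the semicolon kept inside the quoted table name rather than read as a statement terminator.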

Rules

- Native rules on DQ Jobs created on connections authenticated by password manager now run successfully and return all related break records when their conditions are met.

Alerts

- The Assignee column is no longer included in the alert email for Rule Status and Condition alerts with rule details enabled.

APIs

- Using the POST /v2/controller-db-export call on a dataset with an alert condition, followed by the POST /v2/controller-db-import call, now returns a successful 200 response instead of an unexpected JSON parsing error.

Latest UI

- When running DQ Jobs on NFS connections, data files with the date format ${yyyy}${MM}${dd} within their file name are now supported.

- Native Rules now display the variable name of parameters such as @runId and @dataset with the actual value in the Condition column of the Rules tab on the Findings page.

- The Jobs Schedule page now shows the time zone offset (+ or - a number of hours) in the Last Updated column. Additionally, the TimeZone column is now directly to the right of the Scheduled Time column to improve its visibility.

- You can now sort columns on the Job Schedule page.

- The Agent Configuration, Role Management - Connections, Business Units, and Inventory pages of the Admin Console now have fixed column headers, and the Actions button and horizontal scrollbar are now visible at all times.

- After adding or deleting rules, the rule count on the metadata bar now reflects any updates.
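As an illustration of the ${yyyy}${MM}${dd} file name format mentioned for NFS connections above, the sketch below expands those three tokens for a given run date. The resolve_file_name helper and the orders_ file name are hypothetical, and these notes do not describe how the product resolves the tokens internally, so treat this only as a way to preview which file a pattern would point to.

```python
from datetime import date

# Map each file name token to the matching strftime directive.
TOKENS = {"${yyyy}": "%Y", "${MM}": "%m", "${dd}": "%d"}


def resolve_file_name(pattern: str, run_date: date) -> str:
    """Replace ${yyyy}, ${MM}, and ${dd} with the zero-padded parts of run_date."""
    for token, fmt in TOKENS.items():
        pattern = pattern.replace(token, run_date.strftime(fmt))
    return pattern


print(resolve_file_name("orders_${yyyy}${MM}${dd}.csv", date(2024, 10, 29)))
# orders_20241029.csv
```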

Features in preview

- The Rule Workbench now contains an additional query input field called “Filter,” which allows you to narrow the scope of your rule query so that only the rows you specify are considered when calculating the rule score. A filter query not only helps to provide a better representation of your quality score but improves the relevance of your rule results, saving both time and operational costs by reducing the need to create multiple datasets for each filter.

Important This feature is currently available as a public preview option. For more information about features in preview, see Previews at Collibra.
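The effect of a filter on a rule score can be pictured with a small, purely illustrative sketch. The rule_score function, the sample rows, and the scoring formula (the share of in-scope rows that do not break the rule) are all assumptions made for this example; the actual score calculation in the Rule Workbench is not described in these notes.

```python
def rule_score(rows, breaks_rule, row_filter=None):
    """Return the percentage of in-scope rows that do not break the rule."""
    # Only rows matching the filter are considered; no filter means all rows.
    scoped = [r for r in rows if row_filter is None or row_filter(r)]
    if not scoped:
        return 100.0
    breaking = sum(1 for r in scoped if breaks_rule(r))
    return 100.0 * (len(scoped) - breaking) / len(scoped)


# Hypothetical sample data.
rows = [
    {"region": "EU", "amount": 10},
    {"region": "EU", "amount": -5},
    {"region": "US", "amount": 7},
    {"region": "US", "amount": 3},
]


def negative_amount(row):
    return row["amount"] < 0


unfiltered = rule_score(rows, negative_amount)                              # 75.0
eu_only = rule_score(rows, negative_amount, lambda r: r["region"] == "EU")  # 50.0
```

In this sketch, the same rule over the same dataset yields a different score once the filter restricts the scope to the EU rows, without creating a separate dataset for that subset.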

Known limitations

- In this release, table names with spaces are not supported because of dataset name validation during the creation of a dataset. This will be addressed in an upcoming release.

DQ Security

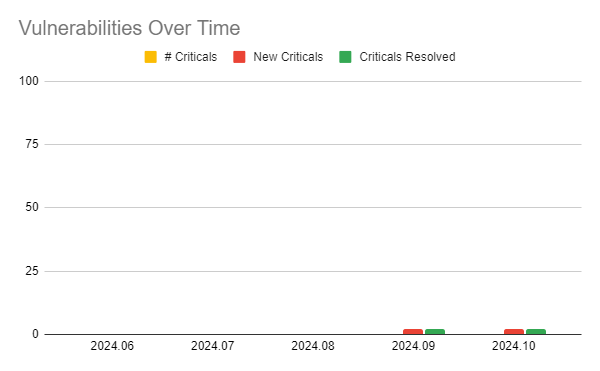

The following image shows a chart of Collibra DQ security vulnerabilities arranged by release version.

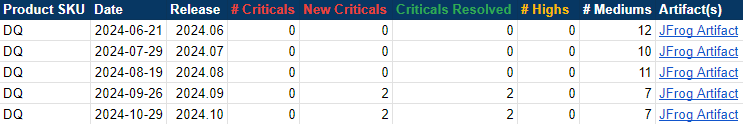

The following image shows a table of Collibra DQ security metrics arranged by release version.