Release 2024.07

Release Information

- Release date of Data Quality & Observability Classic 2024.07: July 30, 2024

- Publication dates:

- Release notes: June 24, 2024

- Documentation Center: July 4, 2024

Highlights

Important

As of this release, the classic UI is no longer available.

Important

To improve the security of Data Quality & Observability Classic, we removed the default keystore password from the installation packages in this release. In general, if your organization uses a SAML or SSL keystore, we recommend that you provide a custom keystore file. However, if your organization has a Standalone installation of Data Quality & Observability Classic and plans to continue using the default keystore, please contact Support to receive the default password to allow you to install and successfully use Data Quality & Observability Classic versions 2024.07 and newer. If your organization has a containerized (Cloud Native) installation of Data Quality & Observability Classic, you can continue to leverage the default keystore file, as the latest Helm Charts have the default password set within the values.yaml file.

- Integration

- You can now select the data quality layers that will have corresponding assets created in Collibra Platform either automatically upon a successful integration or only when a layer contains breaking records. Selecting individual layers instead of including all of them by default helps prevent an overwhelming number of assets from being created automatically.

Admins can configure this on the Integration Setup wizard of the Integrations page in the Admin Console.

- Admins can also map file and database views from Data Quality & Observability Classic to corresponding assets in Collibra Platform Catalog. This allows for out-of-the-box relations to be created between their file- or view-based Data Quality & Observability Classic datasets and the file table and database view assets (and their columns) in Collibra Platform.

Note Collibra Platform 2024.07 or newer is required for view support.

- Pushdown

- We're delighted to announce that Pushdown for SQL Server is now generally available!

Pushdown is an alternative compute method for running DQ Jobs, where Data Quality & Observability Classic submits all of the job's processing directly to a SQL data warehouse. When all of your data resides in the SQL data warehouse, Pushdown reduces the amount of data transfer, eliminates egress latency, and removes the Spark compute requirements of a DQ Job.

Enhancements

Explorer

- From the Sizing step in Explorer, you can now change the agent of a Pullup job by clicking the Agent Details field and selecting from the available agents listed in the Agent Status modal.

- You can now use custom delimiters for remote files in the latest UI.

- When using the Mapping layer for remote file connections, you can now add -srcmultiline to the command line to remove empty rows from the source to target analysis.

- We added a Schema tab to the Dataset Overview to display table schema details after clicking Run in the query box. (ticket #145266)

- When the number of rows in a dataset exceeds 999, you can now hover your cursor over the abbreviated number to view the precise number of rows. (ticket #125753)

Rules

- Break record previews for out-of-the-box Data Type rules now display under the Rules tab on the Findings page when present. (idea #DCC-I-2155)

- When a dataset is renamed from the Dataset Manager, any explicit references to the dataset name for all primary and secondary rules are updated. (idea #DCC-I-2624)

- You can now rename rules from the Actions dropdown menu on the Dataset Rules page. Updated rule names cannot match the name of an existing rule and must contain only alphanumeric characters without spaces.
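The two naming constraints above can be checked before submitting a rename. The following is a minimal sketch, not the product's own validation logic. It assumes that "alphanumeric" means ASCII letters and digits and that name comparison is case-sensitive; the function name and sample rule names are hypothetical.

```python
import re

# Assumption: "alphanumeric" is read as ASCII letters and digits only.
_RULE_NAME_PATTERN = re.compile(r"[A-Za-z0-9]+")


def is_valid_rule_name(new_name: str, existing_names: set[str]) -> bool:
    """Return True if new_name satisfies both renaming constraints."""
    if _RULE_NAME_PATTERN.fullmatch(new_name) is None:
        return False  # empty, or contains spaces or other non-alphanumeric characters
    # Assumption: the comparison against existing rule names is case-sensitive.
    return new_name not in existing_names


existing = {"NullCheck", "EmailFormat"}  # hypothetical rule names on a dataset
print(is_valid_rule_name("NullCheckV2", existing))  # new, alphanumeric name
print(is_valid_rule_name("Null Check", existing))   # contains a space
print(is_valid_rule_name("EmailFormat", existing))  # matches an existing rule
```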

Alerts

- We added a new Rule Status alert to allow you to track whether your rule condition is breaking, throwing an exception, or passing.

- When configuring Condition-type alerts, you now have the option to Add Rule Details, which includes the following details in the email alert (idea #DCC-I-732):

- Rule name

- Rule condition

- Total number of points to deduct from the quality score when breaking

- Percentage of records that are breaking

- Number of breaking records

- Assignment Status

- Admins can now set a custom alert email signature from the Alerts page in the Admin Console. (idea #DCC-I-2400)

Profile

- Completeness percentage is now listed on the dataset Profile page in the latest UI.

Findings

- We added a Passing Records column to the rule break table under the Rules tab on the Findings page to show the number of records that passed a rule. This enhancement simplifies the calculation of the total number of records, both passing and breaking. Additionally, we renamed the Records column to Breaking Records. (idea #DCC-I-2223)

- You can now download CSV files and copy signed links of rule break records on secure S3 connections, including NetApp, MinIO, and Amazon S3.

Scorecards

- To improve page navigation, we added a dedicated Add Page button to the top right corner of the Scorecards page and moved the search field from the bottom of the scorecards dropdown menu to the top.

Reports

- When an admin enables Dataset Security in Security Settings, the Dataset Dimension and Column Dimension dashboards display only the datasets, and their associated data, to which users have explicit access.

Jobs

- The names of job log export files now begin with a timestamp. For job logs exported in bulk from the Jobs page, the timestamp represents when the file was downloaded. For job logs of individual jobs, the timestamp represents the UpdateTimestamp, and the file name also includes the first 25 characters of the dataset name.

Dataset Manager

- Admins can now update dataset hosts in bulk by selecting Bulk Manage Host from the Bulk Actions dropdown menu and specifying a new host URL.

Integration

- When datasets do not have an active Collibra Platform integration, you can now enable dataset integrations in bulk from the Dataset Manager page by clicking the checkbox option next to the datasets you wish to integrate, then selecting Bulk Enable Integration from the Bulk Actions dropdown menu.

- Additionally, when datasets have an active integration, you can now submit multiple jobs to run by selecting Bulk Submit Integration Jobs from the Bulk Actions dropdown menu.

- We improved the score calculation logic of Data Quality Rule assets.

- When viewing Rule assets and assets of data quality layers in Collibra Platform, the Rule Status now displays either Passing, Breaking, Learning, or Suppressed. Previously, rules and data quality layers without any breaks displayed an Active status, but that is now listed as Passing. (ticket #137526)

Pushdown

- You can now scan for exact match duplicates in BigQuery Pushdown jobs.

- You can now scan for shapes and exact match duplicates in SAP HANA Pushdown jobs. Additionally, we’ve added the ability to archive duplicates and rule break records to the source SAP HANA database to allow you to easily identify and take action on data that requires remediation.

Note An enhancement to enable scanning for fuzzy match duplicates in BigQuery Pushdown jobs is planned for Data Quality & Observability Classic 2024.10.

SQL Assistant for Data Quality

- We added AI_PLATFORM_PATH to the Application Configuration Settings to allow Data Quality & Observability Classic users who do not have a Collibra Platform integration to bypass the integration path when this flag is set to FALSE.

- When set to TRUE (default), code will hit the integration or public proxy layer endpoint.

- When set to FALSE, code will bypass the integration path.

Identity Management

- We removed the Add Mapping button from the AD Security Settings page in the Admin Console.

- When dataset security is enabled and a dataset does not have a previous successful job run, users without explicit access to it will not see it when they use the global search to look it up.

APIs

- You must have ROLE_ADMIN to update the host of one or many datasets using the PATCH /v3/datasetDefs/batch/host call. Any updates to the hosts of datasets are logged in the Dataset Audit Trail.

- By using the Dataset Definitions API, admins can now manage the following in bulk:

- Agent

- PATCH /v3/datasetDefs/batch/agent

- Host

- PATCH /v3/datasetDefs/batch/host

- Spark settings

- PATCH /v3/datasetDefs/batch/spark
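As an illustration of the bulk endpoints listed above, the following sketch builds (but does not send) a PATCH request with the Python standard library. Only the HTTP method and the three paths come from these release notes. The base URL, the bearer-token header, and the JSON payload fields are hypothetical placeholders; consult the API documentation for the actual request schema.

```python
import json
import urllib.request

BASE_URL = "https://dq.example.com"  # hypothetical host for illustration only
BATCH_RESOURCES = {"agent", "host", "spark"}  # the three bulk paths listed above


def build_batch_patch(resource: str, payload: dict, token: str) -> urllib.request.Request:
    """Build a PATCH request for /v3/datasetDefs/batch/<resource> without sending it."""
    if resource not in BATCH_RESOURCES:
        raise ValueError(f"unsupported batch resource: {resource!r}")
    return urllib.request.Request(
        url=f"{BASE_URL}/v3/datasetDefs/batch/{resource}",
        data=json.dumps(payload).encode("utf-8"),
        method="PATCH",
        headers={
            "Content-Type": "application/json",
            # Assumption: bearer-token authentication for a user with ROLE_ADMIN.
            "Authorization": f"Bearer {token}",
        },
    )


# Hypothetical payload shape; the real field names may differ.
request = build_batch_patch(
    "host",
    {"datasets": ["sales_orders", "customers"], "host": "new-db-host.example.com"},
    token="<token>",
)
print(request.get_method(), request.full_url)
```

Passing the built request to urllib.request.urlopen would send it; the sketch stops short of that so it can be run without a live instance.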


Platform

- To ensure security compliance for Data Quality & Observability Classic deployments on Azure Kubernetes Service (AKS), we now support the ability to pass sensitive PostgreSQL Metastore credentials to the Helm application through the Kubernetes secret, --set global.configMap.data.metastore_secret_name. Further, you can also pass sensitive PostgreSQL Metastore credentials to the Helm application through the Azure Key Vault secret provider class object, --set global.vault.enabled=true --set global.vault.provider=akv --set global.vault.metastore_secret_name.
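To show how these flags might be assembled for the two options, here is a rough sketch that builds a Helm command line as a list of arguments. The --set keys are taken from the note above. The secret name value, the release name, and the chart path are hypothetical, and the sketch assumes the secret-name keys take the name of an existing secret as their value.

```python
import shlex


def metastore_secret_flags(secret_name: str, use_azure_key_vault: bool = False) -> list[str]:
    """Return the --set flags for one of the two credential options described above."""
    if use_azure_key_vault:
        # Azure Key Vault secret provider class option.
        return [
            "--set", "global.vault.enabled=true",
            "--set", "global.vault.provider=akv",
            "--set", f"global.vault.metastore_secret_name={secret_name}",
        ]
    # Kubernetes secret option.
    return ["--set", f"global.configMap.data.metastore_secret_name={secret_name}"]


# "dq" (release name), "./dq-chart" (chart path), and the secret name are placeholders.
command = [
    "helm", "upgrade", "--install", "dq", "./dq-chart",
    *metastore_secret_flags("dq-metastore-credentials", use_azure_key_vault=True),
]
print(shlex.join(command))
```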

Fixes

Connections

- You can now create a Snowflake JDBC connection with a connection URL containing either a double quote or curly bracket, or a URL encoded version of the same, for example, {"tenant":"foo","product":"bar","application":"baz"}. (ticket #148348)
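For reference, the URL-encoded form of the example value can be produced with the Python standard library, as in the short sketch below. Where the value sits within the connection URL depends on your Snowflake connection settings and is not shown here.

```python
from urllib.parse import quote, unquote

# The example value from the fix note above.
raw_value = '{"tenant":"foo","product":"bar","application":"baz"}'

# safe="" percent-encodes every reserved character, including braces, quotes, colons, and commas.
encoded_value = quote(raw_value, safe="")
print(encoded_value)

# Decoding restores the original value, so either form describes the same setting.
print(unquote(encoded_value) == raw_value)
```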

Findings

- We fixed an issue which prevented sorting in the Records column for datasets with multiple rule outputs and different row count values. (ticket #142362)

- We resolved a misalignment of Outlier column values in the latest UI. (ticket #146163)

Reports

- The Column Dimension and Dataset Dimension reports now display an error message when users who do not have ROLE_ADMIN attempt to access them. (ticket #141397)

Integration

- We improved the error handling when retrieving RunId for datasets. (tickets #145040, #146724, #148232)

Latest UI

- When a user does not have any assigned roles, an empty chip no longer displays in the Roles column on the User Management page in the Admin Console.

- We removed the Enabled and Locked columns from the AD Mapping page in the Admin Console, because they do not apply to AD users.

- A warning message now appears when you attempt to load a temp file and the Temp File Upload option is disabled on the Security Settings page in the Admin Console.

- You can now sort the Records column of the Rules tab on the Findings page.

- Outlier findings now display in the correct column.

- The section of the application containing Scorecards, List View, Assignments, and Pulse View is now called Views.

- The links on the metadata bar now appear in a new order to better reflect the progression of how and when they are used.

- You can now change the agent of a dataset during the edit and clone process.

- If schemas or tables fail to load in Explorer, an error message now appears.

DQ Security

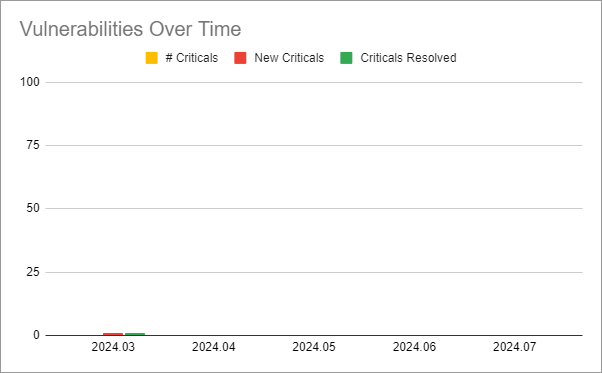

The following image shows a chart of Collibra DQ security vulnerabilities arranged by release version.

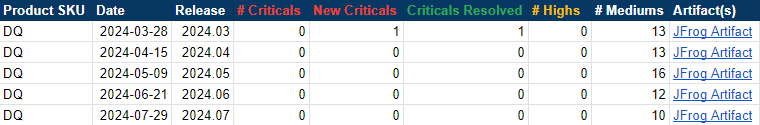

The following image shows a table of Collibra DQ security metrics arranged by release version.