Release 2024.02

Release Information

- Release date of Data Quality & Observability Classic 2024.02: February 26, 2024

- Publication dates:

- Release notes: January 22, 2024

- Documentation Center: February 4, 2024

Highlights

- Archive Break Records

Pullup

When rule breaks are stored in the PostgreSQL Metastore with link IDs assigned, you can now download a CSV file containing the details of the rule breaks and link ID columns via the Findings page.

Pushdown

To completely remove sensitive data from the PostgreSQL Metastore, you can now enable Data Preview from Source in the Archive Break Records section of the Explorer Settings. When Data Preview from Source is enabled, data preview records are not stored in the PostgreSQL Metastore.

With this option enabled, previews of break records associated with Rules, Outliers, Dupes, and Shapes breaks on the Findings page reflect the current state of the records as they appear in your data source. With this option disabled, the preview records that display in the web app are snapshots of the PostgreSQL Metastore records at runtime. This option is disabled by default.

Additionally, with Archive Break Records enabled and a link ID column assigned, you can now download a CSV or JSON file containing the details of the breaks and link ID columns via the Findings page.

Lastly, when Archive Break Records is enabled, you can now optionally enter an alternative dataset-level schema name to store source break records, instead of the schema provided in the connection.

Important

Changes for Kubernetes Deployments

As of Collibra DQ version 2023.11, we've updated the Helm Chart name from owldq to dq. For Helm-based upgrades, point to the new Helm Chart while maintaining the same release name. Please update your Helm install command by referring to the renamed parameters in the values.yaml file. It is also important to note that the pull secret has changed from owldq-pull-secret to dq-pull-secret.
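The Helm-based upgrade described above keeps the release name and only swaps the chart. A minimal bash sketch, assuming the new dq chart has been extracted locally; the function name `helm_upgrade_to_dq`, the `DRY_RUN` switch, and the example chart path are hypothetical, while `helm upgrade <release> <chart>` with `--namespace` and `-f` are standard Helm usage.

```shell
#!/usr/bin/env bash
# helm_upgrade_to_dq: hypothetical wrapper around `helm upgrade`.
# Arguments: existing release name, path to the new dq chart,
# updated values file, and Kubernetes namespace.
# Set DRY_RUN=1 to print the command instead of running it.
helm_upgrade_to_dq() {
  local release="$1" chart="$2" values="$3" namespace="$4"
  # Keep the same release name; only the chart changes (owldq -> dq).
  local -a cmd=(helm upgrade "$release" "$chart" --namespace "$namespace" -f "$values")
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Example (all values are placeholders):
# DRY_RUN=1 helm_upgrade_to_dq collibra-dq ./dq values.yaml dq
# prints: helm upgrade collibra-dq ./dq --namespace dq -f values.yaml
```

Because the pull secret name changed to dq-pull-secret, make sure a secret with that name exists in the target namespace before running the real upgrade.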

Further, after deployment, your existing remote agent name changes. For example, if your agent name is owldq-owl-agent-collibra-dq, the new agent name is dq-agent-collibra-dq. If your organization uses APIs for development, make sure you update the agent name in the configurations of your environments.
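If agent names are hard-coded in scripts or API configuration, a small helper can derive the new name. This sketch assumes the only change is the leading owldq-owl-agent- becoming dq-agent-, which is inferred from the single example above; confirm the actual agent name in your deployment after upgrading. The function name `new_agent_name` is hypothetical.

```shell
#!/usr/bin/env bash
# new_agent_name: hypothetical helper that maps a pre-2023.11 agent name
# to the post-upgrade name. Assumption (from the one documented example):
# a leading "owldq-owl-agent-" becomes "dq-agent-"; other names pass through unchanged.
new_agent_name() {
  local old="$1"
  # ${var/#pattern/replacement} replaces the pattern only at the start of the value.
  printf '%s\n' "${old/#owldq-owl-agent-/dq-agent-}"
}

new_agent_name owldq-owl-agent-collibra-dq   # prints dq-agent-collibra-dq
```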

Lastly, when you deploy using the new Helm Charts, new service (Ingress/Load Balancer) names are created. This changes the IP address of the service and requires you to reconfigure your Load Balancer with the new IP.

The following tables list the renamed values.yaml keys and the parameters whose default values have changed.

| Old Key | Renamed Key |
|---|---|
| global.version.owl | global.version.dq |
| global.image.owlweb | global.image.web |
| global.image.owlagent | global.image.agent |

| Parameter | Old Default Value | New Default Value |
|---|---|---|
| global.mainChart | owldq | dq |
| global.image.pullSecret.name | owldq-pull-secret | dq-pull-secret |
| global.web.key.secretName | owldq-ssl-secret | dq-ssl-secret |
| global.image.web.name | owl-web | dq-web |
| global.image.agent.name | owl-agent | dq-agent |
| global.image.livy.name | owl-livy | dq-livy |
| global.image.spark.name | spark | dq-spark |
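Before upgrading, it can help to scan your existing values.yaml for the old names listed in the tables above. The following is a heuristic bash sketch, not a Collibra tool: the function name `check_values_file` is hypothetical, and it assumes nested YAML, so old keys are matched by their leaf names (owl:, owlweb:, owlagent:) rather than by dotted paths.

```shell
#!/usr/bin/env bash
# check_values_file: hypothetical helper that flags owldq-era names in a
# Helm values file. Returns 0 if none are found, 1 if any are found,
# and 2 if the file cannot be read.
check_values_file() {
  local values_file="$1"
  # Old keys (as nested YAML leaf names) and old default values from the tables.
  # The spark -> dq-spark change is not detected because "spark" is too generic.
  local pattern='owldq|owlweb:|owlagent:|owl-web|owl-agent|owl-livy|^[[:space:]]*owl:'

  if [[ ! -r "$values_file" ]]; then
    echo "Cannot read $values_file" >&2
    return 2
  fi
  # grep -n prints each offending line with its line number.
  if grep -nE "$pattern" "$values_file"; then
    echo "The lines above still reference owldq-era names." >&2
    return 1
  fi
  echo "No owldq-era names found in $values_file."
}
```

Run it as `check_values_file values.yaml`; a return code of 1 means some lines still need to be updated to the renamed keys or new default values.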

Note

If your organization has a standalone deployment of Collibra DQ with SSL enabled for DQ Web, and both DQ Web and DQ Agent are on the same VM or server, we recommend upgrading directly to the Collibra DQ 2023.11.3 patch version instead of 2023.11. For more information, see the Maintenance Updates section below.

Migration Updates

Important: This section only applies if you are upgrading from a version older than Collibra DQ 2023.09 on Spark Standalone. If you have already followed these steps during a previous upgrade, you do not have to do this again.

We have migrated our code to a new repository for improved internal procedures and security. Because owl-env.sh jar files are now prefixed with dq-* instead of owl-*, if you have automation procedures in place to upgrade Collibra DQ versions, you can use the RegEx replace regex=r"owl-.*-202.*-SPARK.*\.jar|dq-.*-202.*-SPARK.*\.jar" to update the jars.
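The following bash sketch shows how upgrade automation might use this pattern to locate installed jars whether they carry the legacy owl- prefix or the new dq- prefix, and then swap them for the jars from an extracted package. The function names and directory arguments are hypothetical. The pattern is case-sensitive and expects SPARK in uppercase, so confirm it matches your actual jar names (the placeholders in the Standalone Upgrade Steps use a lowercase <spark301>).

```shell
#!/usr/bin/env bash
# Documented pattern: matches both legacy owl-* and current dq-* jars.
JAR_PATTERN='owl-.*-202.*-SPARK.*\.jar|dq-.*-202.*-SPARK.*\.jar'

# list_dq_jars DIR: print the names of .jar files in DIR that match JAR_PATTERN.
list_dq_jars() {
  local dir="$1" path name
  for path in "$dir"/*.jar; do
    [[ -e "$path" ]] || continue          # glob matched nothing
    name="${path##*/}"                    # strip the directory part
    [[ "$name" =~ $JAR_PATTERN ]] && printf '%s\n' "$name"
  done
  return 0
}

# swap_dq_jars BIN_DIR PKG_DIR BACKUP_DIR: move matching jars out of BIN_DIR
# into BACKUP_DIR, then move matching jars from PKG_DIR into BIN_DIR.
# Nothing is moved if PKG_DIR contains no matching jars.
swap_dq_jars() {
  local bin_dir="$1" pkg_dir="$2" backup_dir="$3" name
  local -a old_jars=() new_jars=()
  while IFS= read -r name; do old_jars+=("$name"); done < <(list_dq_jars "$bin_dir")
  while IFS= read -r name; do new_jars+=("$name"); done < <(list_dq_jars "$pkg_dir")
  if (( ${#new_jars[@]} == 0 )); then
    echo "No matching jars in $pkg_dir; nothing was moved." >&2
    return 1
  fi
  for name in "${old_jars[@]}"; do
    mv "$bin_dir/$name" "$backup_dir/" || return 1
  done
  for name in "${new_jars[@]}"; do
    mv "$pkg_dir/$name" "$bin_dir/" || return 1
  done
}
```

Because the pattern matches both prefixes, the same script keeps working for later upgrades where the installed jars are already dq-*.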

Additionally, please note the following:

- Standalone Upgrade Steps: When upgrading from a Collibra DQ version before 2023.09 to a Collibra DQ version 2023.09 or later on Spark Standalone, the upgrade steps have changed.

- Open a terminal session.

- Move the old jars from the owl/bin folder with the following commands:

mv owl-webapp-<oldversion>-<spark301>.jar /tmp

mv owl-agent-<oldversion>-<spark301>.jar /tmp

mv owl-core-<oldversion>-<spark301>.jar /tmp

- Copy the new jars into the owl/bin folder from the extracted package:

mv dq-webapp-<newversion>-<spark301>.jar /home/owldq/owl/bin

mv dq-agent-<newversion>-<spark301>.jar /home/owldq/owl/bin

mv dq-core-<newversion>-<spark301>.jar /home/owldq/owl/bin

- Copy the latest owlcheck and owlmanage.sh to the /opt/owl/bin directory.

Tip: You may also need to run chmod +x owlcheck owlmanage.sh to add execute permission to owlcheck and owlmanage.sh.

- Start the Collibra DQ Web application:

./owlmanage.sh start=owlweb

- Start the Collibra DQ Agent:

./owlmanage.sh start=owlagent

- Validate the number of active services:

ps -ef | grep owl

Enhancements

Capabilities

- When using the Dataset Overview, you can now click the -q button to load the contents of the dataset source query into the SQL editor.

- When using the Dataset Overview, you can now use Find and Replace to find any string in the SQL editor and replace it with another.

- When a finding is assigned to a ServiceNow incident and the ServiceNow connection has Publish Only enabled on the ServiceNow Configuration modal in the Admin screens, the finding record is pushed to ServiceNow as in previous versions, but its status is no longer linked. This means that you can adjust the status of the ServiceNow incident and the status of the finding in DQ independently. Previously, the ServiceNow incident had to be closed for the DQ finding to be resolved.

- From the Settings page in Explorer, you can now select the Core Fetch Mode option to allow SQL queries with spaces to run successfully. When selected, this option adds -corefetchmode to the command line, which enables the core to fetch the query from the load options table and override the -q option.

- When attempting to connect to NetApp or Amazon S3 endpoints in URI format with the HTTPS option selected, you can now add the following properties to the Properties tab on Amazon S3 connection templates to successfully create connections:

- For Amazon S3 endpoint URI: s3-endpoint=s3

- For NetApp: s3-endpoint=netapp

- When using the Pulse View, you can now select new options from the Show Failed dropdown menu, including Failed Job Runs and Failing Scores. Previously, the Show Failed option only displayed failed job runs.

- You can now use uppercase characters in secondary datasets and rule references.

- You can now configure arbitrary users as part of the root user group for DQ pod deployment.

- Due to security concerns, we have removed the license key from the job logs.

Platform

- We've upgraded the following drivers to their latest versions:

| Driver | Version |
|---|---|
| Databricks | 2.6.36 |
| Google BigQuery | 1.5.2.1005 |
| Dremio | 24.3.0 |
| Snowflake | 3.14.4 |

- You can now enable multi-tenancy for a notebook API.

- Standalone deployments of Collibra DQ now receive the same Spark CVE fixes that are applied to Cloud Native deployments.

Pushdown

- From the Settings page in Explorer, you can now select Date or DateTime (TimeStamp) from the Date Format dropdown menu to choose the format in which runDate and runDateEnd are substituted at runtime.

- To conserve memory and processing resources, the results query now rolls up outliers and shapes, and the link IDs no longer persist to the Metastore.

- All rules from the legacy Rule Library function correctly for Snowflake and Databricks Pushdown except for Having_Count_Greater_Than_One and Two_Decimal_Places when Link ID is enabled. See the Known Limitations section below for more information.

- You can now use cross-dataset rules that traverse across connections on the same data source.

Features in preview

Collibra AI

- SQL Assistant for Data Quality (in preview) now lets you choose from four new prompt options:

- Categorical: Writes a SQL query to detect categorical outliers.

- Dupe: Writes a SQL query to detect duplicate values.

- Record: Writes a SQL query to find values that appear on one day but not on the next day.

- Pattern: Writes a SQL query to find infrequent combinations that appear less than 5 percent of the time in the columns you specify.

DQ Integration

- The new Quality tab is now available as part of the latest UI updates for Asset pages in Collibra Platform for private preview participants, giving you at-a-glance insights into the quality of your assets. These insights include:

- Score and dimension roll-ups.

- Column, data quality rule, data quality metric, and row overviews.

- Details about the data elements of an asset.

- You can now see the Overview DQ Score on an Asset when searching via Data Marketplace. This lets you browse the data quality scores of Assets without opening their Asset Pages.

Pushdown

- Pushdown processing for SQL Server is now available for preview testing.

Fixes

Capabilities

- While editing the command line of a job containing an outlier by replacing -by HOUR with -tbin HOUR, the command line no longer reverts to its original state after profiling completes. (ticket #126764)

- When exporting the job log details to CSV, Excel, PDF, or Print from the Jobs page, the exported data now contains all rows of data. (ticket #129832)

- Additionally, when exporting the job log details to PDF from the Jobs page, the PDF file now contains the correct column headers and data. (ticket #129832)

- When working with the Alert Builder, you no longer see a “No Email Servers Configured” message despite having correctly configured SMTP settings. (ticket #127520)

DQ Integration

- When integrating data from an Athena connection, you can now use the dropdown menu in rules to map an individual column to a Rule in Collibra Platform. (tickets #125152, #126150)

Pushdown

- When Archive Break Records is enabled, statements containing backticks (`) or new lines are now properly inserted into the source system. (ticket #130122)

- For Snowflake Pushdown jobs with many outlier records either dropped or added, new memory usage limits now prevent out-of-memory issues. (ticket #126284)

Known Limitations

- When Link ID is enabled for a Snowflake or Databricks Pushdown job, Having_Count_Greater_Than_One and Two_Decimal_Places do not function properly.

- The workaround for Having_Count_Greater_Than_One is to manually add the Link ID to the group by clause in the rule query.

- The workaround for Two_Decimal_Places is to add a * to the inner query.

DQ Security

Note: If your current Spark version is 3.2.2 or older, we recommend upgrading to Spark 3.4.1 to address various critical vulnerabilities present in the Spark core library, including Log4j.
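To check whether a given Spark version falls under this recommendation (for example, the version reported in the banner of spark-submit --version), you can compare version strings. A minimal bash sketch; the function names are hypothetical, and it assumes a sort that supports -V (version sort), as GNU coreutils does.

```shell
#!/usr/bin/env bash
# version_le A B: succeed if version A <= version B.
# sort -V orders version strings numerically per component (3.2.2 < 3.2.10).
version_le() {
  [[ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" == "$1" ]]
}

# needs_spark_upgrade VERSION: succeed if VERSION is 3.2.2 or older,
# the threshold given in the note above.
needs_spark_upgrade() {
  version_le "$1" "3.2.2"
}

# Example:
# needs_spark_upgrade 3.1.1 && echo "Consider upgrading to Spark 3.4.1"
```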

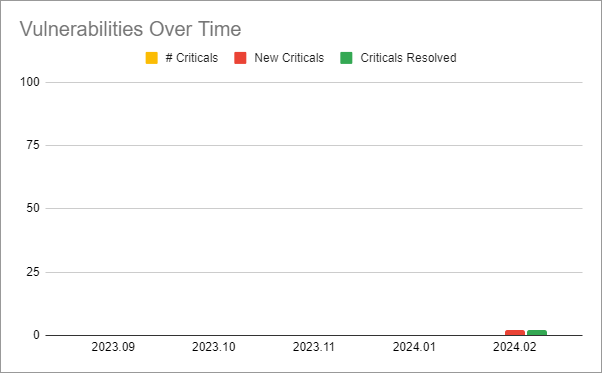

The following image shows a chart of Collibra DQ security vulnerabilities arranged by release version.

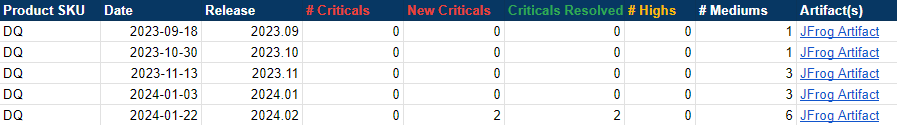

The following image shows a table of Collibra DQ security metrics arranged by release version.