Connecting to Databricks

This section contains details for Databricks connections.


General information

| Field | Description |
|---|---|
| Data source | Databricks |
| Supported versions | |
| Connection string | `jdbc:databricks://` or `jdbc:cdata:Databricks:`, depending on the driver class |
| Packaged? | |
| Certified? | |
| Supported features | |
| Estimate job | |
| Analyze data | |
| Schedule | |
| Processing capabilities | |
| Pushdown | |
| Spark agent | |
| Yarn agent | |
| Parallel JDBC | |
| Java Platform version compatibility | |
| JDK 8 | |
| JDK 11 | |
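If you manage several connections, checking the connection string prefix can catch a URL that was entered under the wrong driver class. The following is a minimal Python sketch. It assumes only the two prefixes listed in the Connection string row, and the returned labels (`databricks`, `cdata`) and the `detect_driver` name are hypothetical. The check is case-sensitive, matching the prefixes exactly as documented.

```python
# Prefixes taken from the Connection string row above. The labels are
# hypothetical names used only in this sketch.
DRIVER_PREFIXES = {
    "jdbc:databricks://": "databricks",
    "jdbc:cdata:Databricks:": "cdata",
}


def detect_driver(url: str) -> str:
    """Return the label of the driver whose prefix starts url (case-sensitive)."""
    for prefix, label in DRIVER_PREFIXES.items():
        if url.startswith(prefix):
            return label
    raise ValueError(f"Unrecognized Databricks connection string: {url!r}")


# Placeholder host; substitute your own workspace host.
print(detect_driver("jdbc:databricks://example.cloud.databricks.com:443/default"))  # databricks
```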

Minimum user permissions

To bring your Databricks data into Data Quality & Observability Classic, you need the following permissions:

- Read access on your Unity Catalog.
- ROLE_ADMIN assigned to your user in Collibra DQ.
- Access Control List (ACL) permissions for the following abilities:
  - Attach notebook to compute
  - View Spark UI
  - View compute metrics

Recommended and required connection properties


| Required | Connection Property | Type | Value |
|---|---|---|---|
| | Name | Text | The unique name of your connection. Ensure that there are no spaces in your connection name. |
| | Connection URL | String | The connection string path of your Databricks connection. Use the following format when connecting to Databricks SQL Warehouse: `jdbc:databricks://[Host]:[Port]/[Schema];[Property1]=[Value];[Property2]=[Value];...` Use the following format when connecting to Databricks Unity Catalog: `jdbc:databricks://[Host]:[Port]/[Schema];[Property1]=[Value];[Property2]=[Value];...;ConnCatalog=catalog_name` |
| | Driver Name | String | The driver class name of your Databricks connection. |
| | Port | Integer | The port number used to connect to the data source. The default port is … |
| | Limit Schemas | Option | Allows you to manage usage and restrict visibility to only the necessary schemas in the Explorer tree. See Limiting schemas to learn how to limit schemas from the Connection Management page. Note: When you include a restricted schema in the query of a DQ Job, the query scope may be overwritten when the job runs. While only the schemas you selected when you set up the connection are shown in the Explorer menu, users are not restricted from running SQL queries on any schema from the data source. |
| | Source Name | String | N/A |
| | Target Agent | Option | The Agent that submits your Spark job for processing. |
| | Auth Type | Option | The method to authenticate your connection. Note: The configuration requirements differ depending on the Auth Type you select. See Authentication for more details on available authentication types. |
| | Properties | String | The configurable driver properties for your connection. Separate multiple properties with semicolons, for example, `abc=123;test=true`. |
| | Variables | String | When you specify …, Variable Name is set to … and Value is the password. |
| | Archive Break Records | Option | Allows you to write break records directly to a Databricks database or schema. Important: Ensure that you have the necessary privileges to write break records to Databricks. |
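The Connection URL formats and the semicolon-delimited Properties field follow the same `key=value;key=value` pattern. The Python sketch below assembles a URL in the documented SQL Warehouse format and appends `ConnCatalog` for the Unity Catalog variant. It also parses a Properties value back into a dictionary. The function names, host, and example property keys are assumptions for illustration. Check the Databricks JDBC driver documentation for the properties your driver version actually accepts.

```python
from typing import Dict, Mapping, Optional


def build_databricks_url(
    host: str,
    port: int,
    schema: str,
    properties: Mapping[str, str],
    catalog: Optional[str] = None,
) -> str:
    """Assemble jdbc:databricks://[Host]:[Port]/[Schema];[Property1]=[Value];...

    When catalog is given, ConnCatalog=<catalog> is appended last
    (the Unity Catalog format). Hypothetical helper, not a product API.
    """
    props = dict(properties)  # copy so the caller's mapping is not modified
    if catalog is not None:
        props["ConnCatalog"] = catalog
    # Semicolon delimited, no spaces, e.g. abc=123;test=true
    prop_str = ";".join(f"{key}={value}" for key, value in props.items())
    base = f"jdbc:databricks://{host}:{port}/{schema}"
    return f"{base};{prop_str}" if prop_str else base


def parse_properties(text: str) -> Dict[str, str]:
    """Split a Properties value such as 'abc=123;test=true' into a dict."""
    result: Dict[str, str] = {}
    for pair in text.split(";"):
        if not pair.strip():
            continue  # tolerate empty segments, e.g. a trailing semicolon
        key, sep, value = pair.partition("=")
        if not sep:
            raise ValueError(f"Property without '=': {pair!r}")
        result[key.strip()] = value.strip()
    return result


# Placeholder host, port, and properties; substitute your own values.
print(build_databricks_url("adb-1234.azuredatabricks.net", 443, "default",
                           {"transportMode": "http", "ssl": "1"}, catalog="main"))
# jdbc:databricks://adb-1234.azuredatabricks.net:443/default;transportMode=http;ssl=1;ConnCatalog=main
```

Appending `ConnCatalog` last mirrors the order shown in the Unity Catalog format. If `properties` already contains `ConnCatalog`, the `catalog` argument overrides it.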

Authentication

Select an authentication type from the dropdown menu. The options available in the dropdown menu are the currently supported authentication types for this data source.

| Required | Field | Description |
|---|---|---|
| | Username | The username of your Databricks service account. Set Username to … |
| | Password | The password of your Databricks service account. Enter the token value that you entered in the Connection URL. Note: To successfully establish a Databricks connection, verify that your token is valid, because tokens generally expire in 90 days. |
| | Script | The path to the script file that the password manager uses to interact with and authenticate a user account. Example: `/tmp/keytab/databricks_pwd_mgr.sh` |
| | Param $1 | Optional. An additional parameter to authenticate your Databricks connection. |
| | Param $2 | Optional. An additional parameter to authenticate your Databricks connection. |
| | Param $3 | Optional. An additional parameter to authenticate your Databricks connection. |
| | Tenant ID | The tenant ID of your Microsoft account. |
| | Client ID | The client ID of your Microsoft account. |
| | Client Secret | The client secret of your Microsoft account. |
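This page does not describe how Collibra DQ invokes the password manager script. The Python sketch below illustrates one common convention so you can test a script locally before configuring it. It assumes the script receives Param $1 through $3 as positional arguments and prints the secret to standard output. It also assumes the script is a POSIX shell script, inferred from the `.sh` extension in the example path. The `fetch_password` name is hypothetical.

```python
import subprocess
from typing import Sequence


def fetch_password(script: str, params: Sequence[str] = (), timeout: float = 30.0) -> str:
    """Run a password-manager script and return its trimmed standard output.

    Assumptions (not confirmed by this page): the script takes Param $1..$3
    as positional arguments and prints the password to stdout.
    """
    if len(params) > 3:
        raise ValueError("At most three parameters ($1, $2, $3) are supported")
    completed = subprocess.run(
        ["sh", script, *params],  # run with a POSIX shell, as for a .sh file
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError on a non-zero exit status
    )
    return completed.stdout.strip()
```

For example, `fetch_password("/tmp/keytab/databricks_pwd_mgr.sh", ["my-vault-key"])` would run the script with one argument, where `my-vault-key` is a placeholder. Keep the returned value out of logs, because it is the connection secret.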

JDBC Driver Jar

If necessary, you can download the Databricks JDBC driver ZIP file from one of the following links.

- For the latest Databricks driver version, refer to the official Databricks JDBC Driver page.

- For archived Databricks driver versions, refer to the official Databricks JDBC Driver archive.

Note: Databricks JDBC driver version 2.6.27 is packaged as part of both the standalone and Kubernetes download packages.

Notebook (Supported)

- PySpark SDK

- Scala SDK

- Example

Databricks no longer supports Runtime 6.5 or 10.3. Therefore, Collibra DQ Profile 2.45 is not runnable on Databricks.

https://docs.databricks.com/release-notes/runtime/10.3ml.html

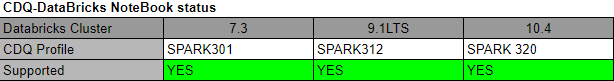

The following table shows the latest supported versions of Collibra DQ Profiles and their matching Databricks Runtimes.

Spark Submit (Not Supported)

- Spark Master URL
- Databricks Jobs API
  - REST
  - UI
- DQ-Databricks Submit

Note: While these methods are not officially supported, a reference architecture and implementation pattern are available for submitting a Databricks job.
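Spark Submit is not officially supported, so treat the following as an illustration of the Databricks Jobs API (REST) option rather than a supported integration. The Python sketch builds a request body for the Databricks Jobs API 2.1 `runs/submit` endpoint with a single `spark_jar_task` on an existing cluster, and posts it with a personal access token. The task key, cluster ID, JAR path, main class, and parameters are placeholders. This page does not document which class or arguments a Collibra DQ job would need. Verify field names against the current Databricks Jobs API reference before use.

```python
import json
import urllib.request
from typing import Sequence


def build_runs_submit_payload(
    run_name: str,
    cluster_id: str,
    jar_path: str,
    main_class: str,
    parameters: Sequence[str],
) -> dict:
    """Build a Jobs API 2.1 runs/submit body with one spark_jar_task."""
    return {
        "run_name": run_name,
        "tasks": [
            {
                "task_key": "dq_check",  # hypothetical task key
                "existing_cluster_id": cluster_id,
                "spark_jar_task": {
                    "main_class_name": main_class,
                    "parameters": list(parameters),
                },
                "libraries": [{"jar": jar_path}],
            }
        ],
    }


def submit_run(workspace_url: str, token: str, payload: dict) -> dict:
    """POST the payload to /api/2.1/jobs/runs/submit and return the JSON reply."""
    request = urllib.request.Request(
        f"{workspace_url.rstrip('/')}/api/2.1/jobs/runs/submit",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)  # a successful reply includes a run_id
```

Building the payload separately from sending it lets you inspect or log the request body (without the token) before submitting anything to the workspace.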

Limitations

- When Archive Break Records is enabled for Azure Databricks Pushdown connections authenticated over EntraID, the data preview does not display column names correctly and shows 0 columns in the metadata bar. Therefore, Archive Break Records is not supported for Azure Databricks Pushdown connections that use EntraID authentication.

- UTF-8 character encoding for Databricks JDBC connections does not function as expected in standalone deployments of Data Quality & Observability Classic versions older than 2024.06. As a workaround in these versions, follow the instructions in the Collibra Support Portal.