Pushdown processing is currently available for the data sources listed within the tabbed section below. Click a tab to see the Pushdown setup information for an available data source.

This section shows you how to set up Pushdown for a Databricks connection.

Requirements

In Collibra DQ

You have:

- Admin permissions to set up the Databricks connection template and enable Pushdown.

- Databricks JDBC driver version 2.6.32+ for Standalone deployments of Collibra DQ.

Note You do not need to install the Databricks JDBC driver for Installing Data Quality & Observability Classic on self-hosted Kubernetes deployments of Collibra DQ because it is already included in the installation package.

In Databricks

You have:

- A personal access token to establish a connection between Collibra DQ and Databricks.

- A Databricks workspace admin SQL warehouse that is set up in your Databricks workspace.

- Read access to the tables on which you plan to run DQ scans.

- A Databricks workspace admin can enable the Can Read permission in the Permission Settings section of your Databricks workspace.

Note Your SQL warehouse can use either the Hive Metastore or the Delta Lake Unity Catalog for metadata management. Note that when you run Pushdown jobs on tables within the Hive Metastore without adding Unity Catalog to the connection string, Explorer displays all tables available in your Databricks workspace. However, only the tables that are viewable from Databricks under the Hive Metastore can be accessed to run Pushdown jobs on them. We recommend adding a Unity Catalog to your connection URL to run Pushdown jobs on all tables within your Databricks workspace.

Configuring Pushdown for Databricks

The following steps show you how to set up the Databricks connection template with Pushdown enabled.

Setting up the Databricks connection template

The following steps show you how to set up the Databricks connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click the Databricks tile, then enter the required information.

- Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | String | The unique name of your Databricks connection. |

|

|

Connection URL | String | The base connection string, for example, jdbc:databricks://<account>.cloud.databricks.com/?db=DATABRICKS_SAMPLE_DATA;ConnCatalog=<catalog-name>Important |

|

|

Port | Integer | The port number to establish a connection to the datasource. The default port is 0. |

|

|

Driver Name | String | The driver class name used for your connection, for example, com.databricks.client.jdbc.Driver |

|

|

Source Name | String | Not applicable. |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. Note You do not need to select an option because Pushdown Jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. For more information on authentication methods for Databricks connections, go to the Databricks documentation. |

|

|

Pushdown | Option |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your Databricks connection. Important You must select this option for Pushdown to function. |

|

|

Archive Break Records | Option | Allows you to write break records directly to a Databricks database or schema. |

|

|

Archive Break Records Location | String | The break records archive location within Databricks. If you do not specify a location, then Collibra DQ uses PUBLIC by default. |

|

|

Driver Location | File path | A file path that designates the name and location of the driver. |

|

|

Driver Properties | String |

Optional. The configurable driver properties for your connection. |

You can now create a Pushdown job on your Databricks connection.

This section shows you how to set up Pushdown for a Snowflake connection.

Running Snowflake setup scripts

| Step | Details |

|---|---|

| 1 | Open a Snowflake worksheet. |

| 2 |

Update the following session variables: Copy

Note |

| 3 |

Run as an admin user. Copy

Note If the usage of ACCOUNTADMIN is restricted in your organization, you may use USERADMIN, SECURITYADMIN, and SYSADMIN instead. |

| 4 |

Create a user and role for Collibra DQ. Copy

Note Customers may manage users and roles according to their own policies and procedures. |

| 5 |

Create a virtual warehouse to run Collibra DQ. The virtual warehouse is referenced in the connection URL to direct DQ traffic to it. Copy

Note Customers may manage warehouses according to their own policies and procedures. |

| 6 |

Assign privileges to the Collibra DQ warehouse. Copy

Note Customers may manage warehouses according to their own policies and procedures. |

| 7 |

Assign metadata access to your Collibra DQ role. Copy

Note Customers may manage databases and schemas according to their own policies and procedures. |

| 8 |

Update the session variable Copy

|

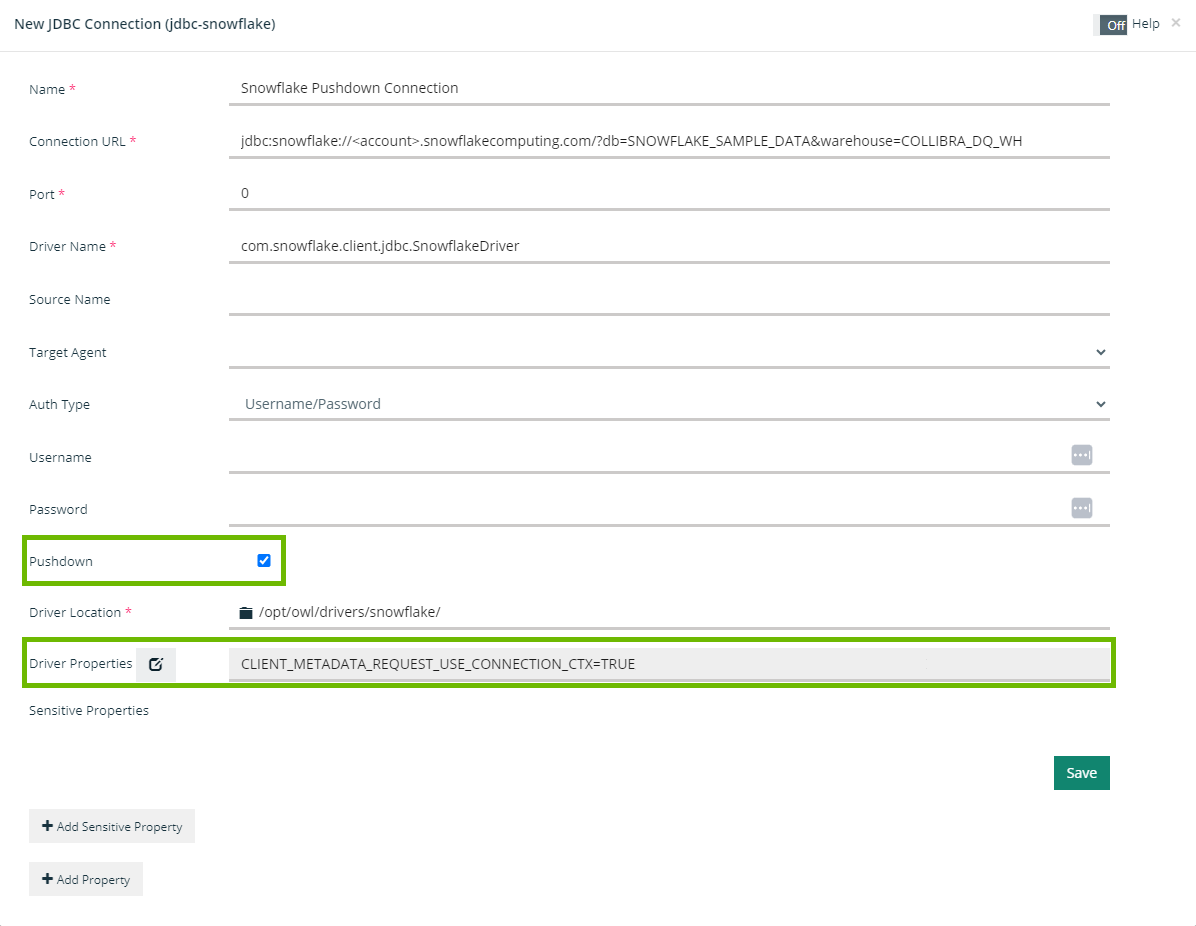

Setting up the Snowflake Connection template

The following steps show you how to set up the Snowflake connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click the Snowflake tile, then enter the required information.

- Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | Text | The unique name of your Snowflake connection. |

|

|

Connection URL | String | The base connection string, for example, jdbc:snowflake://<account>.snowflakecomputing.com/?db=SNOWFLAKE_SAMPLE_DATA&warehouse=COLLIBRA_DQ_WH |

|

|

Port | Integer | The port number to establish a connection to the datasource. |

|

|

Driver Name | Text | The driver class name used for your connection, for example, com.snowflake.client.jdbc.SnowflakeDriver |

|

|

Source Name | String | N/A |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. You do not need to select an option because Pushdown Jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. See the Snowflake documentation for more information about authentication methods for Snowflake connections. |

|

|

Pushdown | Checkbox |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your Snowflake connection. Warning You must select this option for Pushdown to function. |

|

|

Archive Break Records | Option |

Allows you to write break records directly to a Snowflake database or schema. Important Ensure that you have the necessary privileges to write break records to Snowflake. |

|

|

Driver Location | File path | A file path which designates the name and location of the driver. |

|

|

Driver Properties | String |

The configurable driver properties for your connection. Add the following driver property: CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX=TRUE |

The following image shows an example of a Snowflake connection with Pushdown selected.

Known limitations

- There is a limitation where Snowflake Pushdown jobs with queries containing REGEXP_REPLACE fail because Snowflake does not currently support arguments with collation specifications. There are two known workarounds:

- Make a clone of the table that is synced in Snowflake and has no collation set.

- Use Pullup mode instead of Pushdown to avoid this Snowflake limitation.

You can now create a Pushdown job on your Snowflake connection.

To run a Snowflake Pushdown job, you need to opt in when setting up your Snowflake connection. Select the Pushdown option in the Connection template to turn on Pushdown capabilities.

This section shows you how to set up Pushdown for a BigQuery connection.

Requirements

In Collibra DQ

- You have admin permissions (

ROLE_ADMIN) to set up the BigQuery connection template and enable Pushdown. - You have BigQuery JDBC driver version 1.3.2.1003 for Standalone deployments of Collibra DQ.

Note You do not need to install the BigQuery JDBC driver for Installing Data Quality & Observability Classic on self-hosted Kubernetes deployments of Collibra DQ because it is already included in the installation package.

In BigQuery

- You have

Viewer(roles/viewer) permissions. - You have

Readeraccess to BigQuery dataset tables. - You have

Writeraccess to materialized view datasets.

Configuring Pushdown for BigQuery

The following steps show you how to set up the BigQuery connection template with Pushdown enabled.

Setting up the BigQuery connection template

The following steps show you how to set up the BigQuery connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click the BigQuery tile, then enter the required information.

-

Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | String | The unique name of your BigQuery connection. |

|

|

Connection URL | String | The base connection string, for example, jdbc:bigquery://https://www.googleapis.com/bigquery/v2:443;OAuthType=0;ProjectId=owl-hadoop-cdh;OAuthServiceAcctEmail=<service-account-email>;TimeOut=3600 |

|

|

Port | Integer | The port number to establish a connection to the datasource. The default port is 443. |

|

|

Driver Name | String | The driver class name used for your connection, for example, com.simba.googlebigquery.jdbc42.DriverNote The |

|

|

Source Name | String | N/A |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. Note You do not need to select an option because Pushdown jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. For more information on authentication methods for BigQuery connections, go to the official BigQuery documentation. |

|

|

Pushdown | Checkbox |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your BigQuery connection. Important You must select this option for Pushdown to function. |

|

|

Archive Break Records | Option |

Allows you to write break records directly to a BigQuery database or schema. |

|

|

Archive Break Records Location | String |

The break records archive location within BigQuery. If you do not specify a location, then Collibra DQ uses Note Archive Break Records Location is only available and required when the Archive Break Records option is selected. |

|

|

Driver Location | File path | A file path that designates the name and location of the driver. For example, /opt/owl/drivers/bigquery |

|

|

Driver Properties | String |

Optional. The configurable driver properties of your connection. |

Limitations

Rule validation for BigQuery Pushdown connections is not supported. However, you can manually execute SQL queries from the BigQuery console on GCP as a possible workaround.

You can now create a Pushdown job on your BigQuery connection.

This section shows you how to set up Pushdown for an Amazon Athena connection.

Requirements

In Collibra DQ

- You have admin permissions (

ROLE_ADMIN) to set up the Athena connection template and enable Pushdown. - You have Athena JDBC driver version 2.0.35.1000 for Standalone deployments of Collibra DQ.

Note You do not need to install the Athena JDBC driver for Installing Data Quality & Observability Classic on self-hosted Kubernetes deployments of Collibra DQ because it is already included in the installation package.

In Athena

- You have

Readaccess to your Glue Catalog and S3 query result bucket location. - You have

Writeaccess to your S3 query result bucket location. - You have

Readaccess to the Athena workgroup.

Configuring Pushdown for Athena

The following steps show you how to set up the Athena connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click the Athena tile, then enter the required information.

-

Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | String | The unique name of your Athena connection. |

|

|

Connection URL | String | The base connection string, for example, jdbc:awsathena://AwsRegion=${region};S3OutputLocation=s3://{output_location};MetadataRetrievalMethod=Query |

|

|

Port | Integer | The port number to establish a connection to the datasource. The default port is 444. |

|

|

Driver Name | String | The driver class name of your connection, for example, com.simba.athena.jdbc.DriverNote The |

|

|

Source Name | String | N/A |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. Note You do not need to select an option because Pushdown jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. For more information on authentication methods for Athena connections, go to the official Athena documentation. |

|

|

Pushdown | Checkbox |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your Athena connection. Important You must select this option for Pushdown to function. |

|

|

Driver Location | File path | A file path that designates the name and location of the driver. For example, /opt/owl/drivers/athena/ |

|

|

Driver Properties | String |

Optional. The configurable driver properties of your connection. |

You can now create a Pushdown job on your Athena connection.

Known limitations

- Only the AdaptiveRules layer is available from the Add Layers step.

This section shows you how to set up Pushdown for a Redshift connection.

Requirements

In Collibra DQ

- You have admin permissions (

ROLE_ADMIN) to set up the Redshift connection template and enable Pushdown. - You have Redshift JDBC driver version 2.1.0.9 for Standalone deployments of Collibra DQ.

Note You do not need to install the Redshift JDBC driver for Installing Data Quality & Observability Classic on self-hosted Kubernetes deployments of Collibra DQ because it is already included in the installation package.

In Redshift

- You have

READaccess on your Redshift database tables. - You have

USAGEaccess on your Redshift schema.

Configuring Pushdown for Redshift

The following steps show you how to set up the Redshift connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click the Redshift tile, then enter the required information.

-

Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | String | The unique name of your Redshift connection. |

|

|

Connection URL | String | The base connection string, for example, jdbc:redshift://<host>:5439/dev |

|

|

Port | Integer | The port number to establish a connection to the datasource. The default port is 5439. |

|

|

Driver Name | String | The driver class name used for your connection, for example, com.amazon.redshift.jdbc42.Driver |

|

|

Source Name | String | N/A |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. Note You do not need to select an option because Pushdown jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. For more information on authentication methods for Redshift connections, go to the official Redshift documentation. |

|

|

Pushdown | Checkbox |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your Redshift connection. Important You must select this option for Pushdown to function. |

|

|

Driver Location | File path | A file path that designates the name and location of the driver. For example, /opt/owl/drivers/redshift/ |

|

|

Driver Properties | String |

Optional. The configurable driver properties of your connection. |

You can now create a Pushdown job on your Redshift connection.

Known limitations

- Only the AdaptiveRules layer is available from the Add Layers step.

Requirements

In Collibra DQ

- You have admin permissions (

ROLE_ADMIN) to set up the Trino connection template and enable Pushdown. - You have Trino JDBC driver version 405.0 for Standalone deployments of Collibra DQ.

Note You do not need to install the Trino JDBC driver for Installing Data Quality & Observability Classic on self-hosted Kubernetes deployments of Collibra DQ because it is already included in the installation package.

In Trino

- You have Read access on your Trino database tables.

Configuring Pushdown for Trino

The following steps show you how to set up the Trino connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click the Trino tile, then enter the required information.

-

Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | String | The unique name of your Trino connection. |

|

|

Connection URL | String | The base connection string, for example, jdbc:trino://${host}:443/<catalog>/<schema>&source=jdbc:presto |

|

|

Port | Integer | The port number to establish a connection to the datasource. The default port is 8080. |

|

|

Driver Name | String | The driver class name used for your connection, for example, io.trino.jdbc.TrinoDriver |

|

|

Source Name | String | N/A |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. Note You do not need to select an option because Pushdown jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. For more information on authentication methods for Trino connections, go to the official Trino documentation. |

|

|

Pushdown | Checkbox |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your Trino connection. Important You must select this option for Pushdown to function. |

|

|

Archive Break Records | Option |

Allows you to write break records directly to a Trino database or schema. |

|

|

Archive Break Records Location | String |

The break records archive location within Trino. If you do not specify a location, then Collibra DQ uses Note Archive Break Records Location is only available and required when the Archive Break Records option is selected. |

|

|

Driver Location | File path | A file path that designates the name and location of the driver. For example, /opt/owl/drivers/trino |

|

|

Driver Properties | String |

Optional. The configurable driver properties of your connection. |

Turning on Pushdown for Trino

To ensure that Pushdown for Trino is appropriately configured, verify that PUSHDOWN_JOB_ENABLED is set to TRUE on the Application Config page of the Admin Console.

- On the main menu, click

, and then click Admin Console.

, and then click Admin Console. - Click Application Config under the Configuration Settings section.

- If PUSHDOWN_JOB_ENABLED is set to

FALSE, click the to the right of the Value column.

to the right of the Value column. - In the Edit Configuration modal, enter

TRUEinto the Value input field. - Click Save.

Tip If PUSHDOWN_JOB_ENABLED is already set to TRUE, you can skip this section.

You can now create a Pushdown job on your Trino connection.

Requirements

In Collibra DQ

- You have admin permissions (

ROLE_ADMIN) to set up the SQL Server connection template and enable Pushdown. - You have the SQL Server JDBC driver for Standalone deployments of Collibra DQ.

Note You do not need to install the SQL Server JDBC driver for Installing Data Quality & Observability Classic on self-hosted Kubernetes deployments of Collibra DQ because it is already included in the installation package.

In SQL Server

- You have Read access on your SQL Server database tables.

Configuring Pushdown for SQL Server

The following steps show you how to set up the SQL Server connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click the SQL Server tile, then enter the required information.

-

Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | String | The unique name of your SQL Server connection. |

|

|

Connection URL | String | The base connection string, for example, jdbc:sqlserver://${host}:1433/databaseName=${dbName};encrypt=true;trustServerCertificate=true; |

|

|

Port | Integer | The port number to establish a connection to the datasource. The default port is 1433. |

|

|

Driver Name | String | The driver class name used for your connection, for example, com.microsoft.sqlserver.jdbc.SQLServerDriver |

|

|

Source Name | String | N/A |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. Note You do not need to select an option because Pushdown jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. For more information on authentication methods for SQL Server connections, go to the official SQL Server documentation. |

|

|

Pushdown | Checkbox |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your SQL Server connection. Important You must select this option for Pushdown to function. |

|

|

Archive Break Records | Option |

Allows you to write break records directly to a SQL Server database or schema. Note Only rule break records are eligible for source archival. |

|

|

Archive Break Records Location | String |

The break records archive location within SQL Server. If you do not specify a location, then Collibra DQ uses Note Archive Break Records Location is only available and required when the Archive Break Records option is selected. |

|

|

Driver Location | File path | A file path that designates the name and location of the driver. For example, /opt/owl/drivers/mssql/ |

|

|

Driver Properties | String |

Optional. The configurable driver properties of your connection. |

You can now create a Pushdown job on your SQL Server connection.

Known limitations

- As of the Collibra DQ 2024.02 release, only AdaptiveRules are available from the Add Layers step.

- Only native Freeform SQL (PQ) is supported for SQLF rules, meaning that queries in the format of

@dataset(TLQ) or@job-name(LQ) are not supported. Only queries that use native (PQ) are supported. - The use of the 'TOP(limit)' clause for the source is not supported when Link ID is enabled for SQLF rules. This is because the 'TOP N' specification should be placed at the beginning of the query. When Link ID is enabled for rules, it is added to the beginning of the query, causing the Insert query to fail.

Requirements

In Collibra DQ

- You have admin permissions (

ROLE_ADMIN) to set up the SAP HANA connection template and enable Pushdown. - You have the SAP HANA JDBC driver for Standalone deployments of Collibra DQ.

Note You do not need to install the SAP HANA JDBC driver for Installing Data Quality & Observability Classic on self-hosted Kubernetes deployments of Collibra DQ because it is already included in the installation package.

In SAP HANA

- You have Read access on the SAP HANA database tables on which you run data quality.

Configuring Pushdown for SAP HANA

The following steps show you how to set up the SAP HANA connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click Add Connection, then click SAP HANA.

- Enter the required information.

- SAP<SID>

- SAPSR3

- SAPBAP<#>

- SAPHANADB

-

Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | String | The unique name of your SAP HANA connection. |

|

|

Connection URL | String | The base connection string, for example, jdbc:sap://${host}:${port}/ |

|

|

Port | Integer | The port number to establish a connection to the datasource. The default port is 39015. |

|

|

Driver Name | String | The driver class name used for your connection, for example, com.sap.db.jdbc.Driver |

|

|

Source Name | String | N/A |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. Note You do not need to select an option because Pushdown jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. For more information on authentication methods for SAP HANA connections, go to the official SAP HANAdocumentation. |

|

|

Pushdown | Checkbox |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your SAP HANA connection. Important You must select this option for Pushdown to function. |

|

|

Archive Break Records | Option |

Allows you to write break records directly to a SAP HANA database or schema. |

|

|

Archive Break Records Location | String |

The break records archive location within SAP HANA. If you do not specify a location, then Collibra DQ uses Your default output schema may be one of the following: Note Archive Break Records Location is only available and required when the Archive Break Records option is selected. |

|

|

Driver Location | File path | A file path that designates the name and location of the driver. For example, /opt/owl/drivers/sap/ |

|

|

Driver Properties | String |

Optional. The configurable driver properties of your connection. |

The following table contains an overview of the data quality features currently available for Pushdown for SAP HANA.

You can now create a Pushdown job on your SAP HANA connection.

This section shows you how to set up Pushdown for an Oracle connection.

Prerequisites

- You have admin permissions (

ROLE_ADMIN) to set up the Oracle connection template and enable Pushdown. - You have read access on Oracle database tables.

- The Kerberos user has read permissions on Oracle tables (if using Kerberos authentication).

Configuring Pushdown for Oracle

The following steps show you how to set up the Oracle connection template with Pushdown enabled.

Setting up the Oracle connection template

The following steps show you how to set up the Oracle connection template with Pushdown enabled.

- On the main menu, click

, and then click Connections.

, and then click Connections. - Click the Oracle tile, then enter the required information.

-

Click Save.

| Required | Property | Type | Description |

|---|---|---|---|

|

|

Name | String | The unique name of your Oracle connection. |

|

|

Connection URL | String |

The connection string path of your Oracle connection. When referring to the example below, replace the Example Using SID Example Using database service name |

|

|

Port | Integer |

The port number to establish a connection to the datasource. The default port is |

|

|

Driver Name | String |

The driver class name of your connection.

|

|

|

Source Name | String | N/A |

|

|

Target Agent | Option |

The Agent used to submit your DQ Job. Note You do not need to select an option because Pushdown jobs do not require an Agent to submit them to the data warehouse. |

|

|

Auth Type | Option | The method to authenticate your connection. For more information on authentication methods for Oracle connections, go to the official Oracle documentation. |

|

|

Pushdown | Checkbox |

Switches Pushdown processing on or off. Select the checkbox option to turn on Pushdown for your Oracle connection. Important You must select this option for Pushdown to function. |

|

|

Archive Break Records | Option |

Allows you to write break records directly to a Oracle database or schema. |

|

|

Archive Break Records Location | String |

The break records archive location within Oracle. If you do not specify a location, then Collibra DQ uses Note Archive Break Records Location is only available and required when the Archive Break Records option is selected. |

|

|

Driver Location | File path | A file path that designates the name and location of the driver. For example, /opt/owl/drivers/oracle |

|

|

Driver Properties | String |

Optional. The configurable driver properties of your connection. |

You can now create a Pushdown job on your Oracle connection.