1 Where do I find the Job ID and Request ID for AWS troubleshooting?

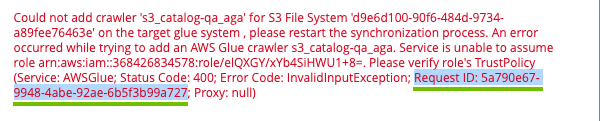

The S3 synchronization results dialog box includes the Job ID. When an S3 synchronization fails, the results include a detailed error message with the Request ID.

Tip: Share the Request ID with AWS support to understand why the specific request is failing in AWS. This is typically useful to troubleshoot IAM permission issues in your AWS environment.
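If you need to pull the Request ID out of a long error message before sharing it, a small helper can do it. The sketch below is only an illustration: it assumes the message contains a `Request ID: <value>` field inside the parenthesized details, and both the function name and the sample message (including the Request ID value) are hypothetical.

```python
import re
from typing import Optional

# Assumption: the error message carries a "Request ID: <value>" field,
# terminated by ";", ")" or whitespace.
_REQUEST_ID = re.compile(r"Request ID:\s*([^;)\s]+)")


def extract_request_id(message: str) -> Optional[str]:
    """Return the first Request ID found in an error message, or None."""
    match = _REQUEST_ID.search(message)
    return match.group(1) if match else None


# Hypothetical message; the Request ID value is made up for illustration.
sample = (
    "Access Denied (Service: Amazon S3; Status Code: 403; "
    "Error Code: AccessDenied; Request ID: EXAMPLE1234567890)"
)
print(extract_request_id(sample))
```

If the function returns None, the message did not contain a Request ID in the assumed format, and you will need to copy it manually from the dialog box.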

2 Message Could not add/change/delete crawler '<crawler name>' for S3 File System '<asset name>'.

You can find more information about the actual problem in the Jobserver logs. The problem is usually described in the AWS SDK error message.

| Cause | Description | Solution |
|---|---|---|
| Incorrect or too limited IAM permissions for the programmatic user defined in the connection details. | While connecting, the verification process only checks that the user can log in, but it doesn't verify permissions. Any further operation may therefore fail if the IAM permissions are wrong or too limited. This also applies to the AWS regions. Collibra checks the credentials in the AWS SDK default region. Because the IAM service is global, that is sufficient in most cases. However, it is possible to put constraints on specific regions, including the AWS SDK default region. | Edit the IAM permissions or connect to Amazon S3 with another IAM user or role. |
| Maximum number of crawlers in AWS Glue reached. | When you synchronize Amazon S3, Collibra creates crawlers in AWS Glue and executes them. After synchronization, they are deleted. By default, each AWS Glue account can only store 1,000 crawlers. | For more information, see the AWS Glue documentation. |
| Bucket does not exist. | The bucket name contains a typo, so the bucket cannot be found. | Edit the crawler's include path to correct the bucket name. |
| No permission to access the bucket in Amazon S3. | This includes buckets that exist but belong to different accounts. | Request permission or delete the relevant crawler. |
| Unsupported AWS region. | S3 ingestion in Collibra Data Catalog relies on AWS Glue to analyze S3 buckets. However, AWS Glue is currently not supported in all AWS regions, which may cause crawler creation to fail. The log will display an UnknownHostException. | This is a built-in limitation of AWS Glue. For the list of supported regions for AWS Glue, see the AWS documentation. |
| Incorrect AWS region. | AWS regions can be restricted so that S3 ingestion and synchronization in Collibra Data Catalog can only be performed in the regions your AWS account has access to. Example You will get an error message when: | This is a security measure. The AWS regions to which Collibra Data Catalog is allowed to connect can be restricted via Collibra Console. |

[2018-08-03 13:50:38,347] INFO .agent.SprayRoutesProvider [] [] - output: (500 Internal Server Error,{"messageCode":"s3_bucketDoesntExist","messageArguments":["qsdgqsbqfdscs"]})
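When you scan the Jobserver logs for lines like the one above, it can help to extract the message code and its arguments programmatically. The following is a minimal sketch that assumes the line ends with `output: (<HTTP status>,<JSON object>)`, as in the sample. The function name and the added `httpStatus` key are hypothetical, not part of any Collibra tooling.

```python
import json
import re
from typing import Optional

# Assumption: the log line ends with "output: (<status>,<JSON object>)".
_OUTPUT = re.compile(r"output: \((?P<status>[^,]+),(?P<body>\{.*\})\)\s*$")


def parse_output_payload(log_line: str) -> Optional[dict]:
    """Return the JSON payload of an 'output:' log line, or None if absent."""
    match = _OUTPUT.search(log_line)
    if match is None:
        return None
    payload = json.loads(match.group("body"))
    # Keep the HTTP status next to the parsed fields for convenience.
    payload["httpStatus"] = match.group("status")
    return payload


line = (
    "[2018-08-03 13:50:38,347] INFO .agent.SprayRoutesProvider [] [] - output: "
    '(500 Internal Server Error,{"messageCode":"s3_bucketDoesntExist",'
    '"messageArguments":["qsdgqsbqfdscs"]})'
)
info = parse_output_payload(line)
print(info["messageCode"], info["messageArguments"])
```

In this sample, the message code points to a bucket that does not exist, and the message argument is the bucket name that was used.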

Message Value not allowed. The connection details of the S3 File System are incorrect.

| Cause | Description | Solution |
|---|---|---|
| The credentials for the AWS user are incorrect. | This message appears when the credentials for the AWS user are incorrect. The access key ID and/or secret access key are wrong. | Make sure that the access key ID and secret access key do not contain trailing spaces. |
| Your AWS account doesn't have access to an AWS region where the S3 bucket is located. | This message appears when you add an AWS region in Collibra Console to which your AWS account doesn't have access and then try to ingest an S3 file system. | Make sure that you have access to the AWS region where the S3 bucket is located. |

3 Glue Crawler fails with an Internal Service Exception error message

This is an AWS Glue crawler error. For possible steps to resolve the issue, see the AWS documentation.

4 Glue Crawler failed and AWS logs show an Internal server error message

When checking the logs in Jobserver you may notice that one or more crawlers failed in AWS Glue. In that case, you need to open the AWS console and check the crawlers list in AWS Glue. Because crawlers are deleted from AWS Glue after ingestion, you will have to manually re-create the crawlers and run them again before proceeding. The failing crawler has a red exclamation mark and the Failed status. You can check the logs for more information.

Sometimes, the logged message just shows an "Internal server error". The only way to get more information is to contact the Amazon helpdesk. However, we noticed that such errors often happen in the following situations:

- The number of files to crawl is very large (> 100k).

- There is a series of very small files to crawl (>100).

In both cases, the problem is caused by AWS Glue. All Amazon services are protected against DDoS attacks and they throw throttling exceptions when too many operations are done in a specific time frame. Unfortunately this limit also applies between Amazon services. In this specific case, the AWS Glue database service is denying requests from the AWS Glue crawler service, which causes the crawling process to abort. Because this is an inherent Amazon limitation, Collibra cannot fix this problem. A possible work-around is to use more S3 File System assets with more restricted include paths.
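To decide how to split one S3 File System asset into several assets with narrower include paths, you can count objects per key prefix. The sketch below is an illustration only: it assumes you already have a list of object keys (for example from an inventory export), the function names are hypothetical, and the 100,000 default simply mirrors the "> 100k" observation above rather than a documented AWS limit.

```python
from collections import Counter
from typing import Dict, Iterable, List


def count_by_prefix(keys: Iterable[str], depth: int = 1) -> Dict[str, int]:
    """Count objects per prefix made of the first `depth` path segments."""
    counts: Counter = Counter()
    for key in keys:
        parts = key.split("/")
        if len(parts) > depth:
            counts["/".join(parts[:depth]) + "/"] += 1
        else:
            # The object sits above the chosen depth (for example, bucket root).
            counts[""] += 1
    return dict(counts)


def oversized_prefixes(counts: Dict[str, int], limit: int = 100_000) -> List[str]:
    """Return prefixes holding more objects than `limit` (assumed threshold)."""
    return sorted(prefix for prefix, n in counts.items() if n > limit)


# Hypothetical keys for illustration.
keys = ["sales/2020/a.csv", "sales/2021/b.csv", "hr/c.csv", "readme.txt"]
counts = count_by_prefix(keys)
print(counts)
print(oversized_prefixes(counts, limit=1))
```

Prefixes reported as oversized are candidates for a deeper split, for example by calling `count_by_prefix` again with a larger `depth` and using the resulting prefixes as include paths.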

5 Error message The AWS Access Key Id you provided does not exist in our records though credentials are accepted

A user may be able to store S3 credentials in the S3 File System asset, but still be unable to synchronize Amazon S3 or to create, edit or delete crawlers. The following message appears:

The AWS Access Key Id you provided does not exist in our records. (Service: Amazon S3; Status Code: 403; Error Code: InvalidAccessKeyId; ...

This may be caused by insufficient permissions on AWS Glue services. For more information, see About the Amazon S3 file system integration.

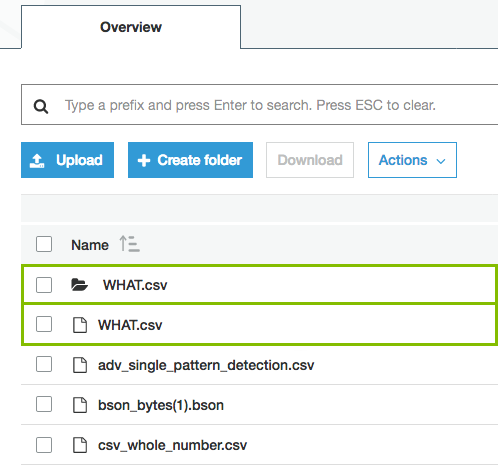

6 Synchronization fails when a directory contains a file and a directory with the same name (known issue)

In Amazon S3, you can use periods (.) in the name of a directory. As a consequence, you can give the directory a name that is identical to a file name, for example, Collibra.txt. However, if this happens, ingestion fails. This is a known issue.
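Before synchronizing, you may want to check whether a bucket contains such a name clash. The following sketch assumes you have a list of object keys and treats every proper path prefix of a key as a directory. The function name and the sample keys are hypothetical.

```python
from typing import Iterable, List


def find_name_collisions(keys: Iterable[str]) -> List[str]:
    """Return keys that are both a file and a directory prefix of other keys."""
    keys = list(keys)
    # Keys ending with "/" are folder markers, not files.
    files = {key for key in keys if not key.endswith("/")}
    directories = set()
    for key in keys:
        parts = key.split("/")
        # Every proper prefix of a key acts as a directory.
        for i in range(1, len(parts)):
            directories.add("/".join(parts[:i]))
    return sorted(files & directories)


# Hypothetical keys: "docs/Collibra.txt" is a file and also a directory.
sample_keys = [
    "docs/Collibra.txt",
    "docs/Collibra.txt/data.csv",
    "docs/other.csv",
]
print(find_name_collisions(sample_keys))
```

Any key in the result identifies a file whose name is also used as a directory in the same location, which is the situation described above.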

7 Synchronizing an S3 File System fails with a relationMaxLimitReachedTarget message in the logs

This error comes from a broken relation in the assets tree. An asset created by S3 ingestion gets more than one parent asset. For example, a File asset has more than one parent directory or a Directory asset has more than one parent directory.

This typically happens when a user moves S3 assets to a different domain and then starts a synchronization. In that case, the ingestion jobs try to recreate the missing assets in the original domain while old relations are still present. This can lead to an inconsistency in the relation tree.

We strongly recommend that you never move assets created by S3 ingestion to another domain.

For example, you work in a domain called Amazon, which contains a Directory asset called Main. The Main Directory asset has a child asset of the File type, called Names.

You move the Main Directory asset to another domain called Local.

When you synchronize again, Data Catalog first recreates the Main Directory asset in the Amazon domain and then it updates the Names File asset.

As a consequence, the Names File has 2 parent directories, which is a relation cardinality error.
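The cardinality problem in this example can be expressed as a simple check over child-parent pairs. This is only a sketch of the idea, not how Collibra stores or validates relations: the function name is hypothetical, and the `domain/asset` notation for parents is an assumption made for readability.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def assets_with_multiple_parents(
    relations: Iterable[Tuple[str, str]]
) -> Dict[str, List[str]]:
    """Map each child asset that has more than one parent to its parents."""
    parents = defaultdict(set)
    for child, parent in relations:
        parents[child].add(parent)
    return {c: sorted(p) for c, p in parents.items() if len(p) > 1}


# Relations from the example above, written as (child, "domain/parent").
relations = [
    ("Names", "Local/Main"),   # relation that survived the move
    ("Names", "Amazon/Main"),  # relation created by the new synchronization
]
print(assets_with_multiple_parents(relations))
```

An empty result means every asset has at most one parent, which is the state the ingestion expects.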

8 Synchronizing Amazon S3 fails because you don't have the necessary permissions

In Collibra Platform 2020.11 and newer and Collibra Data Governance Center 5.7.7 and newer, Collibra checks the permissions of the AWS user when you synchronize Amazon S3. Synchronizing Amazon S3 fails if the AWS user does not have the necessary permissions.

A dialog box shows the following message:

Could not get/delete Glue database for S3 File System <name-of-Amazon-S3-file-system>, please make sure you have all the necessary permissions.

Solution: Give the AWS programmatic user the permission policy AWSGlueServiceRole. This is an AWS managed policy. If you don't want to use this out-of-the-box AWS managed policy, you will need to work with AWS support to define a more restrictive policy. Make sure to include glue:BatchGetCrawlers and glue:ListCrawlers.
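If you define a custom policy, a quick local check can confirm that the two actions named above are covered. The sketch below is deliberately simplified and makes several assumptions: it only looks at `Allow` statements, ignores `Deny` statements, resources and conditions, and checks only the two actions mentioned here, not the full set of permissions the integration needs. The function name and the sample policy are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import List, Sequence

# Only the two actions named in the text; not a complete permission list.
REQUIRED_ACTIONS = ("glue:BatchGetCrawlers", "glue:ListCrawlers")


def missing_actions(policy: dict, required: Sequence[str] = REQUIRED_ACTIONS) -> List[str]:
    """Return required actions not matched by any Allow statement."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    allowed: List[str] = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        allowed.extend([actions] if isinstance(actions, str) else actions)
    # Action names are compared case-insensitively; "*" acts as a wildcard.
    return [
        action for action in required
        if not any(fnmatchcase(action.lower(), a.lower()) for a in allowed)
    ]


# Hypothetical policy document for illustration.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["glue:Get*", "glue:ListCrawlers"], "Resource": "*"}
    ],
}
print(missing_actions(policy))
```

An empty list only means that these two actions are allowed somewhere in the policy. It does not prove that the policy as a whole is sufficient.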

9 No assets are created after the synchronization job is completed

This is usually because AWS Glue didn't find any suitable files to process. A typical problem is a typo in the include path or exclude patterns. AWS Glue does not fail when an include path points to a directory that doesn't exist. Also, always verify there are no leading or trailing spaces in those fields.
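Leading and trailing spaces are hard to spot in a form field. A small check such as the one below can flag them before you save the crawler. The function name, the field labels and the sample values are hypothetical.

```python
from typing import Dict, List


def fields_with_stray_whitespace(fields: Dict[str, str]) -> List[str]:
    """Return the names of fields whose value has leading or trailing whitespace."""
    return sorted(name for name, value in fields.items() if value != value.strip())


# Hypothetical crawler fields; note the trailing space in the include path.
crawler_fields = {
    "include path": "s3://my-bucket/data/ ",
    "exclude pattern": "**.tmp",
}
print(fields_with_stray_whitespace(crawler_fields))
```

The check does not detect typos inside the path itself, so you still need to compare the include path with the actual location in Amazon S3.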

10 Only part of the expected files or file groups are integrated

Jobs in Collibra can only succeed or fail. It's possible that some of the crawlers are correctly defined while others contain errors, such as a typo in an include path or an unsupported AWS region. In that case, the activity is marked as successful, though part of it didn't succeed. Currently, the only way to confirm this is to read the log files of Collibra and Jobserver or Edge.

Note: When you start synchronization, the crawlers are created in AWS Glue. Once the crawlers are created, they are executed. If Collibra cannot create one or more crawlers, synchronization fails immediately. If the crawlers are created successfully, but fail later, synchronization only fails if all crawlers fail.
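The success and failure rules in this note can be summarized as a small decision function. This is a sketch of the described behavior, not Collibra's implementation: the function and parameter names are hypothetical, and it assumes at least one crawler is defined.

```python
from typing import Sequence


def synchronization_succeeds(created: Sequence[bool], ran_ok: Sequence[bool]) -> bool:
    """Apply the rules from the note to per-crawler creation and run results."""
    # If any crawler cannot be created, synchronization fails immediately.
    if not all(created):
        return False
    # After creation, synchronization fails only if every crawler run failed.
    return any(ran_ok)


# Two crawlers created; one run fails, yet the job is reported as successful.
print(synchronization_succeeds([True, True], [True, False]))
```

This is why a job that is marked as successful can still have skipped part of the expected files.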

11 Partial ingestion or update of assets

It is possible to store a very large number of files in S3 buckets, hence leading to a large number of assets, attributes and relations to ingest into Data Catalog. To optimize memory and speed, the ingestion process is not transactional as a whole. It works with small transactional batches. If ingestion fails and aborts after some batches are already executed, it is possible that the ingested data is incomplete (if it is the first synchronization) or only partly updated (if it is not the first synchronization). In this case, it's advised to fix the problem and resynchronize as soon as possible.

12 Some of the folders and files in Amazon S3 are not visible in Collibra

You may notice that the content of your Amazon S3 does not always match the content in Collibra. Some folders from Amazon S3 may not appear in Collibra and some files are merged or split into different assets. This is not a bug in Collibra. When you synchronize Amazon S3, you create and execute crawlers in AWS Glue. Those crawlers create a table with metadata. That table is ingested in Collibra and is the basis for the relevant assets.

However, the crawlers in AWS Glue have some specific behavior to deal with partitioned tables. When the majority of schemas at a folder level are similar, the AWS Glue crawler creates partitions of a table instead of separate tables. Based on that information, the assets in Collibra are created.

See the AWS Glue documentation for more information about folders and tables in Amazon S3 and what happens when a crawler runs.

13 JSON ingestion shows partial value in technical data type attributes (known issue)

For security reasons, all values that contain information between < and > characters are automatically trimmed by Collibra. However, if JSON is ingested by AWS Glue, the technical data type attribute contains those characters to represent the JSON structure. As a consequence, the value is trimmed and thus invalid. In future releases of Collibra, several attribute types will be changed to the plain text kind to avoid this issue.

14 The file size or other property is not filled in for file xxx.yyy

AWS Glue only provides the file size for known file types, called "classifiers" in the AWS Glue terminology. Files that are classified as Unknown are registered but won't have any property associated. For the list of built-in classifiers, see the AWS Glue documentation.

15 The table name has a strange hash-code at the end

AWS Glue appends a hash code to differentiate two files that have the same name but are located in different directories, for example, csv_boolean_csv_fe8de80c6f9a2b31463801aa2778a427. This name, including the hash code, is transferred to Data Catalog as is.
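If you need the base name without the hash code, for example for reporting, you can split it off. The sketch below assumes the suffix is always an underscore followed by 32 lowercase hexadecimal characters, as in the example above. That format is inferred from this single example and is not a documented guarantee. The function name is hypothetical.

```python
import re
from typing import Optional, Tuple

# Assumption: the hash code is "_" plus 32 lowercase hex characters at the end.
_HASH_SUFFIX = re.compile(r"^(?P<name>.+)_(?P<hash>[0-9a-f]{32})$")


def split_table_name(table_name: str) -> Tuple[str, Optional[str]]:
    """Split a table name into (base name, hash code); hash is None if absent."""
    match = _HASH_SUFFIX.match(table_name)
    if match is None:
        return table_name, None
    return match.group("name"), match.group("hash")


print(split_table_name("csv_boolean_csv_fe8de80c6f9a2b31463801aa2778a427"))
```

Names without a matching suffix are returned unchanged, so the helper is safe to apply to all table names.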

16 A file is wrongly considered a File Group

AWS Glue preferably considers a directory as a data set when possible. This leads to a File Group being created in Data Catalog. There are multiple cases where it considers (possibly wrongly) one or more files as a File Group. Unfortunately, those rules are not clearly defined in AWS Glue documentation. Collibra noticed that AWS Glue considers a directory as a data set in the following cases:

- A directory only contains one file that belongs to a known classifier (file type).

- All files contained in a directory (including sub-directories) expose a similar schema (for example, all CSV files with columns of text type).

If you use Jobserver, experiment with include paths and exclude patterns of the crawlers.

For example, if a crawler wrongly takes a directory with subdirectories as a single File Group, the official work-around is to add crawlers with the subdirectories as include paths. Unfortunately, this work-around requires a lot of manual work and is limited by the maximum number of crawlers in AWS Glue (25 by default, but this can be expanded on request).

If you are using Edge, check the solution in the troubleshooting item File Groups get the status Missing from Source after the S3 synchronization via Edge.

17 File Groups get the status Missing from Source after the S3 synchronization via Edge

File Group assets can receive the status "missing from source" if the behavior of the AWS crawler is not consistent, meaning AWS classifies files as File Group one day and classifies them as File on another day.

If this happens, File Group assets are created during the first synchronization but no longer exist after the second synchronization, resulting in the status "Missing from source".

Solution:

If you are using Edge, you can add the custom parameter file-group-as-file to your S3 Edge capability. With this custom parameter, the S3 synchronization always ingests File Groups as File assets. The custom parameter is:

- Name: file-group-as-file

- Value: true

18 Problem setting up Lake Formation and S3 synchronization via Edge

You aren't able to see all S3 buckets when choosing a storage location for which to review, grant or revoke user permissions for Lake Formation. For more details, go to Prepare S3 file system for Edge.

Solution:

Provide additional cross-region sharing on the AWS side. For more information, go to the Lake Formation documentation.

19 Message Resource does not exist or requester is not authorized to access requested permissions when setting up Lake Formation via Edge

When you're adding access permissions for specific storage locations, you receive the following message: "Resource does not exist or requester is not authorized to access requested permissions".

Solution:

Go to AWS Lake Formation → Administration → Administrative roles and tasks and add the IAM user as Data Lake administrator. For more details, go to Prepare S3 file system for Edge.