Release 2024.06

Release Information

- Release date of Data Quality & Observability Classic 2024.06: July 1, 2024

- Publication dates:

- Release notes: June 6, 2024

- Documentation Center: June 14, 2024

Highlights

Important

In the upcoming Data Quality & Observability Classic 2024.07 (July 2024) release, the classic UI will no longer be available.

- Integration

- Users without a Data Quality & Observability Classic license can now use the Quality tab on asset pages in the latest UI of Collibra Platform. Before Data Quality & Observability Classic 2024.06, unless you created data quality rules in Collibra Platform using the Data Quality & Observability Classic integration, the Quality tab would not populate and you could not aggregate data quality across any assets.

Note To enable this Collibra Platform functionality, contact a Collibra Customer Success Manager or open a support ticket.

Additionally, we improved our security standards by adding support for OAuth 2.0 authentication when setting up an integration with Collibra Platform.

Important

The default SAML and SSL keystores are not supported. If you use a SAML or SSL keystore to manage and store keys and certificates, you must provide your own keystore file for both. When using both a SAML and SSL keystore, you only need to provide a single keystore file.

Enhancements

Connections

- When configuring an Amazon S3 connection and setting it as an Archive Breaking Records location, you can now use Instance Profile to authenticate it.

- When setting up a MongoDB connection, you can now use Kerberos TGT Cache to authenticate it.

- You can now use EntraID Service Principal to authenticate Databricks connections.

- Trino Pushdown connections now support Access Token Manager authentication.

- We upgraded the Teradata driver to version 20.0.0.20.

Explorer

- Explorer now fetches a new authentication token after the previous token expires to ensure seamless connectivity to your data source when using Access Token Manager or Password Manager to authenticate Pullup or Pushdown connections.

Jobs

- When using DB connection security and DQ job security, we added the security setting Require Connection Access, which requires users with ROLE_OWL_CHECK to have access to the connection they intend to run jobs on. When DB connection security and DQ job security are enabled, but Require Connection Access is not, users with ROLE_OWL_CHECK can run jobs to which they have dataset access.
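
The access behavior described above can be read as a small decision function. The following Python sketch only illustrates that reading and is not Data Quality & Observability Classic code. The function and parameter names are hypothetical. It assumes DB connection security and DQ job security are both enabled, and that dataset access is still required when Require Connection Access is turned on.

```python
def can_run_job(has_dataset_access: bool,
                has_connection_access: bool,
                require_connection_access: bool) -> bool:
    """Hypothetical model of the job-run check for a user with ROLE_OWL_CHECK.

    Assumes DB connection security and DQ job security are both enabled.
    """
    # Assumption: dataset access is needed in every case.
    if not has_dataset_access:
        return False
    # With Require Connection Access on, the user also needs the connection.
    if require_connection_access:
        return has_connection_access
    # Otherwise dataset access alone is enough.
    return True
```

Under this reading, a user with dataset access but no connection access can run the job only while Require Connection Access is off.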

Findings

- When exporting Outlier break records containing large values that were previously represented with scientific notation, the file generated from the Export with Details option now exports the true format of these values to match the unshortened, raw source data.
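
To illustrate the difference between the shortened scientific notation and the raw value, the following Python sketch expands a number written with an exponent into plain digits. It is a generic illustration that uses the standard library `decimal` module, not the export logic of the product, and the helper name `to_plain_notation` is hypothetical.

```python
from decimal import Decimal


def to_plain_notation(value: str) -> str:
    """Render a numeric string without an exponent (hypothetical helper)."""
    # The "f" format type forces fixed-point output with no exponent.
    return format(Decimal(value), "f")


print(to_plain_notation("1.23E+10"))  # prints 12300000000
```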

APIs

- Admins and user managers can now leverage the POST /v2/deleteexternaluser call to remove external users.

- You can now add template and data class rules to a dataset with the POST /v3/rules/{dataset} call.

- When you add template and data class rules to a dataset, the templates and data classes must already exist.

- You can use the GET /v3/rules/{dataset} call to return all rules from a dataset, then use the POST /v3/rules/{dataset} call to add them to the dataset you specify. If you add these rules to a different dataset, you must update the dataset name in the POST call and any references to the dataset name in the rules.
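
The copy workflow above could be scripted roughly as follows. This is a minimal Python sketch under several assumptions that the release notes do not confirm: that the GET call returns a JSON array of rule objects, that the POST call accepts the same array, and that the API accepts a bearer token in the Authorization header. The names `base_url`, `token`, `retarget_rules`, and `copy_rules` are hypothetical, as are the field names in the usage example.

```python
import json
import urllib.parse
import urllib.request


def retarget_rules(rules, source_dataset, target_dataset):
    """Return a copy of the rules with the dataset name swapped in every string.

    Assumption: the dataset name can appear in any string field, including
    the rule query. A plain substring swap can over-match if the source name
    is part of a longer name, so review the result before posting it.
    """
    def swap(value):
        if isinstance(value, str):
            return value.replace(source_dataset, target_dataset)
        if isinstance(value, dict):
            return {key: swap(item) for key, item in value.items()}
        if isinstance(value, list):
            return [swap(item) for item in value]
        return value

    return [swap(rule) for rule in rules]


def copy_rules(base_url, token, source_dataset, target_dataset):
    """GET rules from one dataset and POST them to another (assumed payloads)."""
    headers = {
        "Authorization": f"Bearer {token}",  # assumed authentication scheme
        "Content-Type": "application/json",
    }
    source_url = f"{base_url}/v3/rules/{urllib.parse.quote(source_dataset, safe='')}"
    target_url = f"{base_url}/v3/rules/{urllib.parse.quote(target_dataset, safe='')}"

    get_request = urllib.request.Request(source_url, headers=headers)
    with urllib.request.urlopen(get_request) as response:
        rules = json.load(response)

    body = json.dumps(retarget_rules(rules, source_dataset, target_dataset))
    post_request = urllib.request.Request(
        target_url, data=body.encode("utf-8"), headers=headers, method="POST"
    )
    with urllib.request.urlopen(post_request) as response:
        return response.status
```

The renaming step can be checked without a server, for example `retarget_rules([{"dataset": "public.abc", "ruleValue": "select * from public.abc"}], "public.abc", "public.xyz")`. Any template or data class referenced by the rules must already exist before the POST call.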

Integration

- You can now map Data Quality & Observability Classic connections containing database views to their corresponding database view assets in Collibra Platform.

- The Details table on the Quality tab of asset pages is now keyboard navigable.

Pushdown

- When rule breaks are stored in the PostgreSQL Metastore with link IDs assigned, you can now download a CSV file containing the details of the rule breaks and link ID columns via the Findings page > Rules tab > Actions > Rule Breaks modal.

- You can now archive rule break records for Trino Pushdown connections.

- You can now download a CSV file from the Findings page containing break records of rule breaks in the Metastore.

Platform

- Data Quality & Observability Classic now supports CentOS 9 and RedHat Enterprise Linux 9.

Latest UI

- All pages within the Data Quality & Observability Classic application with a blue banner are now set to the latest UI by default. Upon upgrade to this version, any REACT application configuration settings from previous versions will be overridden. The following pages are now set to the latest UI by default:

- Login

- Registration

- Tenant Manager Login

- Explorer

- Profile

- Findings

- Rule Builder

- Admin Connections

Fixes

Connections

- We enhanced the -conf spark.driver.extraClassPath={driver jar} Spark config to allow you to run jobs against Sybase datasets that reference secondary Oracle datasets. (ticket #129397)

Explorer

- When using Temp Files in the latest UI, you can now load table entries. (ticket #144174)

- Encrypted data columns with the BYTES datatype are now deselected and disabled in the Select Columns step, and all data displays correctly in Dataset Overview. (ticket #137738)

- When mapping source to target, we fixed an issue with the data type comparison, which previously caused incorrect Column Order Passing results. (ticket #139814, 140349)

- Preview data for remote file connections now displays throughout the application as expected. (ticket #139582, 142538, 143876)

- We aligned the /v3/getsqlresult and /v2/getlistdataschemapreviewdbtablebycols endpoints so that Google BigQuery jobs with large numbers of rows do not throw errors when they are queried in Dataset Overview. (ticket #140730, 140915, 141515)

Rules

- We fixed an issue where rules did not display break records because extra spaces were added around the parentheses.

- When the file path of the S3 bucket used for break record archival has a timestamp from a previous run, the second run with the same runId no longer fails with an “exception while inserting break records” error message. (ticket #145702)

- We fixed an issue which resulted in an exception message when “limit” was present in a column name included in the query, for example, select column_limit_test from public.abc. (ticket #138356)
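
The "limit" fix above concerns telling a keyword apart from a column name that merely contains it. The following Python sketch shows one generic way to make that distinction with word boundaries. It is not the product's parser, the helper name `has_limit_keyword` is hypothetical, and it does not account for string literals or quoted identifiers.

```python
import re

# \b marks a word boundary. The underscore counts as a word character, so
# "limit" inside "column_limit_test" is not matched as a standalone keyword.
LIMIT_KEYWORD = re.compile(r"\blimit\b", re.IGNORECASE)


def has_limit_keyword(query: str) -> bool:
    """Return True only when LIMIT appears as a standalone word."""
    return LIMIT_KEYWORD.search(query) is not None


print(has_limit_keyword("select column_limit_test from public.abc"))  # False
print(has_limit_keyword("select a from public.abc LIMIT 10"))         # True
```

A plain substring test such as `"limit" in query.lower()` returns True for both queries, which is the kind of false match the word-boundary check avoids.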

Alerts

- Dataset-level alerts with multiple conditions no longer send multiple alerts when only one of the conditions is met. (ticket #144655, 146177)

Scheduling

- After scheduling a job to run monthly in the latest UI, the new job schedule now saves correctly. (ticket #143484)

Jobs

- We fixed an issue where, for job logs spanning multiple pages, only the first page was sorted in ascending or descending order. (ticket #139876)

- The Update Ts (update timestamp) values on the Dataset Manager and Jobs pages now match after rerunning a job. (ticket #141511)

Agent

- We fixed an issue that caused the agent to fail upon start-up when the SSL keystore password was encrypted. (ticket #140899)

Integration

- We fixed an issue where renaming an integrated dataset, then re-integrating it, caused the integration to fail because an additional job asset was incorrectly added to the object table. (ticket #140286, 140667, 140936, 143281, 143697, 144857)

- After editing an integration with a custom dimension that was previously inserted into the dq_dimension Metastore table, you can now select the custom dimension from the dropdown menu of the Dimensions tab of the Integrations page of the Admin Console. (ticket #137450, 145377)

- The Quality tab is now available for standard assets irrespective of the language. Previously, Collibra Platform instances in other languages, such as French, did not support the Quality tab. (ticket #140433)

- We fixed an issue where rules that reference a column with a name that partially matches another column, for example, "cell" and "cell_phone", were incorrectly mapped to both columns in Collibra Platform. (ticket #84983)

- The integration URL sent to Collibra Platform no longer references legacy Data Quality & Observability Classic URLs. (ticket #139764)
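
The partial column-name fix in this list ("cell" versus "cell_phone") comes down to matching whole identifiers instead of substrings. The following Python sketch illustrates that idea in general terms. It is not the integration's mapping logic, and the helper name `referenced_columns` is hypothetical.

```python
import re


def referenced_columns(rule_query, columns):
    """Return the columns that appear in the query as whole identifiers."""
    found = []
    for column in columns:
        # Reject matches that are preceded or followed by another
        # identifier character (letter, digit, or underscore).
        pattern = r"(?<![A-Za-z0-9_])" + re.escape(column) + r"(?![A-Za-z0-9_])"
        if re.search(pattern, rule_query, re.IGNORECASE):
            found.append(column)
    return found


query = "select * from @dataset where cell_phone is null"
print(referenced_columns(query, ["cell", "cell_phone"]))  # ['cell_phone']
```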

Pushdown

- When the SELECT statement of rules created on Snowflake Pushdown datasets uses mixed casing (for example, Select) instead of uppercasing, breaking records now generate in the rule break tables as expected. (ticket #143619, 147953)

- We fixed an issue where the username and password credentials for authenticating Azure Blob Storage connections did not properly save in the Metastore, resulting in job failure at runtime. (ticket #131026, 138844, 140793, 142635, 145201)

- When a rule includes an @ symbol in its query without referring to a dataset, for example, select * from @dataset where column rlike '@', the rule now passes syntax validation and no longer returns an error. (ticket #139670)

APIs

- When dataset security is enabled, users cannot call GET /v3/datasetdef or POST /v3/datasetdef. (ticket #138684)

- When -profoff is added to the command line and the job executes, -datashapeoff is no longer removed from the command line flags when -profoff is removed later. (ticket #140424)

Identity Management

- Users who have dataset access but not connection access can no longer access any dataset Explorer pages. (ticket #138684)

Latest UI

- We resolved an error when creating jobs with Patterns and Outlier checks with custom column references.

- When editing Dupes, columns are no longer deselected when you select a new one.

- Scorecards now support text wrapping so that scorecards with long names fit within UI elements in the latest UI. Additionally, Scorecards now have a character limit of 60 and an error message will display if a scorecard name exceeds it. (ticket #139208)

- Long meta tag names that exceed the width of the column on the Dataset Manager page now have a tooltip to display the full name when you hover your cursor over them.

- We resolved errors that occurred when modifying existing mapping settings.

- We resolved an error when saving the Data Class when the Column Type is Timestamp.

Limitations

Platform

- Due to a change to the datashapelimitui admin limit in Data Quality & Observability Classic 2024.04, you might notice significant changes to the number of Shapes marked on the Shapes tab of the Findings page. While this will be fixed in Data Quality & Observability Classic 2024.06, if you observe this issue in your Data Quality & Observability Classic environment, a temporary workaround is to set the datashapelimit admin limit on the Admin Console > Admin Limits page to a significantly higher value, such as 1000. This will allow all Shapes findings to appear on the Shapes tab.

- When Archive Break Records is enabled for Azure Databricks Pushdown connections authenticated over EntraID, the data preview does not display column names correctly and shows 0 columns in the metadata bar. Therefore, Archive Break Records is not supported for Azure Databricks Pushdown connections that use EntraID authentication.

Integration

- With the latest enhancement to column mapping, you can now successfully map columns containing uppercase letters and special characters, but columns containing periods cannot be mapped.

DQ Security

Important A high-severity vulnerability, CVE-2024-2961, was recently reported and is still under analysis by NVD. A fix is not available as of now. However, after investigating this vulnerability internally and confirming that we are impacted, we have removed the vulnerable character set, ISO-2022-CN-EXT, from our images so that it cannot be exploited using the iconv function. Therefore, we are releasing Data Quality & Observability Classic 2024.06 with this known CVE without an available fix, and we have confirmed that Data Quality & Observability Classic 2024.06 is not vulnerable.

Additionally, a new vulnerability, CVE-2024-33599, was recently reported and is still under analysis by NVD. Name Service Cache Daemon (nscd) is a daemon that caches name service lookups, such as hostnames, user and group names, and other information obtained through services like DNS, NIS, and LDAP. Because nscd inherently relies on glibc to provide the necessary system calls, data structures, and functions required for its operation, our scanning tool reported this CVE under glibc vulnerabilities. Since this vulnerability is only possible when nscd is present, and nscd is neither enabled nor available in our base image, we consider this vulnerability a false positive that cannot be exploited.

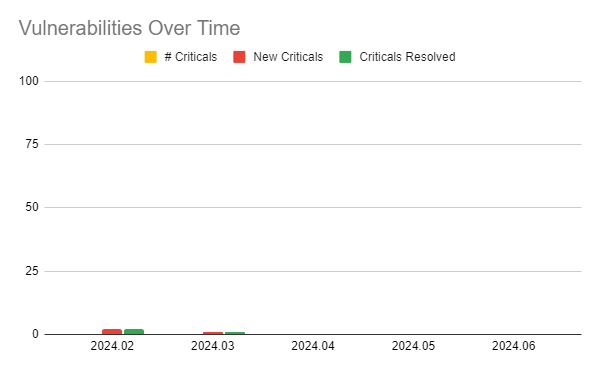

The following image shows a chart of Collibra DQ security vulnerabilities arranged by release version.

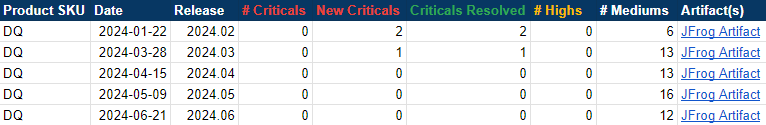

The following image shows a table of Collibra DQ security metrics arranged by release version.