Release 2023.08

Highlights

- Pushdown

We're delighted to announce that Pushdown processing for Databricks is now generally available! Pushdown is an alternative compute method for running DQ jobs, where Collibra DQ submits all of the job's processing directly to a SQL data warehouse, such as Databricks. When all of your data resides in Databricks, Pushdown reduces the amount of data transfer, eliminates egress latency, and removes the Spark compute requirements of a DQ job.

- Pushdown

We're also excited to announce that Pushdown processing for Google BigQuery is now available as a public preview option!

Note The legacy documentation hosted at dq-docs.collibra.com has reached its end-of-life period and now has a redirect link to the official Data Quality & Observability Classic documentation.

New Features

Capabilities

- When reviewing outlier findings, you can now use the Invalidate All option to invalidate all outliers from a given job run in bulk.

- When configuring rule details on the Rule Workbench, you can now define the Scoring Type as either a Percent, which is the default scoring type, or Absolute, which deducts points for breaking rules where the percentage is greater than 0.

- When reviewing rule break findings, you can now select Rule Breaks from the Actions dropdown menu to preview the rule break export file and copy a signed link to the external storage location, giving you more control over how you use and share break records.

DQ Cloud

- When upgrades of DQ Edge sites are required, you can now leverage a utility script to update the Edge DQ version without reinstalling the Edge site.

- We've added the config parameter licenseSource to the Collibra DQ Helm chart to make it easier for our internal teams to update DQ Cloud licenses. "config" is the default value for DQ Cloud deployments.

Pushdown

- When archiving break records from Databricks Pushdown jobs, you can now write them directly to a database or schema in Databricks.

- When you archive break records to the source warehouse, records are now pruned according to specified parameters to prevent tables or schemas from growing to unreasonable sizes. When a job gets pruned from the jobs table, the source break records from the datasource get pruned as well.

Enhancements

Capabilities

- When scheduling jobs to run automatically, the runId of each job now reflects the time and timezone you set. Previously, the runId of scheduled jobs reflected the default UTC server time, irrespective of the timezone you set.

- When setting up a connection to a Google BigQuery data source, you can now use the Service Account Credential option to upload a GCP JSON credential file to authenticate your connection. This enhancement means you no longer need to use the workaround of uploading a JSON credential file as a base64 encoded Kerberos secret.
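To illustrate why the runId change for scheduled jobs matters, the following minimal sketch compares the same scheduled moment expressed in a chosen timezone and in UTC. The schedule time, the fixed UTC-04:00 offset, and the timestamp format are assumptions made for illustration only; they are not taken from Collibra DQ.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schedule: 22:00 on 2023-08-01 in a UTC-04:00 timezone.
# A fixed offset is used so the sketch does not depend on a timezone database.
SCHEDULE_TZ = timezone(timedelta(hours=-4), "UTC-04:00")


def schedule_views(local_time: datetime, tz: timezone) -> tuple[str, str]:
    """Return the scheduled moment formatted in the chosen timezone and in UTC."""
    scheduled = local_time.replace(tzinfo=tz)
    as_utc = scheduled.astimezone(timezone.utc)
    fmt = "%Y-%m-%d %H:%M"  # assumed display format, not the product's runId format
    return scheduled.strftime(fmt), as_utc.strftime(fmt)


local_view, utc_view = schedule_views(datetime(2023, 8, 1, 22, 0), SCHEDULE_TZ)
print(local_view)  # 2023-08-01 22:00
print(utc_view)    # 2023-08-02 02:00
```

In this example the UTC view falls on the next calendar day, which is the kind of mismatch a runId based on server time could show for a job scheduled late in the evening.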

Platform

- The endpoints for the controller-email API have changed. The following endpoints are now available:

- GET /v2/email/server

- POST /v2/email/server

- POST /v2/email/server/validate

- POST /v2/email/group

- GET /v2/email/server/status

- GET /v2/email/groups

Note For more information about the new endpoints, refer to the UI Internal option in Swagger.
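As a rough sketch of how a client might address the endpoints listed above, the following code builds (but does not send) one request per endpoint. The host name, the bearer-token header, and the token value are hypothetical placeholders; request bodies for the POST endpoints are not described here, so none are included. Check Swagger for the actual authentication scheme and payloads.

```python
from urllib.request import Request

BASE_URL = "https://dq.example.com"  # hypothetical host, replace with your own
TOKEN = "REPLACE_ME"                 # hypothetical token; auth scheme is an assumption

# Method and path pairs exactly as listed in these release notes.
EMAIL_ENDPOINTS = [
    ("GET", "/v2/email/server"),
    ("POST", "/v2/email/server"),
    ("POST", "/v2/email/server/validate"),
    ("POST", "/v2/email/group"),
    ("GET", "/v2/email/server/status"),
    ("GET", "/v2/email/groups"),
]


def build_request(method: str, path: str) -> Request:
    """Build an unsent request object for one controller-email endpoint."""
    return Request(
        BASE_URL + path,
        method=method,
        headers={"Authorization": f"Bearer {TOKEN}"},  # assumed header format
    )


email_requests = [build_request(method, path) for method, path in EMAIL_ENDPOINTS]
for req in email_requests:
    print(req.get_method(), req.full_url)
```

Passing one of these objects to urllib.request.urlopen would send it; that step is left out because the expected payloads and responses are not documented in this section.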

Integration

- When using either the Update Integration Credentials or Add New Integration modal to map a connection, the Connections tab now only displays the full database mapping when you click Show Full Mapping, which improves the loading time and enhances the overall experience of the Connections tab.

- Additionally, there is now a Save and Continue button on the Connections tab to ensure your mappings save before proceeding to the next step.

DQ Cloud

- Pendo tracking events no longer contain license_key information when log files are sent to Collibra Console.

- We've improved the performance and resilience of Collibra DQ on Edge sites.

Pushdown

- If Archive Break Records Location is not selected when setting up archive break records from the Connections page, the default schema is now the default schema of the database platform .yaml file. Previously, when a break records output location was not specified, the default location, PUBLIC, would be used.

- When writing custom rules with rlike (regex) operators against Pushdown datasets, exceptions are no longer thrown when the job runs.

- When running a Pushdown job from the Collibra DQ app, not via API, the correct column count displays on the Findings page. Previously, the v2/run-job-json endpoint returned empty columns, which caused the Findings page to display a total column count of 0.

Fixes

Capabilities

- When adding a rule with a string match to a dataset where the string contains parentheses, extra spaces around the parentheses are no longer mistakenly added. (ticket #117055, 118319)

- When selecting a region for an Amazon S3 connection, you can now use AP_SOUTHEAST_3 and AP_SOUTHEAST_4. (ticket #119535)

- When assessing outlier percent change calculations on Findings, the percentage now displays correctly. (ticket #114045)

- When using the out-of-the-box template rule Not_In_Current_Run as a dataset rule, an exception is no longer thrown when the job runs. (ticket #118401)

Platform

- When your Collibra DQ session expires, you are now redirected to the sign-in page. (ticket #111578)

- When migrating DQ jobs from one environment to another, columns that were selected to be included in an outlier check in the source environment now persist to the target environment. Previously, some columns that were selected in the source environment did not persist to the target. (ticket #115224)

- When attempting to edit a completed job on a Redshift connection, the preview limit is now set to 30 rows. Previously, larger datasets experienced long load times or timed out, which prevented you from editing them from Explorer. (ticket #119831, 120245)

- Fixed the critical vulnerability CVE-2023-34034 by upgrading the Spring library. (ticket #122280)

- When running a job, you no longer receive SQL grammar errors. (ticket #120691)

Known Limitations

Capabilities

- When using the rule breaks capability on the classic Rules Findings tab and rule break records from native rules do not exist in the metastore, the preview modal displays a blank preview and sample file.

- When using the rule breaks capability and the remote archive location does not have write permissions, the exception details of the rule being archived are only visible on the Rules Findings tab.

- After updating the timezone from the default UTC timezone to a different one on a dataset with multiple days of data, the dates on the Findings page charts and Metadata Bar still reflect the default UTC timezone.

- A fix will be included in Collibra DQ version 2023.11.

Pushdown

- The archive break records capability cannot be configured from the settings modal on the Explorer page for BigQuery Pushdown connections.

- When using the archive break records capability, BigQuery Pushdown currently only supports rule break records.

- Additional support is planned for an upcoming release.

- When using the archive break records capability to archive rule breaks generated from freeform rules with explicitly selected columns, and not SELECT *, you must include the Link ID column in the rule query for break records to archive correctly.

Example A rule query that includes the Link ID column is SELECT sales_id, cost FROM @dataset WHERE cost < 2000, where "sales_id" represents the Link ID column.

- When you select a date column as the column of reference in the time slice filter of a BigQuery dataset, an unsupported data type message displays. While this will be resolved in an upcoming release, a temporary workaround is to use the SQL View option to manually update the source query to reference a date column. For example, select * from example.nyse where trade_date = safe_cast('${rd}' as DATE)
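To make the BigQuery time slice workaround more concrete, the sketch below shows what the example source query looks like once the ${rd} placeholder is replaced with a date. It assumes ${rd} stands for the job's run date and uses Python's string.Template purely as an illustration; how Collibra DQ performs the substitution internally is not described here, and the date value is made up.

```python
from string import Template

# Source query copied from the workaround above, with the ${rd} placeholder intact.
SOURCE_QUERY = Template(
    "select * from example.nyse where trade_date = safe_cast('${rd}' as DATE)"
)


def resolve_query(run_date: str) -> str:
    """Substitute a run date (hypothetical value) into the ${rd} placeholder."""
    return SOURCE_QUERY.substitute(rd=run_date)


resolved = resolve_query("2023-08-01")
print(resolved)
# select * from example.nyse where trade_date = safe_cast('2023-08-01' as DATE)
```

Casting the substituted string to DATE keeps the comparison against trade_date typed as a date rather than as text.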

DQ Security Metrics

Note The medium, high, and critical vulnerabilities of the DQ Connector are now resolved.

Warning We found 1 critical and 1 high CVE in our JFrog scan. Upon investigation, these CVEs are disputed by Red Hat and no fix is available. For more information, see the official statements from Red Hat:

https://access.redhat.com/security/cve/cve-2023-0687 (Critical)

https://access.redhat.com/security/cve/cve-2023-27534 (High)

The following image shows a chart of Collibra DQ security vulnerabilities arranged by release version.

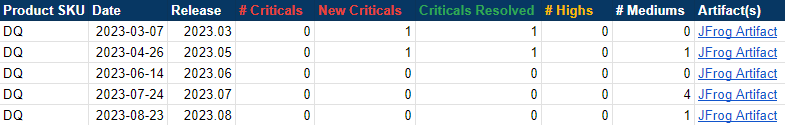

The following image shows a table of Collibra DQ security metrics arranged by release version.

UI Redesign in preview

The following table shows the status of the preview redesign of Collibra DQ pages as of this release. Because the status of these pages only reflects Collibra DQ's internal test environment and completed engineering work, pages marked as "Done" are not necessarily available externally. Full availability of the new preview pages is planned for an upcoming release.

| Page | Location | Status |
|---|---|---|
| Homepage | Homepage | |
| Sidebar navigation | Sidebar navigation | |
| User Profile | User Profile | |
| List View | Views | |
| Assignments | Views | |
| Pulse View | Views | |
| Catalog by Column (Column Manager) | Catalog (Column Manager) | |
| Dataset Manager | Dataset Manager | |
| Alert Definition | Alerts | |
| Alert Notification | Alerts | |
| View Alerts | Alerts | |
| Jobs | Jobs | |
| Jobs Schedule | Jobs Schedule | |
| Rule Definitions | Rules | |
| Rule Summary | Rules | |
| Rule Templates | Rules | |
| Rule Workbench | Rules | |
| Data Classes | Rules | |
| Explorer | Explorer | In Progress |
| Reports | Reports | |
| Dataset Profile | Profile | |
| Dataset Findings | Findings | |
| Sign-in Page | Sign-in Page | |

Note Admin pages are not yet fully available with the new preview UI.

UI Limitations in preview

Explorer

- When using the SQL compiler on the dataset overview for remote files, the Compile button is disabled because the execution of data files at the Spark layer is unsupported.

- You cannot currently upload temp files from the new File Explorer page. This may be addressed in a future release.

- The Formatted view tab on the File Explorer page only supports CSV files.

Connections

- When adding a driver, if you enter the name of a folder that does not exist, a permission issue prevents the creation of a new folder.

- A workaround is to use an existing folder.

Admin

- When adding another external assignment queue from the Assignment Queue page, if an external assignment is already configured, the Test Connection and Submit buttons are disabled for the new connection. Only one external assignment queue can be configured at a time.

Scorecards

- You cannot currently create a new scorecard from the Page dropdown menu because of a missing function.

- While a fix for this is planned for the September (2023.09) release, a workaround is to select the Create Scorecard workflow from the three dots menu instead.

Navigation

- The Dataset Overview function on the metabar is not available for remote files.