Troubleshooting Standalone Install

This section provides a variety of tips for troubleshooting the Collibra DQ standalone installation process.

For information about setup.sh, go to Data Quality & Observability Classic Directory Structure. For information about setup.sh, owl-env.sh, and owl.properties, go to Additional Standalone Configuration Options.

Start and stop components

The owlmanage.sh script enables you to stop and start services or some components of services. It is executed from the /owl/bin directory.

To start different components:

./owlmanage.sh start=postgres

./owlmanage.sh start=owlagent

./owlmanage.sh start=owlweb

To stop different components:

./owlmanage.sh stop=postgres

./owlmanage.sh stop=owlagent

./owlmanage.sh stop=owlweb
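A restart is a stop followed by a start of the same component. The sketch below wraps that pair in one function. The function name restart_component and the OWL_BIN variable, including its default path, are assumptions made for illustration and are not part of Collibra DQ.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restart one component by stopping and then starting it.
# OWL_BIN is an assumed variable that points at the directory containing owlmanage.sh.
OWL_BIN="${OWL_BIN:-/home/owldq/owl/bin}"

restart_component() {
  local component="$1"
  # Accept only the component names used in this section.
  case "$component" in
    postgres|owlagent|owlweb) ;;
    *) echo "unknown component: $component" >&2; return 1 ;;
  esac
  # Start the component only if the stop command succeeded.
  "$OWL_BIN/owlmanage.sh" "stop=$component" && "$OWL_BIN/owlmanage.sh" "start=$component"
}
```

For example, restart_component owlweb runs the stop and start commands for DQ Web in that order.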

To increase the memory available to Java processes in DQ Web, add the following environment variable to owl-env.sh and restart the DQ Web service:

export EXTRA_JVM_OPTIONS="-Xms2g -Xmx2g"

To increase the memory available to Java processes in DQ Agent, update owlmanage.sh. In the start_owlagent() function (at line number 47), update the /java command to include the options -Xms2g -Xmx2g. Then, restart the DQ Agent service.

Verify that the working directory has permissions

For example, if you SSH into the machine as the user owldq and use the default home directory, /home/owldq/, that directory needs the following permissions:

### Ensure appropriate permissions

### drwxr-xr-x

chmod -R 755 /home/owldq

Reinstall PostgreSQL

### Postgres data directory initialization failed

### Postgres permission denied errors

### sed: can't read /home/owldq/owl/postgres/data/postgresql.conf: Permission denied

sudo rm -rf /home/owldq/owl/postgres

chmod -R 755 /home/owldq

### Reinstall just postgres

./setup.sh -owlbase=$OWL_BASE -user=$OWL_METASTORE_USER -pgpassword=$OWL_METASTORE_PASS -options=postgres

Change PostgreSQL password from SSH

### If you need to update your postgres password, you can SSH into the VM

### Connect to your hosted instance of Postgres

sudo -i -u postgres

psql -U postgres

\password

#Enter new password: ### Enter Strong Password

#Enter it again: ### Re-enter Strong Password

\q

exit

Warning: The $ symbol is not a supported special character in your PostgreSQL Metastore password.
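Because a password that contains $ is not supported, it can help to check a candidate password before setting it. The sketch below tests only for the $ character. The function name password_is_supported is hypothetical, and the check does not cover any other password rules.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if the candidate password contains no '$'.
password_is_supported() {
  case "$1" in
    *'$'*) return 1 ;;  # contains the unsupported '$' character
    *)     return 0 ;;
  esac
}
```

For example, password_is_supported 'Ab$c' returns a non-zero status, so the password should be changed before it is used.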

Add permissions for ssh keys when starting Spark

### Spark standalone permission denied after using ./start-all.sh

ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Tip: If the recommendation above is unsuccessful, use the following commands instead of ./start-all.sh:

./start-master.sh

./start-worker.sh spark://$(hostname -f):7077

Change permissions if log files are not writable

### Changing permissions on individual log files

sudo chmod 777 /home/owldq/owl/pids/owl-agent.pid

sudo chmod 777 /home/owldq/owl/pids/owl-web.pid

Get the hostname of the instance

### Getting the hostname of the instance

hostname -f

Increase thread pool

In some situations, you may need to increase the thread pool and adjust timeout settings. For example:

- The user interface responds slowly.

- The thread pool is exhausted (error logs include PoolExhaustedException).

Update the following DQ Web settings in owl-env.sh. The values provided are for a single-tenant deployment:

export SPRING_DATASOURCE_TOMCAT_MAXIDLE=10

export SPRING_DATASOURCE_TOMCAT_MAXACTIVE=20

export SPRING_DATASOURCE_TOMCAT_MAXWAIT=10000

Update the following DQ Agent settings in owl-env.sh. The values provided are for a single-tenant deployment:

export SPRING_DATASOURCE_POOL_MAX_WAIT=500

export SPRING_DATASOURCE_POOL_MAX_SIZE=30

export SPRING_DATASOURCE_POOL_INITIAL_SIZE=5

In multiple-tenant deployments, use the following formula to determine the initial size (SPRING_DATASOURCE_POOL_INITIAL_SIZE):

(number of tenants) * 4

For example, if the deployment consists of three tenants, the initial size should be set to (3*4) = 12.

Restart DQ Web and DQ Agent after making changes to the settings.
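The multiple-tenant formula above can be computed with shell arithmetic. This is a minimal sketch. The function name initial_pool_size is an assumption made for illustration.

```shell
#!/usr/bin/env bash
# Initial pool size for a multiple-tenant deployment: (number of tenants) * 4.
initial_pool_size() {
  local tenants="$1"
  echo $(( tenants * 4 ))
}

# Example: print the export line for a deployment with three tenants.
echo "export SPRING_DATASOURCE_POOL_INITIAL_SIZE=$(initial_pool_size 3)"
```

For three tenants, this prints export SPRING_DATASOURCE_POOL_INITIAL_SIZE=12, which matches the example above.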

Jobs stuck in the Staged activity

If DQ Jobs are stuck in the Staged activity on the Jobs page, update the following DQ Web settings in owl-env.sh:

export SPRING_DATASOURCE_POOL_MAX_WAIT=2500

export SPRING_DATASOURCE_POOL_MAX_SIZE=1000

export SPRING_DATASOURCE_POOL_INITIAL_SIZE=150

export SPRING_DATASOURCE_TOMCAT_MAXIDLE=100

export SPRING_DATASOURCE_TOMCAT_MAXACTIVE=2000

export SPRING_DATASOURCE_TOMCAT_MAXWAIT=10000

Depending on whether your agent is set to Client or Cluster default deployment mode, you may also need to update the following settings in owl.properties:

spring.datasource.tomcat.initial-size=5

spring.datasource.tomcat.max-active=30

spring.datasource.tomcat.max-wait=1000

Be sure to restart DQ Web and DQ Agent after making changes to the settings.

Active database queries

select * from pg_stat_activity where state='active';

Too many open files error

### "Too many open files" error message

### Check and modify the limits.conf file

### Do this on the machine where the agent is running for the Spark standalone version

ulimit -Ha

cat /etc/security/limits.conf
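To see whether the current limit is already high enough before editing the file, the soft limit for open files can be compared with a target value. The sketch below uses 58192, the soft nofile value used in this section, as the target. The function name nofile_below is hypothetical.

```shell
#!/usr/bin/env bash
# Succeed if the current open-file limit is below the target value.
nofile_below() {
  local current="$1" target="$2"
  # A limit reported as "unlimited" is never below the target.
  [ "$current" != "unlimited" ] && [ "$current" -lt "$target" ]
}

# ulimit -Sn prints the soft limit on open files for the current shell.
current_soft="$(ulimit -Sn)"
if nofile_below "$current_soft" 58192; then
  echo "soft nofile limit is $current_soft; consider raising it"
else
  echo "soft nofile limit is $current_soft"
fi
```

Run the check as the user that starts the agent, because limits are applied per user session.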

### Edit the limits.conf file

sudo vi /etc/security/limits.conf

### Increase the limit for example

### Add these 3 lines

fs.file-max=500000

* soft nofile 58192

* hard nofile 100000

### Do not comment out the 3 lines (no '#'s in the 3 lines above)

Path not allowed error

If the following error occurs:

java.nio.file.InvalidPathException: Path not allowed, please contact DQ admin:

To resolve it, specify the allowed local paths by adding the following entry to owl-env.sh:

export ALLOWED_LOCAL_PATHS='*'

You may use the wildcard ('*') to enable all local paths, or specify one or more specific paths separated by a comma.
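As an illustration of the comma-separated form, the entry below allows two specific directories instead of the wildcard. Both paths are hypothetical placeholders, and how Collibra DQ matches subdirectories of the listed paths is not covered here.

```shell
#!/usr/bin/env bash
# Hypothetical paths for illustration; replace them with directories on your host.
export ALLOWED_LOCAL_PATHS='/home/owldq/data,/mnt/shared/dq'

# Print one configured path per line to confirm the value.
echo "$ALLOWED_LOCAL_PATHS" | tr ',' '\n'
```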

Redirect Spark scratch

### Redirect Spark scratch to another location

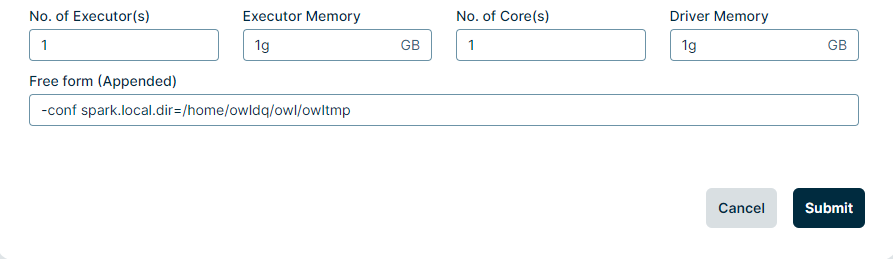

SPARK_LOCAL_DIRS=/mnt/disks/sdb/tmp

Alternatively, you can add the following to the Free form (Appended) field on the Agent Configuration page to change Spark storage:

-conf spark.local.dir=/home/owldq/owl/owltmp

Automate cleanup of Spark work folders

You can add the following line of code to owl/spark/conf/spark-env.sh, which must be copied from the spark-env.sh.template file, or to the bottom of owl/spark/bin/load-spark-env.sh.

### Set Spark to delete older files (cleanup runs every 1800 seconds and removes application data older than 3600 seconds)

export SPARK_WORKER_OPTS="${SPARK_WORKER_OPTS} -Dspark.worker.cleanup.enabled=true -Dspark.worker.cleanup.interval=1800 -Dspark.worker.cleanup.appDataTtl=3600"

Check disk space in the Spark work folder

### Check worker nodes disk space

sudo du -ah | sort -hr | head -5

Delete files in the Spark work folder

### Delete any files in the Spark work directory
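Before deleting, it may help to preview which files match. The sketch below lists the files that a find command with -mtime +1 selects, without removing them. The function name list_stale_files is hypothetical, and the default path is the Spark work directory used in this section.

```shell
#!/usr/bin/env bash
# List regular files that find's "-mtime +1" rule selects (modified at least
# 48 hours ago) under a work directory, without deleting anything.
list_stale_files() {
  local work_dir="${1:-/home/owldq/owl/spark/work}"
  find "$work_dir" -type f -mtime +1 -print
}
```

Call list_stale_files with no argument to check the default work directory, or pass another directory as the first argument. If the list looks right, run the delete command.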

sudo find /home/owldq/owl/spark/work/* -mtime +1 -type f -delete

Troubleshooting Kerberos

For debug logging, add the following to the owl-env.sh file:

# For Kerberos debug logging

export EXTRA_JVM_OPTIONS="-Dsun.security.krb5.debug=true"

Restart the Collibra DQ web service with the following:

./owlmanage.sh stop=owlweb

./owlmanage.sh start=owlweb

Note: You can use the EXTRA_JVM_OPTIONS option for several purposes such as SSL debugging, setting HTTP/HTTPS proxy settings, setting additional keystore properties, and so on.
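Because owl-env.sh uses shell export statements, a second export of EXTRA_JVM_OPTIONS replaces the value set by an earlier one. If the variable already holds other options, such as the memory settings -Xms2g -Xmx2g, appending to the existing value keeps both. The following is a sketch of that pattern, assuming both lines live in the same owl-env.sh file.

```shell
#!/usr/bin/env bash
# Options set earlier in the file, for example the memory settings.
export EXTRA_JVM_OPTIONS="-Xms2g -Xmx2g"

# Append the Kerberos debug flag instead of overwriting the earlier value.
export EXTRA_JVM_OPTIONS="${EXTRA_JVM_OPTIONS} -Dsun.security.krb5.debug=true"

echo "$EXTRA_JVM_OPTIONS"
```

The echo prints -Xms2g -Xmx2g -Dsun.security.krb5.debug=true, confirming that both sets of options are present.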

Add Spark home environment variables to profile

### Adding ENV variables to bash profile

### Replace 'owldq' in the paths below with the user under which DQ is installed, for example centos

vi ~/.bash_profile

export SPARK_HOME=/home/owldq/owl/spark

export PATH=$SPARK_HOME/bin:$PATH

### Add to owl-env.sh for standalone install

vi /home/owldq/owl/config/owl-env.sh

export SPARK_HOME=/home/owldq/owl/spark

export PATH=$SPARK_HOME/bin:$PATH

Spark launch scripts

For information on working with Spark launch scripts, go to Spark Launch Scripts.

Check that processes are running

### Checking PIDS for different components

ps -aef|grep postgres

ps -aef|grep owl-web

ps -aef|grep owl-agent

ps -aef|grep spark

Change the temp directory

The following steps describe how to change the temp directory used by Collibra DQ.

- Verify that the DQ service user has owner and exec permissions on the directory that you intend to assign.

- Add sparksubmitmode=native to the agent.properties file.

- Restart the DQ Agent service.

- Add the following command to the runcmd, replacing /cdg/dq/owl/tmp with your directory:

-conf spark.driver.extraJavaOptions=-Djava.io.tmpdir=/cdg/dq/owl/tmp,spark.executor.extraJavaOptions=-Djava.io.tmpdir=/cdg/dq/owl/tmp

Alternatively, add the following environment variable to the .bashrc file and restart the DQ Agent and Spark services:

export JAVA_TOOL_OPTIONS="-Djava.io.tmpdir=/cdg/dq/owl/tmp"
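The first step, verifying owner and exec permissions on the new temp directory, can be sketched as a small check run as the DQ service user. The function name check_tmpdir is hypothetical. The check confirms only that the directory exists, is owned by the current user, and is writable and executable by that user.

```shell
#!/usr/bin/env bash
# Succeed only if the directory exists, is owned by the current user,
# and is writable and searchable (executable) by that user.
check_tmpdir() {
  local dir="$1"
  [ -d "$dir" ] && [ -O "$dir" ] && [ -w "$dir" ] && [ -x "$dir" ]
}
```

For example, check_tmpdir /cdg/dq/owl/tmp && echo "ok" prints ok when the directory passes all four tests.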