Release 2023.10

Release Information

- Expected release date of Data Quality & Observability Classic 2023.10: October 29, 2023

- Publication dates:

- Release notes: October 22, 2023

- Documentation Center: October 27, 2023

Highlights

- Pushdown

We're excited to announce that Pushdown for Trino is now available as a public preview offering! Pushdown is an alternative compute method for running DQ jobs, where Collibra DQ submits all of the job's processing directly to a SQL data warehouse, such as Trino. When all of your data resides in Trino, Pushdown reduces the amount of data transfer, eliminates egress latency, and removes the Spark compute requirements of a DQ job.

Migration Updates

Important This section only applies if you are upgrading from a version older than Collibra DQ 2023.09 on Spark Standalone. If you have already followed these steps during a previous upgrade, you do not have to do this again.

We have migrated our code to a new repository for improved internal procedures and security. Because owl-env.sh jar files are now prefixed with dq-* instead of owl-*, if you have automation procedures in place to upgrade Collibra DQ versions, you can use the following RegEx replace pattern to update the jars: regex=r"owl-.*-202.*-SPARK.*\.jar|dq-.*-202.*-SPARK.*\.jar"
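The pattern is written as a Python raw string, so one way to apply it in an upgrade script is through the standard `re` module. The following is a minimal sketch, not Collibra tooling: the `find_dq_jars` helper and the sample file names (including their version strings) are hypothetical and only show which names the pattern selects.

```python
import re

# Pattern from the release note: matches both the old owl-* and new dq-* jar names.
JAR_PATTERN = re.compile(
    r"owl-.*-202.*-SPARK.*\.jar|dq-.*-202.*-SPARK.*\.jar"
)


def find_dq_jars(filenames):
    """Return the file names that match the jar pattern in full."""
    return [name for name in filenames if JAR_PATTERN.fullmatch(name)]


# Hypothetical directory listing, used only for illustration.
listing = [
    "owl-webapp-2023.08-SPARK301.jar",
    "dq-webapp-2023.10-SPARK301.jar",
    "owlmanage.sh",
    "postgresql-42.jar",
]

for jar in find_dq_jars(listing):
    print(jar)
```

Because the pattern accepts both prefixes, the same automation can run before and after the rename without changes.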

Additionally, please note the following:

- Standalone Upgrade Steps: When upgrading from a Collibra DQ version before 2023.09 to a Collibra DQ version 2023.09 or later on Spark Standalone, the upgrade steps have changed.

- Open a terminal session.

- Move the old jars from the owl/bin folder with the following commands.

mv owl-webapp-<oldversion>-<spark301>.jar /tmp

mv owl-agent-<oldversion>-<spark301>.jar /tmp

mv owl-core-<oldversion>-<spark301>.jar /tmp

- Copy the new jars into the owl/bin folder from the extracted package.

mv dq-webapp-<newversion>-<spark301>.jar /home/owldq/owl/bin

mv dq-agent-<newversion>-<spark301>.jar /home/owldq/owl/bin

mv dq-core-<newversion>-<spark301>.jar /home/owldq/owl/bin

- Copy the latest owlcheck and owlmanage.sh to the /opt/owl/bin directory.

Tip You may also need to run chmod +x owlcheck owlmanage.sh to add execute permission to owlcheck and owlmanage.sh.

- Start the Collibra DQ Web application.

./owlmanage.sh start=owlweb

- Start the Collibra DQ Agent.

./owlmanage.sh start=owlagent

- Validate the number of active services.

ps -ef | grep owl

Enhancements

Capabilities

- When using the Mapping (Validate Source in classic mode) activity, we've introduced the following options:

- The Skip Lines (srcskiplines from the command line) option instructs Collibra DQ to skip the number of lines you specify in CSV source datasets.

- The Multi Lines (srcmultilines from the command line) option instructs Collibra DQ to read JSON source files formatted in multi-line mode.

- When using the Dataset Manager, you can now filter by Pushdown and/or Pullup connection type.

- When using Pulse View, the Lookback column is now called Last X Days.
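To illustrate what the Skip Lines and Multi Lines options for the Mapping activity mean for a source file, here is a small sketch that uses only the Python standard library. It does not call Collibra DQ and makes no claim about how the options are implemented. The helper names and sample data are hypothetical.

```python
import csv
import io
import json
from itertools import islice


def read_csv_skipping(text, skip_lines):
    """Parse CSV text after discarding the first `skip_lines` physical lines."""
    lines = io.StringIO(text)
    remaining = islice(lines, skip_lines, None)
    return list(csv.DictReader(remaining))


def read_multiline_json(text):
    """Parse one JSON document that spans several lines (not one record per line)."""
    return json.loads(text)


# Two preamble lines precede the real header row, so two lines are skipped.
csv_text = "exported by system X\ngenerated 2023-10-01\nid,name\n1,alpha\n2,beta\n"
rows = read_csv_skipping(csv_text, skip_lines=2)
print(rows)

# Each record is spread over more than one line.
json_text = '[\n  {"id": 1,\n   "name": "alpha"},\n  {"id": 2,\n   "name": "beta"}\n]'
records = read_multiline_json(json_text)
print(records)
```

Without skipping the preamble, the first line would be read as the header row. A line-by-line JSON reader would likewise fail on records that span several lines.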

Pushdown

- Profiling on Pushdown jobs now uses a tiered approach to determine if a string field contains various string numerics, calculate TopN, BottomN, and TopN Shapes, and detect the scale and precision of double fields.

- When running a job with profiling and other layers enabled, the entire allocated connection pool is now used from the beginning to the end of the job to extract the maximum allowed parallelism. Previously, profiling ran first and had to finish before the activities of any other layers began.

- You can now use the Alert Builder to set up alerts for Athena Pushdown jobs.
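For readers unfamiliar with the profiling metrics named above, the following sketch shows one plausible reading of TopN, BottomN, and the precision and scale of a decimal value. It is illustrative only: the definitions (most and least frequent values, digits after the decimal point) are assumptions, the function names are hypothetical, and it does not reproduce the tiered approach or the SQL that Pushdown submits to the warehouse.

```python
from collections import Counter
from decimal import Decimal


def top_n(values, n):
    """Most frequent values, highest count first."""
    return Counter(values).most_common(n)


def bottom_n(values, n):
    """Least frequent values, lowest count first (ties broken by value)."""
    counts = Counter(values)
    return sorted(counts.items(), key=lambda kv: (kv[1], str(kv[0])))[:n]


def precision_and_scale(text):
    """Total number of digits and number of digits after the decimal point."""
    _sign, digits, exponent = Decimal(text).as_tuple()
    scale = max(-exponent, 0)
    precision = max(len(digits), scale)
    return precision, scale


states = ["NY", "CA", "NY", "TX", "NY", "CA"]
print(top_n(states, 2))
print(bottom_n(states, 1))
print(precision_and_scale("123.450"))
```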

DQ Cloud

- DQ Cloud now supports the Collibra DQ to Collibra Platform API-based integration with the same functionality as on-premises deployments of Collibra DQ.

Fixes

Capabilities

- Native SQL rules on connections using Password Manager authentication types now run successfully on Cloud Native deployments of Collibra DQ. (ticket #111493)

- When running a job with a join of 2 datasets, the job no longer incorrectly shows a "Finished" status when the secondary dataset is still in the Spark loading process. (ticket #116004)

- When using Calibrate to modify the start and end dates for jobs with outliers, the dates now save properly. Previously, the calibration dates did not save in the calibration modal. (ticket #120283)

- When creating a job on an Oracle connection, Collibra DQ now blocks SDO_GEOMETRY and other geospatial data types from processing, allowing you to create the job. Previously, these data types prevented the creation of jobs. (ticket #122200)

Platform

- When using a SAML sign-in in a multi-tenant environment, user authentication is now successful when RelayState is unavailable. (ticket #123238)

- When signing into Collibra DQ using the SAML SSO option, you now see all configured tenants. Previously, only one tenant displayed on the SAML SSO dropdown menu. (ticket #124323)

- When specifying database properties containing spaces (' ') in the connection string field of a JDBC connection, the connection URL now properly transcribes string spaces. (ticket #124397)

- When you select the Completeness Report from the Reports page, it now opens as intended. (ticket #123602)

DQ Cloud

- When deleting datasets from Catalog, there is no longer a discrepancy between the number of datasets displayed on the Collibra DQ UI and in PostgreSQL metastore. (ticket #125069)

- Eliminated a spurious error message logged during some normal operations when in Standalone mode.

- You can now use the GET tenant/v3/edges/agents API to retrieve information about your Edge site agents and their statuses.

- OwlCheckQ now synchronizes without error.
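As a rough illustration of calling the GET tenant/v3/edges/agents endpoint mentioned above, the sketch below builds the request without sending it. The host name, the bearer-token authorization header, and the `build_agents_request` helper are assumptions made for the example. Consult your deployment's API documentation for the actual base URL and authentication scheme.

```python
import urllib.request


def build_agents_request(base_url, token):
    """Build (but do not send) a GET request for the Edge site agents endpoint."""
    url = base_url.rstrip("/") + "/tenant/v3/edges/agents"
    return urllib.request.Request(
        url,
        method="GET",
        headers={
            "Authorization": "Bearer " + token,  # assumed auth scheme
            "Accept": "application/json",
        },
    )


# Hypothetical host and placeholder token.
request = build_agents_request("https://dq.example.com", "YOUR_TOKEN")
print(request.get_method(), request.full_url)

# Sending is left out on purpose; with a real host and token it would be:
# with urllib.request.urlopen(request) as response:
#     body = response.read()
```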

Pushdown

- When creating a rule against a Snowflake Pushdown job that references the same dataset twice, the syntax now passes validation. Previously, a Snowflake Pullup rule that referenced the same dataset twice and used the same syntax passed validation, but the Snowflake Pushdown rule did not. (tickets #122364, 122923, 123748)

- When archiving break records to Snowflake, the break records are now properly archived and you no longer receive an error. (tickets #123760, 123987)

Known Limitations

Capabilities

- The Assignments page does not currently filter by date range. However, this functionality is planned for an upcoming release.

Platform

- When using parquet or txt files on the Mapping activity, you must select "parquet" or ".txt," respectively, as the extension. If you use the default "auto" extension option, these file types cannot be used.

DQ Security

Important

We've modified the OS image by removing some of the OS utils, such as curl, to address major vulnerabilities. If you use any of these OS utils in your custom scripts within containers, you need to modify them to use different mechanisms, such as /dev/tcp socket, for the same functions.
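If a custom script only needs a simple HTTP check that curl used to perform, a raw TCP socket can do the same job. The sketch below shows the idea in Python, on the assumption that a Python interpreter is available in your container, which this release note does not state. The function names, host, and path are hypothetical. It handles plain HTTP only, with no TLS or redirects.

```python
import socket


def build_get_request(host, path="/"):
    """Return the bytes of a minimal HTTP/1.0 GET request."""
    return (
        f"GET {path} HTTP/1.0\r\n"
        f"Host: {host}\r\n"
        "Connection: close\r\n"
        "\r\n"
    ).encode("ascii")


def http_get(host, port, path="/", timeout=5.0):
    """Send the request over a TCP socket and return the raw response bytes."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(build_get_request(host, path))
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


# Example call against a hypothetical internal service:
# response = http_get("my-service.internal", 8080, "/health")
# status_line = response.split(b"\r\n", 1)[0]
print(build_get_request("my-service.internal", "/health"))
```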

The following image shows a chart of Collibra DQ security vulnerabilities arranged by release version.

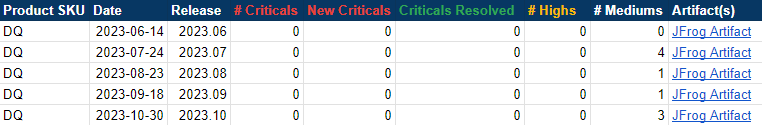

The following image shows a table of Collibra DQ security metrics arranged by release version.

UI in preview

UI Status in preview

The following table shows the status of the preview redesign of Collibra DQ pages as of this release.

| Page | Location | Status |
|---|---|---|
| Homepage | Homepage | |
| Sidebar navigation | Sidebar navigation | |
| User Profile | User Profile | |
| List View | Views | |
| Assignments | Views | |
| Pulse View | Views | |
| Catalog by Column (Column Manager) | Catalog (Column Manager) | |
| Dataset Manager | Dataset Manager | |
| Alert Definition | Alerts | |
| Alert Notification | Alerts | |
| View Alerts | Alerts | |
| Jobs | Jobs | |
| Jobs Schedule | Jobs Schedule | |
| Rule Definitions | Rules | |
| Rule Summary | Rules | |
| Rule Templates | Rules | |
| Rule Workbench | Rules | |
| Data Classes | Rules | |
| Explorer | Explorer | |
| Reports | Reports | |
| Dataset Profile | Profile | |
| Dataset Findings | Findings | |
| Sign-in Page | Sign-in Page | |

Note Admin pages are not yet fully available with the new preview UI.

UI Limitations in preview

Explorer

- When using the SQL compiler on the dataset overview for remote files, the Compile button is disabled because the execution of data files at the Spark layer is unsupported.

- You cannot currently upload temp files from the new File Explorer page. This may be addressed in a future release.

- The Formatted view tab on the File Explorer page only supports CSV files.

- When creating a job, the Estimate Job step from the classic Explorer is no longer a required step. However, if incorrect parameters are set, the job may fail when you run it. If this is the case, return to the Sizing step and click Estimate next to Job Size before you Run the job.

Connections

- When adding a driver, if you enter the name of a folder that does not exist, a permission issue prevents the creation of a new folder.

- A workaround is to use an existing folder.

Admin

- When adding another external assignment queue from the Assignment Queue page, if an external assignment is already configured, the Test Connection and Submit buttons are disabled for the new connection. Only one external assignment queue can be configured at the same time.

Profile

- When adding a distribution rule from the Profile page of a dataset, the Combined and Individual options incorrectly have "OR" and "AND" after them.

- When using the Profile page, Min Length and Max Length do not display the correct string length. This will be addressed in an upcoming release.

Navigation

- The Dataset Overview function on the Metadata Bar is not available for remote files.

- The Dataset Overview modal throws errors for the following connection types:

- BigQuery (Pushdown and Pullup)

- Athena CDATA

- Oracle

- SAP HANA

- The Dataset Overview function throws errors when you run SQL queries on datasets from S3 and BigQuery connections.