Release 2023.06

Highlights

- When running rules against datasets that return many breaking records, you can now optionally export the records to an external Amazon S3 or Azure storage bucket instead of writing them to the PostgreSQL Metastore. By offloading break records to cloud storage, you have more control over how you manage the results of your DQ jobs, and you can store data in the supported remote connection of your choice.

- When building SQL rules to run against a dataset, you can now use the new Rule Workbench to create, edit, and preview SQL conditions to help verify that your data adheres to your organization's business requirements.

Enhancements

Capabilities

- You can now scan data stored in Trino using the Starburst Trino JDBC connection.

  - Ensure that your connection URL contains the following substring: &source=jdbc:presto. For example:

    jdbc:trino://example-host.trino.galaxy.starburst.io:443/sample?SSL=true&source=jdbc:presto

- When working with remote file connections, you can now leverage the Hudi Spark connector to scan Hudi files.

- When using the Estimate Job feature to gauge the resource requirements of a Spark job, you can now set partitionautocal to true on the Admin Limits settings page to automatically calculate Spark partitioning for optimal performance results.

- The Estimate Job feature now estimates the overall memory requirements instead of the memory per executor.

- You can now use the Data Class and Template Rules pages to manage out-of-the-box and custom Data Class and Template rules.

- The dayswithoutData and runswithoutData stat rules now count Day 0 and Run 0 as the first day and run without data.

- The dayswithoutData stat rule now counts days with stale data for 365 days instead of 100.

- When using Source to check a column for mismatched data, you can now select the Strict Source Downscoring option to include -validatevaluesthresholdstrictdownscore in your query during Source setup and base the downscore value on the number of cell mismatches found across all columns. When using this option, you must also assign a key column for source validation.

- When a large number of Dupes are found in a DQ job run, the getDataPreviewByObsType API now has improved performance and no longer times out.

Note The Hudi Spark connector requires a separate package to work properly due to a security vulnerability in the Hudi bundle jar. Please reach out to your CSM for more information about accessing this package.
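For the Trino connection requirement in the Capabilities list above, the &source=jdbc:presto substring can be checked before you save a connection. The following Python sketch is illustrative only: the helper name ensure_presto_source is hypothetical and not part of Collibra DQ, and it assumes the URL already carries query parameters (such as ?SSL=true), because the required substring begins with & rather than ?.

```python
REQUIRED_SOURCE = "&source=jdbc:presto"


def ensure_presto_source(jdbc_url: str) -> str:
    """Return jdbc_url with &source=jdbc:presto appended if it is missing.

    Assumption: the URL already has a query string (for example ?SSL=true),
    because the required substring starts with '&' rather than '?'.
    """
    if REQUIRED_SOURCE in jdbc_url:
        # Already compliant; leave the URL untouched.
        return jdbc_url
    if "?" not in jdbc_url:
        raise ValueError("expected existing query parameters, e.g. ?SSL=true")
    return jdbc_url + REQUIRED_SOURCE


url = "jdbc:trino://example-host.trino.galaxy.starburst.io:443/sample?SSL=true"
# Prints the example URL from these notes, ending in &source=jdbc:presto
print(ensure_presto_source(url))
```

The helper is idempotent, so running it on a URL that already contains the substring returns the URL unchanged.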

Platform

- When adding a new dataset from a Google BigQuery connection, you can now successfully import, query, and identify EXTERNAL table types.

- We adjusted the DQ agent's clean-up routine to resolve a conflict between JAASConf secret deletion and DQ jobs that have longer Spark launch times for Driver and Executor pods. (ticket #109256)

Pushdown

- When configuring the settings of a Pushdown job, you can now choose to log all SQL queries for a dataset by turning SQL Logging on or off from the Settings modal.

- When reviewing the Value column for Outliers on the Findings page, low-precision values now truncate after 2 decimal places when all the trailing values are 0. For example, 3780.0000000 now truncates to 3780.00. High-precision values, such as 162.9583789, still display all decimal values.

- When adding ML layers for Databricks Pushdown jobs, you can now configure numerical outliers. Support for categorical outliers is planned for an upcoming release.

- Because Pushdown is not yet available for DQ Cloud, the Pushdown checkbox option on the Databricks and Snowflake connection templates is temporarily unavailable when setting up a connection on a DQ Cloud deployment.
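The Outlier display rule described above (show two decimal places only when every trailing digit is 0, otherwise keep all digits) can be illustrated with a short Python sketch. format_outlier_value is a hypothetical helper written for illustration, not Collibra DQ's implementation, and it assumes the value arrives as a decimal string.

```python
from decimal import Decimal


def format_outlier_value(raw: str) -> str:
    """Format an Outlier value for display, following the rule in these notes.

    If every digit after the decimal point is 0, show exactly two decimal
    places; otherwise return the value with all of its original digits.
    """
    value = Decimal(raw)
    if value == value.to_integral_value():
        # Low-precision value such as 3780.0000000: trailing digits are all 0.
        return f"{value:.2f}"
    # High-precision value such as 162.9583789: keep every digit.
    return raw


print(format_outlier_value("3780.0000000"))  # 3780.00
print(format_outlier_value("162.9583789"))   # 162.9583789
```

Using Decimal rather than float avoids binary rounding, so the digits shown for high-precision values are exactly the digits that were stored.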

DQ Cloud

- When admin or tenant admin users need to view the state of an Edge site identifier graph, you can now use the following APIs:

  - As admin:

    - GET /v3/diagnostics/edgeSiteIdCache returns a text/plain body with a graph when successful.

    - DELETE /v3/diagnostics/edgeSiteIdCache returns no response body when successful.

  - As tenant admin:

    - GET /tenant/v3/diagnostics/edgeSiteIdCache/{tenant} returns a text/plain body with a graph when successful.

    - DELETE /tenant/v3/diagnostics/edgeSiteIdCache/{tenant} returns no response body when successful.

Note The tenant admin API works only for the {tenant} where the user is tenant admin.
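The following Python sketch shows how the diagnostics endpoints above might be called with the standard library. The base URL and the bearer-token Authorization header are assumptions, so check your deployment's authentication scheme before using it; the helper names edge_site_cache_request and send are hypothetical.

```python
import urllib.request
from typing import Optional
from urllib.parse import quote

ADMIN_PATH = "/v3/diagnostics/edgeSiteIdCache"
TENANT_PATH = "/tenant/v3/diagnostics/edgeSiteIdCache/{tenant}"


def edge_site_cache_request(base_url: str, method: str, token: str,
                            tenant: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a request for the Edge site identifier graph API.

    method: "GET" returns the graph as text/plain; "DELETE" returns no body.
    tenant: None targets the admin endpoint; a tenant name targets the
    tenant admin endpoint, which works only for the caller's own tenant.
    """
    if method not in ("GET", "DELETE"):
        raise ValueError("method must be GET or DELETE")
    if tenant is None:
        path = ADMIN_PATH
    else:
        # Percent-encode the tenant so it is safe as a single path segment.
        path = TENANT_PATH.format(tenant=quote(tenant, safe=""))
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        method=method,
        # Assumption: bearer-token auth; adjust to your deployment's scheme.
        headers={"Authorization": f"Bearer {token}"},
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the response body (empty for DELETE)."""
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

For example, send(edge_site_cache_request("https://dq.example.com", "GET", token)) would return the text/plain graph, where dq.example.com is a placeholder host. Separating request construction from sending makes the URLs easy to inspect before anything is deleted.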

Fixes

Capabilities

- When viewing Outlier records on the Findings page, you can again drill into individual records to view the associated graph. (ticket #110281)

- Rules with null characters in the data again return rule breaks. Previously, null characters caused rule break inserts into the Metastore to return exception messages. (ticket #103402)

- When exporting rule breaking records to Excel, integer values from Collibra DQ no longer display as decimal values in the Excel export file. (ticket #112830)

- Fixed an issue where Shapes detection incorrectly assigned non-numeric text as numeric scientific notation. (ticket #113704)

Platform

- When using an SSO configuration to sign in to Collibra DQ, you no longer get errors when you attempt to sign in. This fix refactors the user lookup so that it first looks up a user profile by username and then by external user ID (externalUserId), continuing until a user is found and returned. (ticket #109171)

- When drilling into a column on the Profile page, Min and Max string length again display the correct values. (ticket #110044)

- When reviewing the Data Summary Report, the Table/File Name column now displays the correct name. (ticket #111508)

- Fixed the builds for the 2023.05 Collibra DQ version. Previously, jobs failed with an "Unknown" status during the Alerts activity and showed a "NoClassDefFoundError" in the job log. (tickets #115956, #116052)

- When running jobs on existing datasets with alerts configured, jobs no longer fail with an error when the ALERT_SCHEDULE_ENABLED option is set to TRUE. (ticket #112837)
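The lookup order described in the SSO fix (ticket #109171) can be sketched as a simple fallback chain. This Python sketch is illustrative only: find_user, the UserProfile alias, and the two lookup callables are hypothetical stand-ins, not Collibra DQ code.

```python
from typing import Callable, Optional

UserProfile = dict  # hypothetical stand-in for a stored user profile
Lookup = Callable[[str], Optional[UserProfile]]


def find_user(username: Optional[str], external_user_id: Optional[str],
              by_username: Lookup, by_external_id: Lookup) -> Optional[UserProfile]:
    """Try each lookup in order and return the first user profile found."""
    attempts = ((by_username, username), (by_external_id, external_user_id))
    for lookup, key in attempts:
        if key is None:
            continue  # this identifier was not supplied by the SSO response
        user = lookup(key)
        if user is not None:
            return user  # stop at the first match
    return None  # no profile matched either identifier
```

Passing the lookups in as callables keeps the ordering logic separate from storage, so dict.get or a database query can be plugged in without changing the function.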

DQ Cloud

- When drilling into rule break records on the Findings page, data preview records again display correctly. (ticket #114162)

- When reviewing the Completeness Report, data now displays correctly when you load the page and updates as the inputs change. (ticket #115312)

Pushdown

- When using query wrapping in Snowflake Pushdown profiling, you can now successfully run a profile scan without errors. (ticket #111977)

Known Limitations

Capabilities

- When using BigQuery datasets and tables with names that begin with a number, there is a limitation that causes DQ jobs to fail.

  - A workaround is to wrap the dataset or table name in back quotes and then update the scope before you run the DQ job. For example, ``select * from `321_apples.example` `` and ``select * from `samples.311_service_requests` ``.

- When using the Rule Template to build SQL rules, there is a limitation with SQLF type rules where an extra select clause is added to the query when:

  - SQL keywords, such as select, from, and where, are not lowercase.

    - A workaround is to write all keywords in lowercase.

  - "@DATASET", "@dataset", and "DATASET" are used to reference a dataset.

    - Instead, use "dataset" in all lowercase letters without an "@" symbol.

- When exporting break records to external storage buckets, there is a limitation that prevents support for the feature on Amazon S3 and Azure connections that use SAML and Instance Profile authentication.

- A workaround is to use Access Key authentication instead.

- When using the getRuleBreaksCreateTable API to generate a create table statement for break records exported to an external storage bucket, a limitation currently prevents the API from returning the proper response. This issue will be addressed in the 2023.07 release; until then, this API is not intended to be used.
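For the BigQuery limitation with names that begin with a number, the back-quote workaround can be applied when you generate the scope query. This Python sketch is a hypothetical helper, not part of Collibra DQ; it wraps the full dataset.table reference in back quotes only when a part begins with a digit, matching the two examples in the workaround.

```python
def quote_bigquery_ref(table_ref: str) -> str:
    """Wrap a dataset.table reference in back quotes if any part starts with a digit."""
    if table_ref.startswith("`") and table_ref.endswith("`"):
        return table_ref  # already quoted
    if any(part[:1].isdigit() for part in table_ref.split(".")):
        return f"`{table_ref}`"
    return table_ref


def scope_query(table_ref: str) -> str:
    """Build a simple scope query using the (possibly quoted) reference."""
    return f"select * from {quote_bigquery_ref(table_ref)}"


print(scope_query("321_apples.example"))            # select * from `321_apples.example`
print(scope_query("samples.311_service_requests"))  # select * from `samples.311_service_requests`
```

Quoting every reference is also valid BigQuery syntax; the conditional form here simply leaves unaffected queries unchanged.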

Platform

- When leveraging the Spark 3.2.2 standalone installation that comes with the AWS Marketplace installation, there is a limitation due to a jar mismatch for the AWS S3 Archive feature: the existing hadoop-aws-3.2.1.jar file is incompatible with the feature.

  - A workaround is to replace hadoop-aws-3.2.1.jar with hadoop-aws-3.3.1.jar in the spark/jars directory. You can obtain the required .jar file from Apache Downloads.

Note If you encounter any difficulties locating the necessary jar file on the Apache Downloads page, contact your CS or SE for assistance.
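Before and after applying the hadoop-aws workaround, you can confirm which hadoop-aws jar is present. This Python sketch is illustrative; check_hadoop_aws is a hypothetical helper, and it assumes you pass the path of your Spark installation's jars directory. It only reads the directory and does not download or replace any files.

```python
from pathlib import Path
from typing import List, Tuple, Union

OLD_JAR = "hadoop-aws-3.2.1.jar"
NEW_JAR = "hadoop-aws-3.3.1.jar"


def check_hadoop_aws(jars_dir: Union[str, Path]) -> Tuple[List[str], bool]:
    """List hadoop-aws jars in jars_dir and report whether the swap is still needed.

    The swap is considered needed if the incompatible 3.2.1 jar is present
    or the 3.3.1 jar is missing. This function only reads the directory;
    it does not download, delete, or copy any files.
    """
    present = sorted(p.name for p in Path(jars_dir).glob("hadoop-aws-*.jar"))
    needs_swap = OLD_JAR in present or NEW_JAR not in present
    return present, needs_swap
```

For example, assuming SPARK_HOME is set, check_hadoop_aws(Path(os.environ["SPARK_HOME"]) / "jars") returns the jar names found and True while the 3.2.1 jar is still in place.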

DQ Cloud

- DQ Cloud does not currently support SAML configuration.

- When reviewing the Completeness Report, new data only displays correctly after you upgrade your Collibra DQ Cloud instance to version 2023.05.2 or later.

- If an Edge site needs to be reinstalled, you must use the original PostgreSQL metastore database, or metastore corruption may occur in Collibra DQ. If necessary, restore the metastore database from a backup before reinstalling. Ensure that the installation command line parameter collibra_edge.collibra.dq.metastoreUrl points to the correct database.

DQ Security Metrics

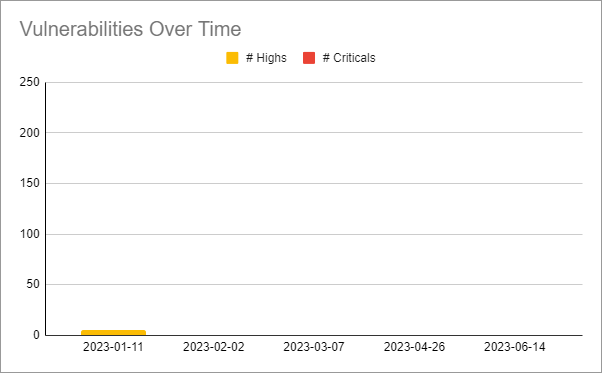

The following image shows a chart of Collibra DQ security vulnerabilities arranged by release version.

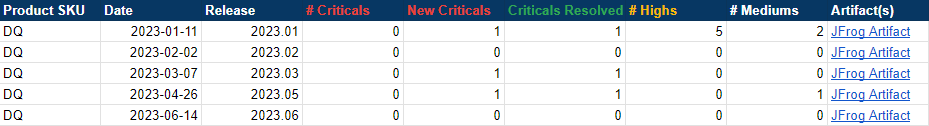

The following image shows a table of Collibra DQ security metrics arranged by release version.

MUI Redesign

The following table shows the status of the MUI redesign of Collibra DQ pages as of this release. Because the status of these pages only reflects Collibra DQ's internal test environment and completed engineering work, pages marked as "Done" are not necessarily available externally. Full availability of the new MUI pages is planned for an upcoming release.

| Page | Location | Status |
|---|---|---|
| Homepage | Homepage | |
| Sidebar navigation | Sidebar navigation | |
| User Profile | User Profile | |
| List View | Views | |
| Assignments | Views | |
| Pulse View | Views | |
| Catalog by Column (Column Manager) | Catalog (Column Manager) | |
| Dataset Manager | Dataset Manager | |
| Alert Definition | Alerts | |
| Alert Notification | Alerts | |
| View Alerts | Alerts | |
| Jobs | Jobs | |
| Jobs Schedule | Jobs Schedule | |
| Rule Definitions | Rules | |
| Rule Summary | Rules | |
| Rule Templates | Rules | |
| Rule Workbench | Rules | In Progress |
| Data Classes | Rules | |
| Explorer | Explorer | In Progress |
| Reports | Reports | In Progress |
| Dataset Profile | Profile | In Progress |
| Dataset Findings | Findings | |
| Sign-in Page | Sign-in Page | |