Release 2024.03

Release Information

- Release date of Collibra Data Quality & Observability 2024.03: April 1, 2024

- Publication dates:

- Release notes: March 8, 2024

- Documentation Center: March 11, 2024

Enhancements

Capabilities

- Admins can now view monthly snapshots of the total number of active datasets in the new Datasets column on the Admin Console Usage page. Additionally, Columns statistics are no longer counted twice when you edit and re-run a dataset with different columns.

- Admins can now optionally remove “Collibra Data Quality & Observability” from alert email subjects. When you remove Collibra Data Quality & Observability from the subject, you must fill in all the alert SMTP details to use the alert configuration checkboxes on the screen. With the product name removed from the alert subject, you can set up your own services to automatically crawl Collibra DQ email alerts.

- Dataset-level alerts, such as Job Completion, Job Failure, and Condition Alerts, as well as global-level alerts for both Pullup and Pushdown Job Failure, now send incrementally from the auto-enabled alert queue.

- We've also added new functionality where, when an alert fails to send, it is still marked as email_sent = true in the alert_q Metastore table. However, no email alerts are sent as a result of this. An enhancement to automatically clean the alert_q table of stale alerts marked as email_sent = true is scheduled for the upcoming Collibra Data Quality & Observability 2024.05 release.

Important: As a result of this enhancement, following an upgrade to Collibra Data Quality & Observability 2024.03, any old, unsent alerts in the alert queue will be sent automatically. This is a one-time event and these alerts can be safely ignored.

- We've optimized internal processing when querying Trino connections by passing the catalog name in the Connection URL. The catalog name can be set by creating a Trino connection from the Admin Console Connections page and adding ConnCatalog=$catalogName to the Connection URL.

- We've added a generic placeholder in the Collibra DQ Helm Charts to allow you to bring in additional external mount volumes to the DQ Web pod. Additionally, when enabled in the DQ check logs and external mount volumes, the persistent volumes provisioned to set the storage class of the persistent volume claims for Collibra DQ now include a placeholder value that lets you specify the storage class type. This provides an option to bring Azure vault secrets as external mount volumes into the DQ Web pod.

The following shows the Helm values.yaml file to mount the Kubernetes secret "extra-secret" at the DQ Web pod location "/mnt":

    ...
    web:
      extraStorage:
        volumes:
          - name: extra-secret
            secret:
              defaultMode: 493  # decimal form of octal 0755
              secretName: extra-secret
        volumeMounts:
          - mountPath: /mnt
            name: extra-secret
    ...

You can set the storage class for the DQ Web PVC with the following Helm value:

    --set global.persistence.web.storageClassName=gp3

Platform

- We now support Cloud Native Collibra DQ deployments on OpenShift Container Platform 4.x.

- When using a proxy, SAML does not support URL-based metadata; it supports only file-based metadata. To ensure this works properly, set the property SAML_METADATA_USER_URL=false in the owl-env.sh file for Standalone deployments or the DQ-web ConfigMap for Cloud Native.

- We removed the Profile Report, as all of its information is also available on the Dataset Findings Report.
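For the SAML proxy setting described above, a Standalone deployment might carry a line like the following in owl-env.sh. This is a minimal sketch: the property name and value come from the note above, while the use of an export statement is an assumption about how owl-env.sh declares its settings. Cloud Native deployments would set the same key in the DQ-web ConfigMap instead.

```shell
# owl-env.sh (Standalone deployments)
# Assumption: settings in this file are declared as exported shell variables.
# Use file-based SAML metadata when Collibra DQ sits behind a proxy.
export SAML_METADATA_USER_URL=false
```

Changes to this file are typically picked up only after the affected service is restarted.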

Fixes

Capabilities

- We fixed an issue that caused Redshift datasets that referenced secondary Amazon S3 datasets to fail with a 403 error. (ticket #132975)

- On the Profile page of a dataset, the number of TopN shapes now matches the total number of occurrences of such patterns. (ticket #133817)

- When deleting and renaming datasets from the Dataset Manager, you can now rename a dataset using the name of a previously deleted one. (ticket #132799)

- When renaming a dataset from the Dataset Manager and configuring it to run on a schedule, the scheduled job no longer combines the old and new names of the dataset when it runs. (ticket #132798)

- On the Role Management page in the latest UI, roles must now pass validation to prevent ones that do not adhere to the naming requirements from being created. (ticket #133497)

- When creating a Trino dataset with Pattern detection enabled, date type columns are no longer cast incorrectly as varchar type columns during the historical data load process. (ticket #132478)

- On the Connections page in the latest UI, the input field now automatically populates when you click Assume Role for Amazon S3 connections. (ticket #132323, 132423)

- On the Findings page, when reviewing the Outliers tab, the Conf column now has a tooltip to clarify the purpose of the confidence score. (ticket #129768)

- When DQ Job Security is enabled and a user does not have ROLE_OWL_CHECK assigned to them, both Pullup and Pushdown jobs now show an error message “Failed to load job to the queue: DQ Job Security is enabled. You do not have the authority to run a DQ Job, you must be an Admin or have the role Role_Owl_Check and be mapped to this dataset.” (ticket #133623)

- When creating a DQ job on a root or nested Amazon S3 folder without any files in it, the system now returns a more elegant error message. (ticket #134187)

- On the Profile page in the latest UI, the WHERE query is now correctly formed when adding a valid values quick rule. (ticket #133455)

Platform

- When viewing TopN values, the previously encrypted valid values now decrypt correctly. (ticket #131951)

- When a user with ROLE_DATA_GOVERNANCE_MANAGER edits a dataset from the Dataset Manager, the Metatags field is the only field such a user can edit and have their updates pushed to the Metastore. (ticket #132468, 135889)

- We fixed an issue with the /v3/datasetDefs/{dataset} and /v3/datasetDefs/{dataset}/cmdLine APIs that caused the -lib, -srclib, and -addlib parameters to revert in the command line. (ticket #131281)

Pushdown

- When casting from one data type to another on a Redshift dataset, the job now runs successfully without returning an exception message. (ticket #128718)

- When running a job with outliers enabled, we now parse the source query for new line characters to prevent carriage returns from causing the job to fail. (ticket #132322)

- The character limit for varchar columns is now 256. Additionally, to prevent jobs from failing when a varchar column exceeds the 256-character limit. (ticket #131355)

- BigQuery Pushdown jobs on datasets larger than 10 GB no longer fail with a “Response too large” error. (ticket #134643, 135504)

- When running a Pushdown job with archive break records enabled without a link ID assigned to a column, a helpful warning message now highlights the requirements for proper break record archival. (ticket #132545)

- The Source Name parameter on the Connections template for Pushdown connections now persists to the /v2/getcatalogandconnsrcnamebydataset API call as intended. (ticket #132334)
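To illustrate the carriage-return fix listed above (ticket #132322), the sketch below shows how stray Windows-style line endings in a source query can be flattened into a single line. It is not Collibra DQ's implementation; the function name normalize_query and the sample query are hypothetical and serve only to show the kind of input that used to cause trouble.

```shell
# Hypothetical helper: strip carriage returns and join lines with spaces.
normalize_query() {
  printf '%s' "$1" | tr -d '\r' | tr '\n' ' '
}

# Sample query with CRLF line endings, as pasted from a Windows editor.
query=$(printf 'SELECT id\r\nFROM orders\r\nWHERE total > 10')

normalize_query "$query"
```

A query cleaned this way contains no carriage returns or embedded newlines, which is the condition the fix is meant to handle automatically.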

Limitations

- TopN values from jobs that ran before enabling encryption on the Collibra DQ instance are not decrypted. To decrypt TopN values after enabling encryption, re-run the job once encryption is enabled on your Collibra DQ instance.

DQ Security

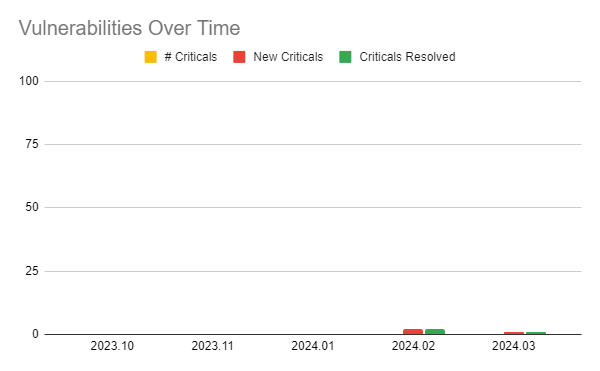

The following image shows a chart of Collibra DQ security vulnerabilities arranged by release version.

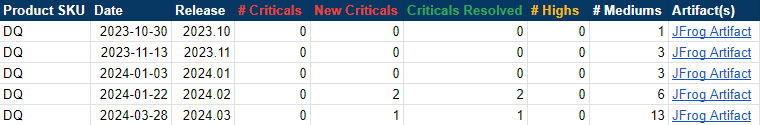

The following image shows a table of Collibra DQ security metrics arranged by release version.