Important Mapping is only available in Pullup mode and for Snowflake connections in Pushdown mode between datasets from the same Snowflake Pushdown connection. If you are using other connections in Pushdown mode, proceed to the next available step.

Mapping allows you to discover inconsistencies between the columns of one database, file, or remote file and those of another. It checks for inconsistencies such as row count, schema, and value differences in data copied from data sources to target tables. By mapping data from the source system to a different target system, you can prevent the two systems from getting out of sync with one another.

Note Mapping is disabled by default.

Steps

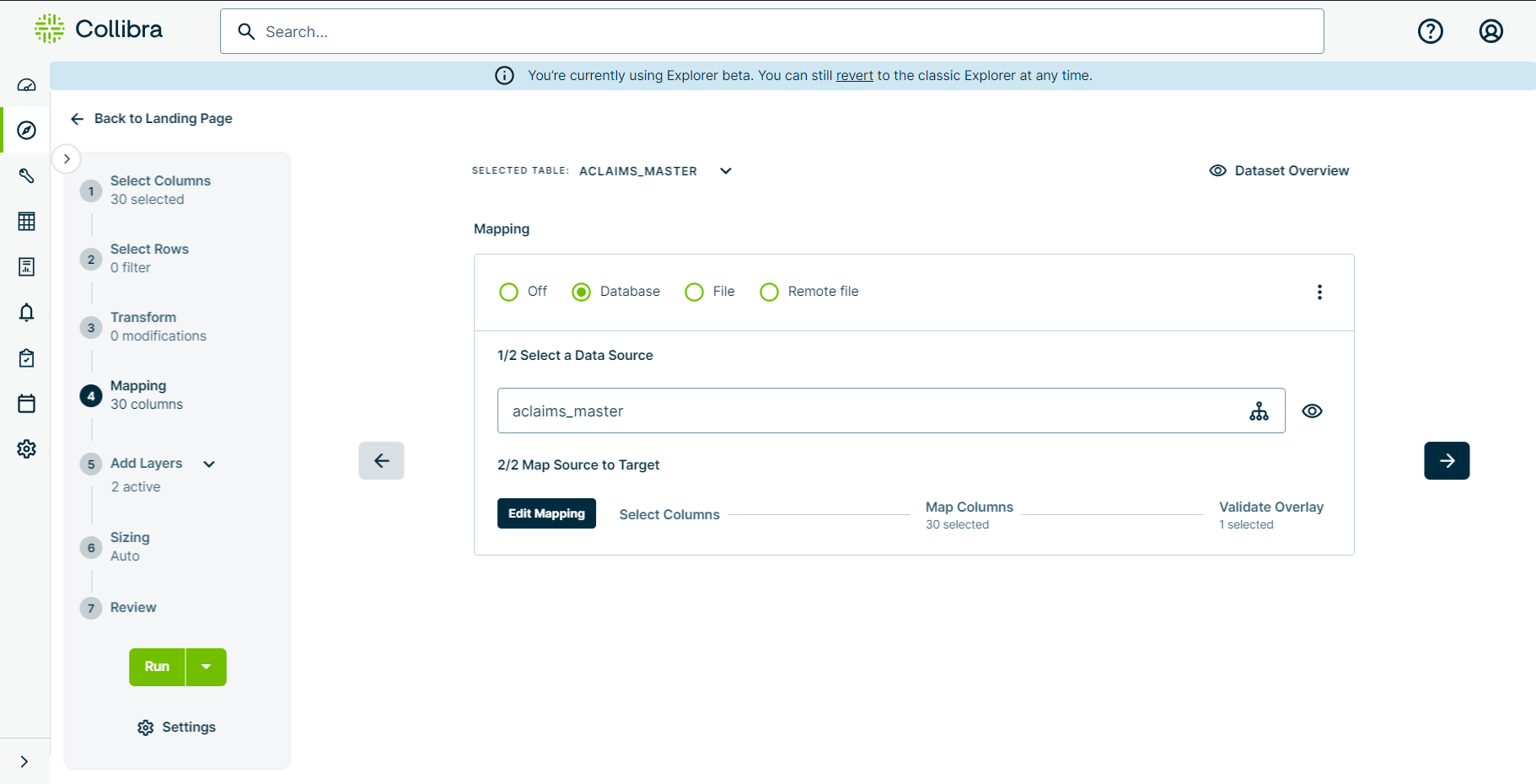

- On the stepper, click Mapping.

The Mapping step opens.

- Select one of the following options and follow the steps accordingly.

- Select the Database option.

- Under the Select a Data Source section, click the Select a resource dropdown menu.

A list of available database connections appears.

- Select the source database from the list of connections to which your target columns will map for source validation.

- Target Column Missing in Source: If a selected column exists in the target dataset but not in the source, the resulting outer join table will display "null" for that column in the source dataset.

- Source Column Missing in Target: If a selected column exists in the source dataset but not in the target, the resulting outer join table will display "null" for that column in the target dataset.

- Mismatch Identification and Key Extraction: The extracted keys will be "null" for any source record that lacks a matching target record in the selected columns. The displayed keys are only from the target dataset.

- Select the File option.

- Under the Select File Location section, select a file protocol option from the Protocol dropdown menu, then enter the folder in the Folder input field where the file is stored. Alternatively, click the Use Temporary file dropdown menu to use a temp file.

- Under the Select a Data Source section, click the Select a resource dropdown menu.

A list of available file-based connections appears.

- Select the source file from the list of file-based connections to which your target columns will map for source validation.

- Click the Delimiter dropdown menu and select a delimiter option reflective of how columns are separated in your file.

- Optionally select the Add Header option and add comma-separated column names in the Column Names input field below.

- Optionally select Bypass Schema Evaluation if Spark is unavailable.

- Select the Remote file option.

- Under the Select a Data Source section, click the Select a resource dropdown menu.

A list of available database connections appears.

- Select the source remote file from the list of file-based connections to which your target columns will map for source validation.

- Click the Delimiter dropdown menu and select a delimiter option reflective of how columns are separated in your file.

- Collibra DQ automatically detects the extension type, but you can manually select one by clicking the Extension dropdown and selecting an option.

- Click Load Schema.

- Click Start Mapping.

The Column Selector modal appears. The Column Selector shows the available columns in your target dataset and includes details about the data type present in each column.

- Optionally click Enable Timeslice and select a Timeslice Column from the dropdown menu.

This adds a ${rd} placeholder to your query, where the datetime value is substituted at runtime.

- Select the columns to include in the mapping using the checkbox options next to each column name.

- Click To Mapper in the lower right corner.

The Source to Target Mapper appears. The Source to Target Mapper allows you to map source columns on the left side of the screen to their targets on the right.

- In the Target Column, click the dropdown menu within a given row and choose the columns you wish to map to the source column within the corresponding row. If the automapping that Collibra DQ suggested is correct, you can proceed to the next step without making modifications.

- Click Validate Overlay.

The Overlay Preview appears. The Overlay Preview shows you the source to target mapping based on your selections in the previous steps. A helpful legend is available in the upper right corner of the screen.

- Optionally select the Key checkbox at the top of any of the columns to assign them as key columns.

- Click Validate.

The main Mapping screen displays the number of mapped columns in the stepper.

- Continue to the next step on the stepper or click the right arrow on the right side of the page.
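The Enable Timeslice step above adds a ${rd} placeholder whose datetime value is filled in at runtime. The following minimal sketch illustrates that kind of substitution with Python's standard `string.Template`. The table name, column name, and ISO date format are assumptions made for illustration; the source does not state how Collibra DQ formats the substituted value.

```python
from datetime import date
from string import Template


def render_query(query: str, run_date: date) -> str:
    """Replace the ${rd} placeholder with the run date in ISO format (assumed format)."""
    return Template(query).substitute(rd=run_date.isoformat())


# Hypothetical table and column names, for illustration only.
query = "SELECT * FROM orders WHERE order_date = '${rd}'"
rendered = render_query(query, date(2024, 1, 15))
print(rendered)
```

Because the placeholder is resolved per run, the same saved query can target a different time slice on each execution.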

Tip You can click ![]() to open Data Preview for a snapshot of the data you selected.


An outer join is used to find discrepancies between source and target datasets and to ensure all records from both datasets are included in the resulting table, even if they don't have matching values in the other dataset. Keys are used to determine which rows should be joined together.
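To make the outer join behavior described above concrete, here is a minimal sketch of a generic full outer join over two small in-memory datasets. It is not Collibra DQ's implementation: the function name, the row layout, and the assumption that key values are unique within each dataset are all hypothetical.

```python
def full_outer_join(source, target, key):
    """Join two lists of dict rows on `key`, keeping unmatched rows from both sides.

    Assumes key values are unique within each dataset.
    """
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    joined = []
    # Union of keys: every record from either side appears exactly once.
    for k in sorted(src.keys() | tgt.keys()):
        joined.append({"key": k, "source": src.get(k), "target": tgt.get(k)})
    return joined


# Hypothetical sample data.
source_rows = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
target_rows = [{"id": 2, "name": "Bob"}, {"id": 3, "name": "Cy"}]

joined = full_outer_join(source_rows, target_rows, "id")
for row in joined:
    print(row)
```

Rows whose key exists on only one side carry `None` on the other side, which corresponds to the "null" entries that the resulting outer join table displays.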

Tip You can click ![]() to open the Data Preview where you can see a preview of the data you selected.


Note Bypass Schema Evaluation is a way to avoid loading a SparkSession for CSV and TXT files. You should only select this option when Spark is unavailable or you are unable to load Spark.


Note Depending on the extension type, there may be additional options that appear when you make your selection. See the table below for reference.

| Option | Description | .csv | .txt | .orc | parquet | avro | json | delta | xml | hudi |
|---|---|---|---|---|---|---|---|---|---|---|
| Skip Lines | Add a numerical value to instruct Collibra DQ on the number of lines (rows) to skip. These are the lines in your file after the non-data information ends and the data to include in your job begins. | | | | | | | | | |
| Add Header | Denotes that the file contains a header row. | | | | | | | | | |
| Column Names | A comma-separated list of the header column names. For example, Date,Name,Title | | | | | | | | | |
| Bypass Schema Evaluation | A method to avoid loading a SparkSession for CSV and TXT files. You should only select this option when Spark is unavailable or you are unable to load Spark. | | | | | | | | | |
| Flatten | Refers to the level of nesting in your file. | | | | | | | | | |
| Multiline | Refers to the content of the file being more than one line. | | | | | | | | | |
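As an illustration of how the Delimiter, Skip Lines, and Column Names options relate to one another, the sketch below parses a small delimited text sample with Python's standard `csv` module. The function and its parameters are hypothetical stand-ins for the UI options, not Collibra DQ's parser, and the sample data is invented.

```python
import csv


def read_delimited(text, delimiter=",", skip_lines=0, column_names=None):
    """Parse delimited text into a list of dict records.

    skip_lines:   number of leading non-data lines to drop.
    column_names: comma-separated header names to apply; if None, the first
                  remaining line is treated as the header row.
    """
    lines = text.splitlines()[skip_lines:]
    rows = list(csv.reader(lines, delimiter=delimiter))
    if column_names is None:
        header, rows = rows[0], rows[1:]
    else:
        header = [name.strip() for name in column_names.split(",")]
    return [dict(zip(header, row)) for row in rows]


# Hypothetical file content: two lines of non-data information, then data.
raw = (
    "Exported by nightly job\n"
    "Generated 2024-01-15\n"
    "2024-01-01|Ann|Engineer\n"
    "2024-01-02|Bob|Analyst\n"
)

records = read_delimited(raw, delimiter="|", skip_lines=2, column_names="Date,Name,Title")
for record in records:
    print(record)
```

Here the two leading lines are skipped and the supplied names Date, Name, and Title label the columns of a file that has no header row of its own.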

Note The Column Selector step allows advanced SQL users to optionally click the ![]() in the upper right corner to switch to SQL View for manual SQL compilation.


Note All columns are selected by default.

Tip If your source columns contain white spaces, they may not map properly to the target columns. To prevent mismatches, replace spaces with underscores (_) on the command line.
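As a small illustration of the renaming that this tip describes, the following sketch replaces spaces with underscores in a list of column names. The function name and the sample column names are hypothetical; how you apply the renamed columns on the command line depends on your own setup.

```python
def normalize_column_names(names):
    """Replace each space in every column name with an underscore."""
    return [name.replace(" ", "_") for name in names]


# Hypothetical source column names.
columns = normalize_column_names(["order id", "customer name", "total"])
print(columns)
```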

Note Collibra DQ automaps columns it detects as matches between the source and target.

Tip You can use rules with secondary datasets to improve performance while validating and writing a high number of failure records/break records to your choice of blob storage.

Mapping Configuration

You can click ![]() in the upper right corner of the Mapping main screen to apply different configuration options. The following table shows the available options.


| Option | Description |
|---|---|
| Pushdown Count | Runs COUNT(*) FROM <database> to limit the rows included in the mapping query to only those that meet the Pushdown Count criteria. This can increase the efficiency of your DQ job by preventing data that does not meet the criteria of Pushdown Count from being included in the Spark compute tier. |
| Check case | Checks for case sensitivity when comparing source and target schemas by creating a new mapping to instruct Collibra DQ about the case-sensitive source to target mapping. |
| Order | Checks for schema order consistency when comparing source and target schemas. |
| Check type | Checks for schema type consistency when comparing source and target schemas. |
| Validate values | Unavailable when Pushdown Count is enabled. Checks for cell value when comparing source and target cells. If no rows are present in either the source or target data source, the cell value comparison activity is skipped when the job runs. Note This requires a large amount of computational resources. |
| Validate for matches | Unavailable when Pushdown Count is enabled. Checks for matching instead of mismatching cell values when comparing source and target cells. |
| Trim | Unavailable when Pushdown Count is enabled. Removes leading and trailing spaces from strings to improve data cleanliness when comparing source and target cells. |
| Ignore null | Ignores NULL values in match findings. This option is available if a key is selected in the Select Columns step. |
| Ignore empty | Ignores empty ("") values in match findings. This option is available if a key is selected in the Select Columns step. |
| Missing Keys | Unavailable when Pushdown Count is enabled. Shows key values that are found in one data source but missing in the other. |
| Ignore Precision | Ignores differences in precision (the total number of digits) in numeric data type columns when comparing source and target schemas. |
| Strict Source Downscoring | Bases the downscore value on the number of cell mismatches across all columns. For example, if there are 20 cell mismatches, then 20 points are deducted from the overall data quality score. |
| Show all | Shows all the column values that match and mismatch. Reports more than one Source finding per row. This option is available if a key is selected in the Select Columns step. |
| Threshold | Sets a percent threshold for column target matches (if Validate for matches is selected) or mismatches (if Validate for matches is not selected). This option is available if a key is selected in the Select Columns step. |
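The cell-level options in the table above (Trim, Ignore null, Ignore empty, and Strict Source Downscoring) can be pictured with the following minimal sketch. It is one plausible reading of the option descriptions, not Collibra DQ's implementation. In particular, skipping a comparison when either side is null or empty, the base score of 100, and the floor at 0 are assumptions, and all names and data are hypothetical.

```python
def count_mismatches(source, target, columns,
                     trim=False, ignore_null=False, ignore_empty=False):
    """Count mismatching cells for keys present in both datasets.

    source/target map a key value to a row dict.
    """
    def clean(value):
        # Trim: remove leading and trailing spaces from strings.
        return value.strip() if trim and isinstance(value, str) else value

    mismatches = 0
    for key in source.keys() & target.keys():
        for col in columns:
            s, t = clean(source[key].get(col)), clean(target[key].get(col))
            if ignore_null and (s is None or t is None):
                continue  # assumed meaning of Ignore null
            if ignore_empty and (s == "" or t == ""):
                continue  # assumed meaning of Ignore empty
            if s != t:
                mismatches += 1
    return mismatches


def strict_downscore(mismatches, base_score=100):
    """Deduct one point per cell mismatch (base of 100 and floor of 0 are assumptions)."""
    return max(0, base_score - mismatches)


# Hypothetical data keyed by id.
source = {1: {"name": "Ann ", "city": "Oslo"},
          2: {"name": "Bob", "city": None},
          3: {"name": "", "city": "Rome"}}
target = {1: {"name": "Ann", "city": "Oslo"},
          2: {"name": "Bob", "city": "Paris"},
          3: {"name": "Cy", "city": "Roma"}}
cols = ["name", "city"]

raw_count = count_mismatches(source, target, cols)
trimmed_count = count_mismatches(source, target, cols, trim=True)
strict_count = count_mismatches(source, target, cols, trim=True,
                                ignore_null=True, ignore_empty=True)
print(raw_count, trimmed_count, strict_count, strict_downscore(20))
```

Each option removes one class of findings: trimming resolves the trailing-space difference, while the ignore options drop comparisons that involve a null or an empty string.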

Note Source queries that contain WITH UR are only supported by Db2 connections.
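The schema-level options in the Mapping Configuration table (Check case, Order, and Check type) can be illustrated with a short sketch. It shows one interpretation of those descriptions rather than Collibra DQ's actual logic; the function, the finding messages, and the sample schemas are hypothetical.

```python
def compare_schemas(source, target,
                    check_case=False, check_order=False, check_type=False):
    """Compare two schemas given as ordered lists of (column_name, data_type).

    Returns a list of human-readable findings.
    """
    def norm(name):
        # Without Check case, column names are compared case-insensitively.
        return name if check_case else name.lower()

    src = [(norm(n), t) for n, t in source]
    tgt = [(norm(n), t) for n, t in target]
    src_types, tgt_types = dict(src), dict(tgt)

    findings = []
    for name in src_types:
        if name not in tgt_types:
            findings.append(f"missing in target: {name}")
        elif check_type and src_types[name] != tgt_types[name]:
            findings.append(f"type mismatch: {name}")
    for name in tgt_types:
        if name not in src_types:
            findings.append(f"missing in source: {name}")

    if check_order:
        # Compare the relative order of the columns both schemas share.
        shared_src = [n for n, _ in src if n in tgt_types]
        shared_tgt = [n for n, _ in tgt if n in src_types]
        if shared_src != shared_tgt:
            findings.append("column order differs")
    return findings


# Hypothetical schemas.
source_schema = [("ID", "int"), ("Name", "string"), ("Amount", "decimal")]
target_schema = [("id", "int"), ("amount", "double"), ("name", "string")]

print(compare_schemas(source_schema, target_schema))
print(compare_schemas(source_schema, target_schema, check_type=True))
print(compare_schemas(source_schema, target_schema, check_order=True))
```

With every option off, the two schemas are treated as equivalent; each option enabled surfaces one additional class of difference.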