Run a data quality check on a Hive table. Use the -hive flag for a native connection via HCat. This does not require a JDBC connection and is optimized for distributed speed and scale.

Hive Native, no JDBC Connection

Open source platforms like HDP, EMR and CDH use well-known standards, so DQ can take advantage of components like HCat. This removes the need for JDBC connection details and offers optimum data read speeds. DQ recommends and supports this approach with the -hive flag.

./owlcheck -ds hive_table -rd 2019-03-13 \

-q "select * from hive_table" -hive

Example output. A hoot is a valid JSON response.

{

"dataset": "hive_table",

"runId": "2019-02-03",

"score": 100,

"behaviorScore": 0,

"rows": 477261,

"prettyPrint": true

}

Hive JDBC

- You need to use the Hive JDBC driver, commonly org.apache.hive.jdbc.HiveDriver.

- You need to locate the JDBC driver jar for the version that came with your EMR, HDP or CDH distribution.

- This jar is commonly found on an edge node under /opt/hdp/libs/hive/hive-jdbc.jar etc...
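If the jar is not where you expect it, a search of the edge node can help. The following is a minimal sketch, not part of DQ: the function name `find_hive_jdbc_jars` is hypothetical, and the file name pattern assumes the jar follows the `hive-jdbc*.jar` naming shown above.

```shell
# List candidate Hive JDBC jars under a directory tree.
# The search root is an assumption; pass the path used by your distribution.
find_hive_jdbc_jars() {
  _search_root="$1"
  # Permission errors on unreadable directories are discarded.
  find "$_search_root" -type f -name 'hive-jdbc*.jar' 2>/dev/null
}

# Example call (the path is illustrative):
#   find_hive_jdbc_jars /opt
```

Each path printed is a candidate for the directory passed to -lib.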

./owlcheck -rd 2019-06-07 -ds hive_table \

-u <user> -p <pass> -q "select * from table" \

-c "jdbc:hive2://<HOST>:10000/default" \

-driver org.apache.hive.jdbc.HiveDriver \

-lib /opt/owl/drivers/hive/ \

-master yarn -deploymode client

HDP Driver - org.apache.hive.jdbc.HiveDriver

CDH Driver - com.cloudera.hive.jdbc41.Datasource

For CDH, all the drivers are packaged in HiveJDBC41_cdhversion.zip.

Troubleshooting

A common JDBC connection setting is hive.resultset.use.unique.column.names=false.

This can be added directly to the JDBC connection URL string or to the driver properties section.
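For the URL option, the Hive JDBC URL format places Hive configuration properties after a `?`, with further properties separated by `;`. The sketch below builds such a URL. The helper name `append_hive_conf` is hypothetical, and it does not handle URLs that already carry a `#` variable list.

```shell
# Append a Hive configuration property to a hive2 JDBC URL.
# Uses "?" for the first property and ";" for any that follow.
append_hive_conf() {
  _url="$1"
  _prop="$2"
  case "$_url" in
    *\?*) printf '%s;%s\n' "$_url" "$_prop" ;;
    *)    printf '%s?%s\n' "$_url" "$_prop" ;;
  esac
}

url=$(append_hive_conf 'jdbc:hive2://<HOST>:10000/default' \
  'hive.resultset.use.unique.column.names=false')
echo "$url"
# prints: jdbc:hive2://<HOST>:10000/default?hive.resultset.use.unique.column.names=false
```

The resulting string can be passed to owlcheck with -c in place of the plain URL.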

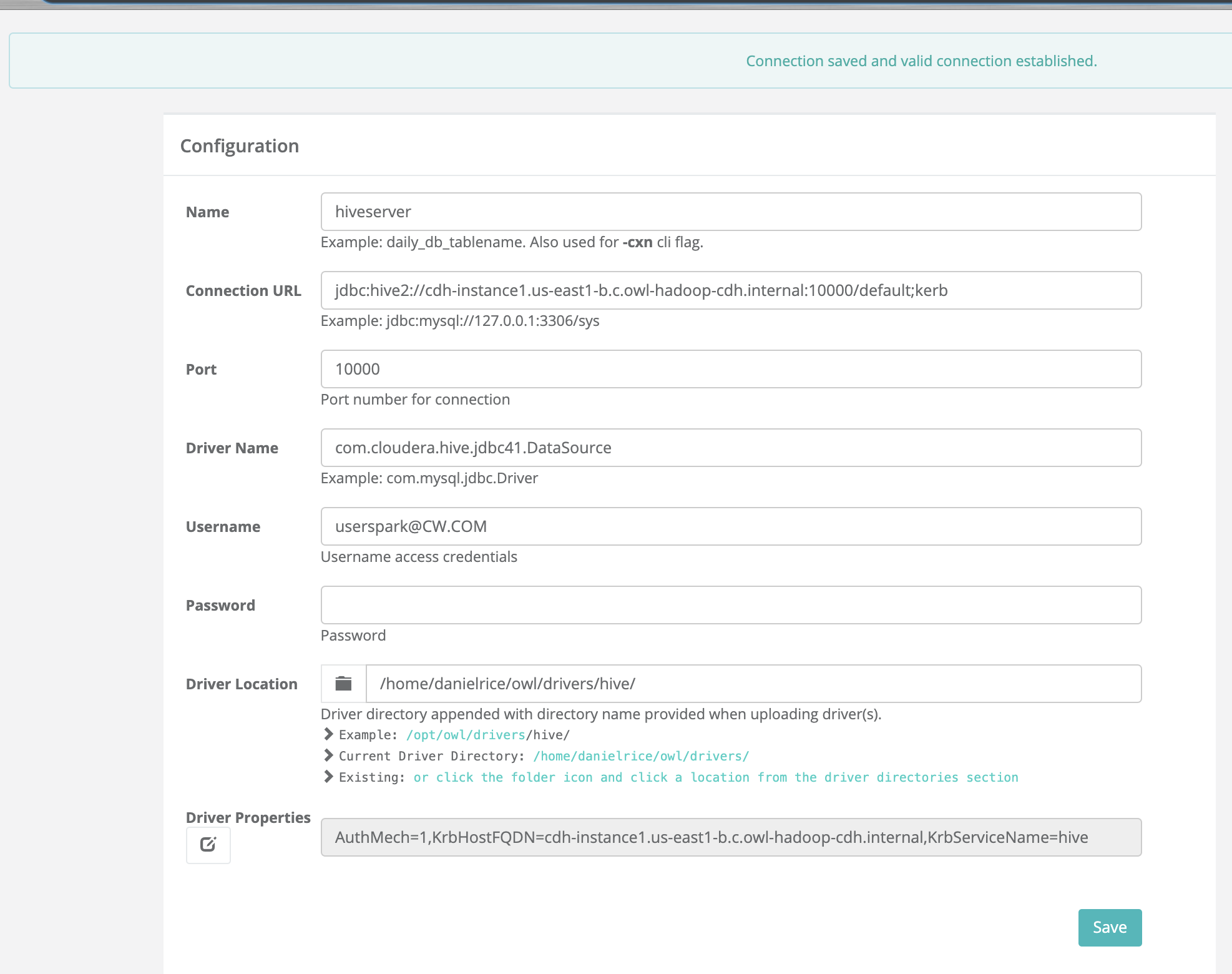

Test your Hive connection via beeline to make sure it is correct before going further.

beeline -u 'jdbc:hive2://<HOST>:10000/default;principal=hive/[email protected];useSSL=true' -d org.apache.hive.jdbc.HiveDriver

Kerberos Example

jdbc:hive2://<HOST>:10000/default;principal=hive/[email protected];useSSL=true

Connecting DQ WebApp to Hive JDBC

Notice the driver properties for Kerberos and principals.

In very rare cases where you can't get the jar files to connect properly, one workaround is to add this to the DQ-web startup script.

$JAVA_HOME/bin/java -Dloader.path=lib,/home/danielrice/owl/drivers/hive/ \

-DowlAppender=owlRollingFile \

-DowlLogFile=owl-web -Dlog4j.configurationFile=file://$INSTALL_PATH/config/log4j2.xml \

$HBASE_KERBEROS -jar $owlweb $ZKHOST_KER \

--logging.level.org.springframework=INFO $TIMEOUT \

--server.session.timeout=$TIMEOUT \

--server.port=9001 > $LOG_PATH/owl-web-app.out 2>&1 & echo $! >$INSTALL_PATH/pids/owl-web.pid

Class Not Found apache or client or log4j etc...

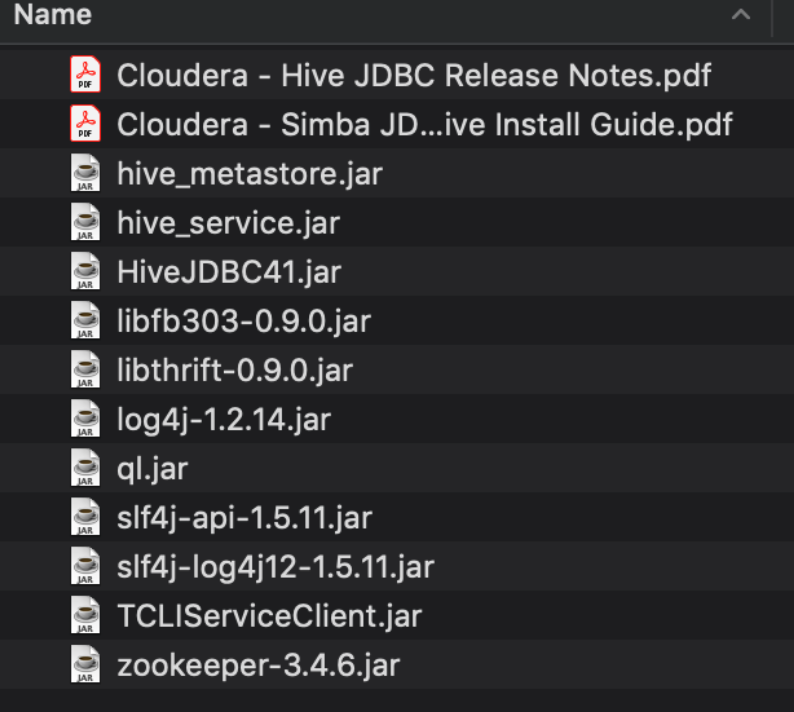

Any class not found error means that you do not have the "standalone-jar" or you do not have all the jars needed for the driver.

Hive JDBC Jars

It is common for Hive to need a lot of .jar files to complete the driver setup.

Java jar cmds

Sometimes it is helpful to look inside the jar and make sure it has all the needed files.

jar -tvf hive-jdbc.jar
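To check for one specific class rather than reading the whole listing, the output can be filtered. This is a minimal sketch: the helper name `jar_listing_has_class` is hypothetical, and it reads a jar listing on standard input so it works with the output of jar -tf or jar -tvf.

```shell
# Succeeds (exit status 0) if the jar listing on stdin contains the given
# fully qualified class name, e.g. org.apache.hive.jdbc.HiveDriver.
jar_listing_has_class() {
  # Convert dots to slashes to match the entry path inside the jar.
  _class_path="$(printf '%s' "$1" | tr '.' '/').class"
  grep -q -F -- "$_class_path"
}

# Example call (the jar name is illustrative):
#   jar -tf hive-jdbc.jar | jar_listing_has_class org.apache.hive.jdbc.HiveDriver \
#     && echo "driver class found" || echo "driver class missing"
```

If the class is missing, the jar is probably not the standalone jar, and the other jars the driver needs must be added alongside it.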